WA2. Designing a Rational Vacuum Cleaner Agent¶

Statement¶

In this assignment, you will design a Rational Agent that cleans a room using the minimum possible number of actions. The goal is reached when the entire environment is clean and the agent is back home (starting location A).

Part A:

- In Part A, you need to identify the PEAS (Performance Measure, Environment, Actuators, and Sensors).

Part B:

- You are required to write pseudo-code in Java or Python to implement this Rational Agent.

- Your pseudo-code must represent the following:

- Implementation of the static environment. You need to define an array or some other data structure that represents the locations (A to P).

- A function/method to determine what action to take. Decision should be Move (Which direction), suck dirt, or Go back Home.

- A function/method to determine which direction to go.

- A function/method to identify the route and navigate to home from the current location.

- A function/method to test if the desired goal is achieved or not.

Part C:

- Here, you are required to write a summary of at least one page focusing on the following points:

- Your lessons learned from doing this assignment.

- Do you think your algorithm should be able to reach the goal with given energy points (100)?

- What changes do you need to make if your static location gets bigger?

- The real world is absurdly complex; a smart home vacuum cleaner needs to consider obstacles like furniture, humans, or pets. What changes do you need to make to handle these obstacles?

Information¶

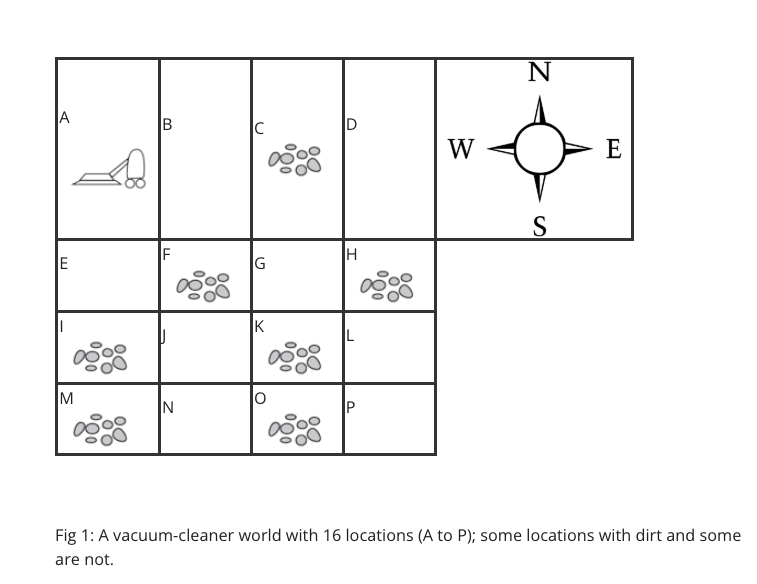

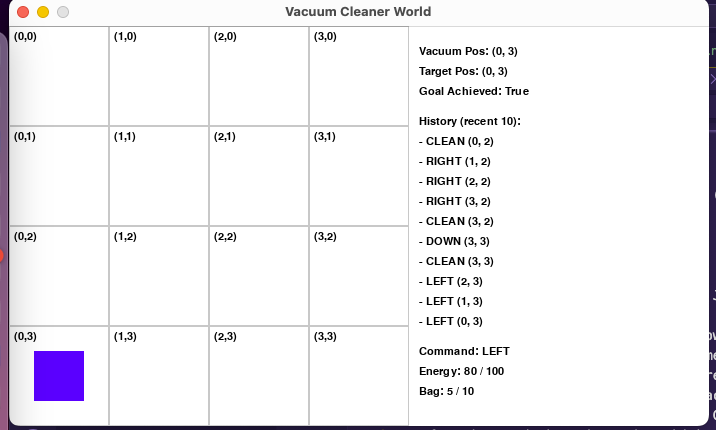

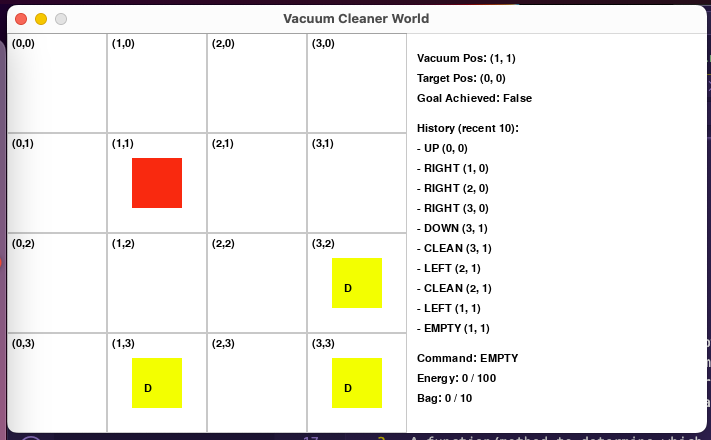

- Below is an example of a simple 4*4 world.

| Image 1: 4*4 world |

|---|

|

- Prior Knowledge:

- The entire environment is divided into a 4 by 4 grid of square locations.

- The agent (vacuum cleaner) has an initial energy of 100 points.

- The agent can move only North, South, East, or West. It can’t move diagonally.

- Each action costs 1 energy point. For example, each move costs 1 energy point and each suck costs 1 energy point.

- The agent has a bag that collects dirt. The maximum capacity is 10.

- After each suck, the agent needs to check its bag; if it is full, the agent goes back to Home (location A), self-empties the bag, and starts vacuuming again.

- You will be graded on the following:

- Part A, the PEAS is defined correctly for a Vacuum cleaner agent? (20 points)

- Part B, the data structure is appropriate for such an environment? (10 points)

- Part B, the pseudo-code is clearly defined for what action to take in different scenarios? (30 points)

- Part B, the pseudo-code is clearly defined for whether the agent knows a goal state is achieved or not? (10 points)

- Part B, the calculation for energy and bag capacity (full yet?) is done correctly? For example, does the agent know when to head back home for self-empty the bag? (10 points)

- Part C, all of the questions clearly answered in a minimum 1-page summary? (20 points)

Table of Contents¶

This text will answer each part of the assignment separately, and then include the full source code at the end.

- WA2. Designing a Rational Vacuum Cleaner Agent

- Statement

- Information

- Table of Contents

- Part A: PEAS

- Part B: Pseudo-code

- Part C: Summary

- Your lessons learned from doing this assignment

- Do you think your algorithm should be able to reach the goal with given energy points (100)?

- What changes do you need to make if your static location gets bigger?

- The real world is absurdly complex, for a smart home vacuum cleaner need to consider obstacles like furniture, human or pet. What changes you need to make to handle these obstacles

- Grading Criteria

- Part A, the PEAS is defined correctly for a Vacuum cleaner agent? (20 points)

- Part B, the data structure is appropriate for such an environment? (10 points)

- Part B, the pseudo-code is clearly defined for what action to take in different scenarios? (30 points)

- Part B, the pseudo-code is clearly defined for whether the agent knows a goal state is achieved or not? (10 points)

- Part B, the calculation for energy and bag capacity (full yet?) is done correctly? For example, does the agent know when to head back home for self-empty the bag? (10 points)

- Part C, all of the questions clearly answered in a minimum 1-page summary? (20 points)

- Source Code Explanation

- Source Code

Part A: PEAS¶

The Performance of a self-vacuum cleaner can be measured by the cleanliness of the room (keep the room clean), efficiency (use less energy and move faster), safety (avoid obstacles and accidents), and mobility (move around or climb stairs). The Environment includes the house and its interiors. The Actuators include wheels, vacuum, and brushes. The Sensors include cameras, dirt detectors, cliff sensors, bump sensors, and wall sensors.

The environment is dynamic (environment changes as other agents can move around such as pets or humans), partially observable (agent can only see up to the limits of its sensors), multi-agent (other agents can be present), stochastic (the environment is unpredictable as other actors can add more dirt or obstacles), continuous (the agent can move in any direction), and unknown (the designer of the agent does not know the exact layout of the house beforehand, and the layout can change).

Part B: Pseudo-code¶

- I used `PyGame` to visualize the agent’s movement in the environment.

- The source code is available at the end of this text.

- You may need to install `PyGame` using `pip install pygame`.

- If you do not want to install anything, you can comment out the following lines in the source code (in the `main` function) and/or delete the `VisualWorld` class:

      world = VisualWorld(state)  # line 349
      world.visualize()  # line 378

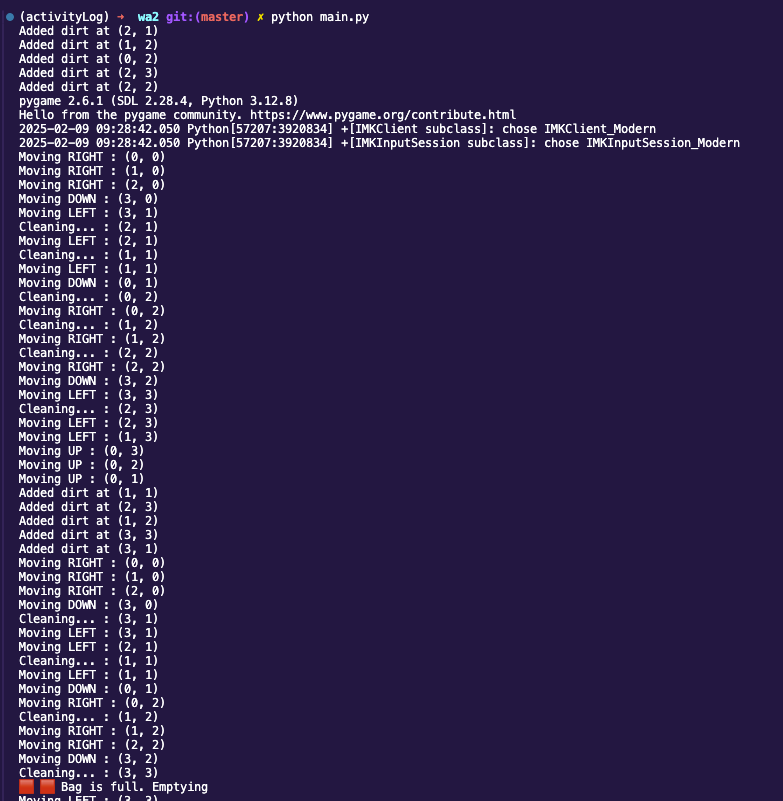

If you don’t want to visualize the agent’s movement, you can still follow the logs:

| Image 2: Logs of the agent’s movement in the environment |

|---|

|

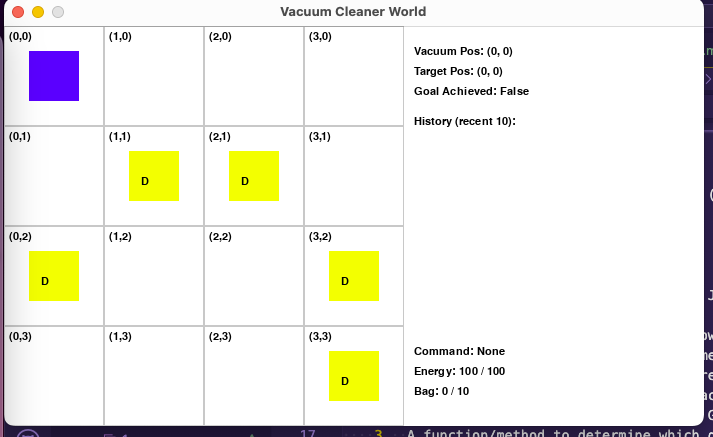

- The world will start at the following state, according to the prior knowledge in the prompt:

  - Vacuum cleaner at location `[0,0]`.

  - A few dirty places that are randomly placed.

  - Energy points at `100`.

  - Bag capacity at `10`, and it is empty.

  - The agent can only move North, South, East, or West corresponding to `Up`, `Down`, `Right`, and `Left` respectively.

  - Goal achieved is `False` as there are some dirty places left.

  - Blue square is the vacuum cleaner.

  - Yellow squares with `D` are dirty places.

  - Every second represents a cycle.

| Image 3: Initial state of the world |

|---|

|

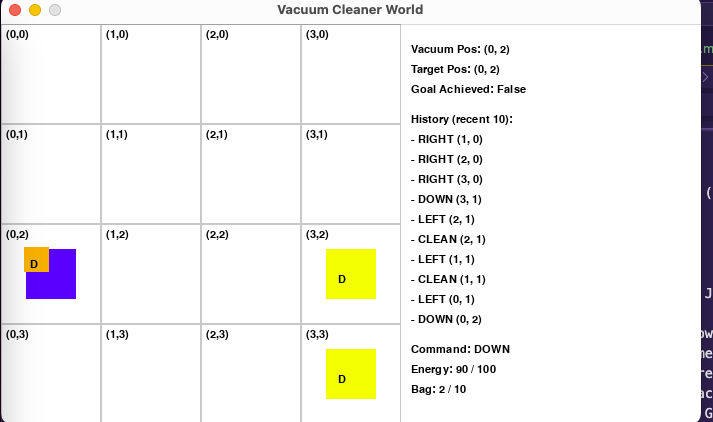

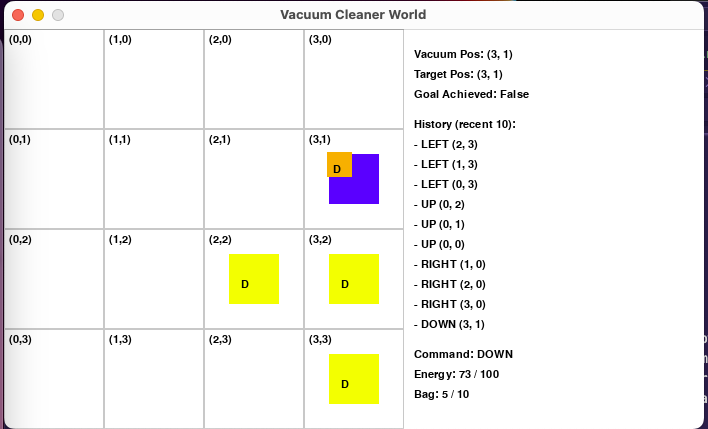

- The agent starts moving tile by tile, going right first, and then down, as shown in the following images:

- If it finds a dirty place, the color of the dirt will change to orange, and it will be sucked in the next cycle (if the circumstances allow).

| Image 4: Agent moving around |

|---|

|

- The agent will keep following its path until it reaches the final tile, and then goes back to the starting location:

  - The `Goal Achieved` is now `True`.

  - Notice the history of the 10 most recent commands to the right of the world.

| Image 5: Agent moving back to the starting location |

|---|

|

- The cycle does not end, as I keep generating dirt at random places every 10 seconds:

- The agent then does another round after 10 seconds.

- The agent will keep doing rounds until its energy is 0 or the user closes the window.

| Image 6: Agent moving around after the first round |

|---|

|

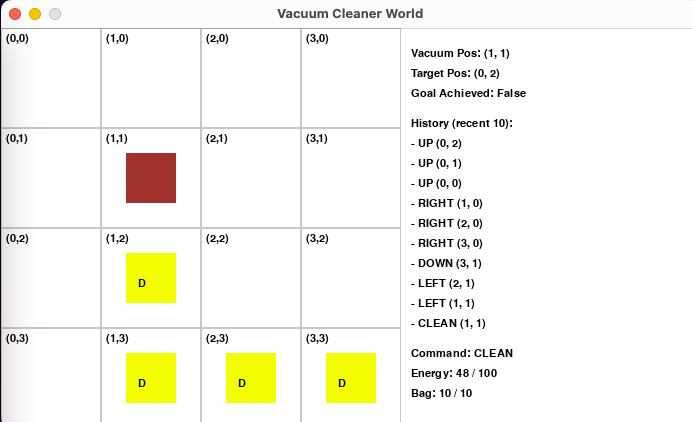

- When the bag is full, the agent will empty it and then continue vacuuming:

  - The agent color will change to `brown` when its bag is full.

  - It will go back to the starting location to empty the bag.

  - It will then move to the waypoint it was heading to (`state['target_pos']`) and continue vacuuming.

| Image 7: Agent bag is full, going back to empty |

|---|

|

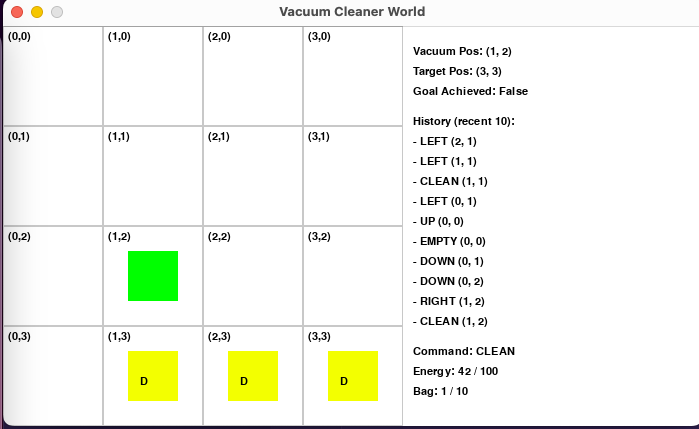

- After the agent has sucked up dirt, it signals that it can take more by temporarily turning `green`:

| Image 8: Agent signals that its bag is not full |

|---|

|

- Once the agent runs out of energy, it can’t do anything, and the world will stop:

- The agent will stop at the last location it was in.

- The agent’s color will change to `red`.

| Image 9: Agent stops when it runs out of energy |

|---|

|

Part C: Summary¶

Your lessons learned from doing this assignment¶

Do you think your algorithm should be able to reach the goal with given energy points (100)?¶

- Yes, I tested that by keeping the model running until the agent runs out of energy, and it was able to do 2-3 rounds, that is, achieved its goal of cleaning all tiles and going back to the starting location.

- See Image 9 above.
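As a rough cross-check of the answer above, the cost of one round can also be estimated by hand. The sketch below assumes the row-by-row sweep used in `one_round`, one energy point per move or suck as stated in the prompt, and no trips home to empty the bag, so it gives an upper bound on the number of complete rounds rather than a prediction. `round_cost` and `max_rounds` are hypothetical helpers that are not part of the submitted code.

```python
def round_cost(rows: int, cols: int, dirty_tiles: int) -> int:
    """Estimate the energy spent on one sweep of a rows x cols grid.

    Assumes a serpentine sweep that starts and ends at the top-left tile,
    one energy point per move and per suck, and no detour to empty the bag.
    """
    sweep_moves = rows * cols - 1  # every other tile is entered exactly once
    # The sweep ends in the last row: on the left edge when the number of
    # rows is even, on the right edge when it is odd.
    end_x = 0 if rows % 2 == 0 else cols - 1
    end_y = rows - 1
    return_moves = end_x + end_y  # Manhattan distance back to (0, 0)
    return sweep_moves + return_moves + dirty_tiles


def max_rounds(energy: int, rows: int, cols: int, dirty_tiles: int) -> int:
    """Upper bound on complete rounds for a given energy budget."""
    return energy // round_cost(rows, cols, dirty_tiles)


if __name__ == "__main__":
    print(round_cost(4, 4, 6))       # 18 moves + 6 sucks = 24
    print(max_rounds(100, 4, 4, 6))  # 4
```

Each time the bag fills up, the extra trip home and back adds to the cost, which is why fewer complete rounds fit in practice.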

What changes do you need to make if your static location gets bigger?¶

- The code that I wrote allows for the `rows` and `cols` to be supplied through the `state` dictionary.

- To make the static location bigger, you need to increase the `rows` and `cols` in the `state` dictionary, and update the waypoints in the `one_round` function, which are currently hard-coded for a 4 by 4 grid.

- The ability for the agent to achieve its goal may be affected by the size of the environment, as the agent may run out of energy before it can clean all the tiles.
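Because `go_to` moves along the x axis first and then along y, the waypoints of a sweep can be derived from `rows` and `cols` instead of being typed by hand. The sketch below shows one way to do that. `sweep_waypoints` and `trace_path` are hypothetical helpers that are not part of the submitted code, and `trace_path` only imitates the x-first movement rule so that the coverage can be checked without running the simulation.

```python
def sweep_waypoints(rows: int, cols: int) -> list[dict[str, int]]:
    """Return go_to targets for a row-by-row sweep that ends at home (0, 0).

    Each target sits at the far end of the current row and one row further
    down, so an x-first move sweeps the row and then steps to the next one.
    """
    waypoints = []
    for y in range(rows):
        end_x = cols - 1 if y % 2 == 0 else 0  # alternate sweep direction
        next_y = min(y + 1, rows - 1)          # the last row has no row below
        waypoints.append({"x": end_x, "y": next_y})
    waypoints.append({"x": 0, "y": 0})         # finish at home
    return waypoints


def trace_path(waypoints, start=(0, 0)):
    """List the tiles visited when each waypoint is reached x-first, then y."""
    x, y = start
    visited = [(x, y)]
    for target in waypoints:
        while x != target["x"]:
            x += 1 if target["x"] > x else -1
            visited.append((x, y))
        while y != target["y"]:
            y += 1 if target["y"] > y else -1
            visited.append((x, y))
    return visited


if __name__ == "__main__":
    plan = sweep_waypoints(rows=4, cols=4)
    print(plan)
    print(len(set(trace_path(plan))))  # number of distinct tiles visited
```

For a 4 by 4 grid this reproduces the five targets used in `one_round`, so the function could loop over the generated list and call `go_to` for each entry.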

The real world is absurdly complex, for a smart home vacuum cleaner need to consider obstacles like furniture, human or pet. What changes you need to make to handle these obstacles¶

- My code does not handle obstacles like furniture, humans, or pets.

- The `decide_direction_for_next_move` function can be modified as follows:

  - Check what is in the next tile before moving.

  - If there is an obstacle in the next expected tile, the agent should move in a direction that is perpendicular to its current direction of travel.

  - If the agent was originally moving horizontally, it should move vertically by one tile, and then resume the original direction.

  - You may need to keep track of the direction and coordinates of the place you were heading to before the obstacle, so you can get back on track.

- There is a different approach that relies on updating the movement plan in the `one_round` function:

  - This function plans the agent’s movement in a round, and it can be updated to include the obstacle avoidance logic.

  - If obstacles are permanent and not moving, the plan can be loaded into the state, and the agent can follow the updated plan without recalculating it every time.

  - This approach requires more user input, and is less scalable.
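For permanent obstacles, one common way to compute such a plan is a breadth-first search over the grid, which returns a shortest route made of the four allowed moves. The sketch below is an illustration rather than part of the submitted code: `plan_route`, the `obstacles` set of blocked `(x, y)` tiles, and the tuple-based positions are all assumptions. As in the `move` function, `UP` decreases `y`.

```python
from collections import deque

# Command name -> (dx, dy); UP decreases y, matching the move function.
MOVES = {"UP": (0, -1), "DOWN": (0, 1), "LEFT": (-1, 0), "RIGHT": (1, 0)}


def plan_route(start, goal, rows, cols, obstacles):
    """Return a shortest list of commands from start to goal, or None.

    start and goal are (x, y) tuples; obstacles is a set of blocked tiles.
    """
    queue = deque([start])
    came_from = {start: None}  # tile -> (previous tile, command used)
    while queue:
        current = queue.popleft()
        if current == goal:
            break
        for command, (dx, dy) in MOVES.items():
            nxt = (current[0] + dx, current[1] + dy)
            inside = 0 <= nxt[0] < cols and 0 <= nxt[1] < rows
            if inside and nxt not in obstacles and nxt not in came_from:
                came_from[nxt] = (current, command)
                queue.append(nxt)

    if goal not in came_from:
        return None  # the goal is walled off

    # Walk back from the goal to the start, then reverse the commands.
    commands = []
    tile = goal
    while came_from[tile] is not None:
        previous, command = came_from[tile]
        commands.append(command)
        tile = previous
    commands.reverse()
    return commands


if __name__ == "__main__":
    # A piece of furniture on (1, 0) blocks the direct route along row 0.
    print(plan_route((0, 0), (2, 0), rows=4, cols=4, obstacles={(1, 0)}))
```

Moving obstacles such as pets or humans would still need a check before every step, with the route recomputed when the next tile turns out to be blocked.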

Grading Criteria¶

Part A, the PEAS is defined correctly for a Vacuum cleaner agent? (20 points)¶

Part A is answered above. See Part A: PEAS.

Part B, the data structure is appropriate for such an environment? (10 points)¶

Here is the data structure used in the code:

    state = {
        "vacuum_pos": {"x": 0, "y": 0},
        "cols": 4,
        "rows": 4,
        "dirtyTiles": [{"name": "D", "x": 1, "y": 1}],
        "history": [],
        "current_command": None,
        "energy": 100,
        "energy_capacity": 100,
        "bag": 0,
        "bag_capacity": 10,
        "target_pos": {"x": 0, "y": 0},
        "goal_achieved": False
    }
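The prompt labels the locations A to P, while the `state` dictionary uses `x`/`y` coordinates. A minimal sketch of a translation between the two is shown below. It assumes that the labels run row by row from the top-left tile (A at `(0, 0)`, D at `(3, 0)`, P at `(3, 3)`), which may not match the figure, and `label_to_pos` and `pos_to_label` are hypothetical helpers that are not part of the submitted code.

```python
import string


def label_to_pos(label: str, cols: int = 4) -> dict[str, int]:
    """Convert a location label such as 'F' to an {'x', 'y'} position.

    Assumes labels are assigned row by row, starting with 'A' at (0, 0).
    """
    index = string.ascii_uppercase.index(label)
    return {"x": index % cols, "y": index // cols}


def pos_to_label(pos: dict[str, int], cols: int = 4) -> str:
    """Convert an {'x', 'y'} position back to its location label."""
    return string.ascii_uppercase[pos["y"] * cols + pos["x"]]


if __name__ == "__main__":
    print(label_to_pos("A"))              # {'x': 0, 'y': 0}
    print(label_to_pos("P"))              # {'x': 3, 'y': 3}
    print(pos_to_label({"x": 1, "y": 1}))  # F
```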

Part B, the pseudo-code is clearly defined for what action to take in different scenarios? (30 points)¶

- There is a variable `state['current_command']` that keeps track of the current action.

- Possible commands:

  - `CLEAN`: to suck the dirt from the current tile.

  - `UP`, `DOWN`, `LEFT`, `RIGHT`: to move the agent in the corresponding direction.

  - `EMPTY`: to empty the bag.

- The `state['history']` list can always be inspected to investigate the agent’s movement and actions.

Below is the source code that defines and chooses the different actions:

    def decide_direction_for_next_move(state, target_pos):
        """Decide the direction for the next move (x axis first, then y)."""
        vacuum_pos = state['vacuum_pos']
        vacuum_x = vacuum_pos['x']
        vacuum_y = vacuum_pos['y']
        target_x = target_pos['x']
        target_y = target_pos['y']

        direction = None  # stays None when the vacuum is already on the target
        if vacuum_x < target_x:
            direction = "RIGHT"
        elif vacuum_x > target_x:
            direction = "LEFT"
        elif vacuum_y < target_y:
            direction = "DOWN"
        elif vacuum_y > target_y:
            direction = "UP"
        return direction


    def suck(state):
        """Suck the dirt at the current position."""
        vacuum_pos = state['vacuum_pos']
        vacuum_x = vacuum_pos['x']
        vacuum_y = vacuum_pos['y']

        if is_dirty(state, vacuum_pos):
            print(f"Cleaning... : ({vacuum_x}, {vacuum_y})")
            state['history'].append((vacuum_pos, "CLEAN"))
            state['current_command'] = "CLEAN"
            state['energy'] -= 1  # every suck costs one energy point
            state['bag'] += 1
            # Remove the cleaned tile from the list of dirty tiles.
            state['dirtyTiles'] = [
                item for item in state['dirtyTiles']
                if item['x'] != vacuum_x or item['y'] != vacuum_y]
            time.sleep(1)

            if bag_full(state):
                print("🟥 🟥 Bag is full. Emptying")
                empty_bag(state, state['target_pos'])


    def empty_bag(state, target_pos):
        """Go home, empty the bag, then head to target_pos."""
        vacuum_pos = state['vacuum_pos']
        go_to(state, {'x': 0, 'y': vacuum_pos['y']})  # go to x=0
        go_to(state, {'x': 0, 'y': 0})  # go to y=0
        if not is_vacuum_in_position(state, {'x': 0, 'y': 0}):
            return  # ran out of energy before reaching home
        state['bag'] = 0
        print("🧳 Bag is empty.")
        state['current_command'] = "EMPTY"
        state['history'].append((state['vacuum_pos'], "EMPTY"))
        time.sleep(3)
        go_to(state, target_pos)
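The prompt asks for a single function that decides between moving, sucking dirt, and going home, whereas the submitted code spreads that decision across `go_to`, `do_actions_after_move`, `suck`, and `empty_bag`. The sketch below gathers the same checks into one place without executing anything. `decide_action` and the labels `STOP`, `GO_HOME`, `DONE`, and `MOVE` are hypothetical names introduced for illustration; only `CLEAN` and `EMPTY` come from the original command list.

```python
def decide_action(state):
    """Pick the next command from the current state without executing it."""
    pos = state["vacuum_pos"]
    at_home = pos["x"] == 0 and pos["y"] == 0
    here_dirty = any(
        tile["x"] == pos["x"] and tile["y"] == pos["y"]
        for tile in state["dirtyTiles"]
    )

    if state["energy"] <= 0:
        return "STOP"                             # nothing can be done anymore
    if state["bag"] >= state["bag_capacity"]:
        return "EMPTY" if at_home else "GO_HOME"  # a full bag comes first
    if here_dirty:
        return "CLEAN"
    if not state["dirtyTiles"]:
        return "DONE" if at_home else "GO_HOME"   # clean room: finish at home
    return "MOVE"                                 # keep sweeping


if __name__ == "__main__":
    example = {
        "vacuum_pos": {"x": 1, "y": 1},
        "dirtyTiles": [{"name": "D", "x": 1, "y": 1}],
        "energy": 100,
        "bag": 0,
        "bag_capacity": 10,
    }
    print(decide_action(example))  # CLEAN
```

The order of the checks encodes the priorities: an empty battery overrides everything, a full bag overrides cleaning, and the direction of a `MOVE` would still come from `decide_direction_for_next_move`.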

Part B, the pseudo-code is clearly defined for whether the agent knows a goal state is achieved or not? (10 points)¶

- `state['goal_achieved']` is set to `True` when the agent has cleaned all the dirty tiles, `False` otherwise.

- The state is checked after executing every command using the following code:

      state['goal_achieved'] = len(state['dirtyTiles']) == 0
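The check above only looks at the dirt. The prompt also asks for the agent to be back at its starting location, so a stricter test could combine both conditions. The following is a sketch under that reading of the prompt; `goal_reached` and its `home` parameter are hypothetical and not part of the submitted code.

```python
def goal_reached(state, home=None):
    """Return True when no dirt is left and the vacuum is back home."""
    if home is None:
        home = {"x": 0, "y": 0}  # location A in the prompt
    no_dirt_left = len(state["dirtyTiles"]) == 0
    at_home = state["vacuum_pos"] == home
    return no_dirt_left and at_home


if __name__ == "__main__":
    clean_but_away = {"vacuum_pos": {"x": 0, "y": 3}, "dirtyTiles": []}
    clean_and_home = {"vacuum_pos": {"x": 0, "y": 0}, "dirtyTiles": []}
    print(goal_reached(clean_but_away))  # False
    print(goal_reached(clean_and_home))  # True
```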

Part B, the calculation for energy and bag capacity (full yet?) is done correctly? For example, does the agent know when to head back home for self-empty the bag? (10 points)¶

- We check for energy left and bag capacity after every action/command.

- The checks for `no_energy` and `bag_full` are done in the following functions:

      def no_energy(state):
          """Check if no energy is left (energy is 0 or less)."""
          return state['energy'] <= 0


      def bag_full(state):
          """Check if the bag is full."""
          return state['bag'] >= state['bag_capacity']
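The two checks above report an empty battery or a full bag only once it has happened. A possible extension, sketched below, is to turn back while there is still enough energy to reach home. It assumes that home is at `(0, 0)`, that each step costs one energy point as stated in the prompt, and that nothing blocks the way; `steps_to_home`, `should_head_home`, and the `margin` parameter are hypothetical names that are not part of the submitted code.

```python
def steps_to_home(state):
    """Manhattan distance from the vacuum to home at (0, 0)."""
    pos = state["vacuum_pos"]
    return abs(pos["x"]) + abs(pos["y"])


def should_head_home(state, margin=2):
    """Return True when the agent should stop cleaning and go home.

    The default margin of 2 covers one more step away from home plus the
    extra step needed to come back from it.
    """
    bag_is_full = state["bag"] >= state["bag_capacity"]
    low_energy = state["energy"] < steps_to_home(state) + margin
    return bag_is_full or low_energy


if __name__ == "__main__":
    far_corner = {"vacuum_pos": {"x": 3, "y": 3}, "energy": 7,
                  "bag": 0, "bag_capacity": 10}
    print(steps_to_home(far_corner))     # 6
    print(should_head_home(far_corner))  # True, since 7 < 6 + 2
```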

Part C, all of the questions clearly answered in a minimum 1-page summary? (20 points)¶

Part C is answered above. See Part C: Summary.

Source Code Explanation¶

- The entry point is the `main` function.

- We start by initializing the state of the world, and then instantiate the `VisualWorld` class to visualize the agent’s movement.

- This step is optional, and you can comment it out if you don’t want to install `PyGame`.

- Every function has some comments for important information.

- The `go_to` function executes multiple agent moves, and after every move we do the necessary checks to determine the next action/command.

- The `one_round` function ensures that the vacuum cleaner visits every tile in the environment, and takes the appropriate actions along the way.

- The `simulate_updates` function runs the rounds in a separate thread (using `threading`), so that the state updates happen in parallel with the redrawing of the visuals.

- The source code included below does not include the `VisualWorld` class, to keep it simpler; however, the entire source code is attached in the `main.py` file.

Source Code¶

    import sys
    import time


    def is_dirty(state, target_pos):
        """Check if the target position has a dirty item."""
        for item in state['dirtyTiles']:
            if item['x'] == target_pos['x'] and item['y'] == target_pos['y']:
                return item['name'] == "D"
        return False


    def is_vacuum_in_position(state, target_pos):
        """Check if the vacuum is in the target position."""
        return (state['vacuum_pos']['x'] == target_pos['x']
                and state['vacuum_pos']['y'] == target_pos['y'])


    def no_energy(state):
        """Check if no energy is left (energy is 0 or less)."""
        return state['energy'] <= 0


    def bag_full(state):
        """Check if the bag is full."""
        return state['bag'] >= state['bag_capacity']


    def bag_can_fit(state):
        """Check if one more item can fit in the bag."""
        return state['bag'] < state['bag_capacity']

    def decide_direction_for_next_move(state, target_pos):
        """Decide the direction for the next move (x axis first, then y)."""
        vacuum_pos = state['vacuum_pos']
        vacuum_x = vacuum_pos['x']
        vacuum_y = vacuum_pos['y']
        target_x = target_pos['x']
        target_y = target_pos['y']

        direction = None  # stays None when the vacuum is already on the target
        if vacuum_x < target_x:
            direction = "RIGHT"
        elif vacuum_x > target_x:
            direction = "LEFT"
        elif vacuum_y < target_y:
            direction = "DOWN"
        elif vacuum_y > target_y:
            direction = "UP"
        return direction

    def move(state, direction):
        """Move the vacuum one tile in the given direction."""
        vacuum_pos = state['vacuum_pos']
        state['goal_achieved'] = len(state['dirtyTiles']) == 0
        vacuum_x = vacuum_pos['x']
        vacuum_y = vacuum_pos['y']
        next_x = vacuum_x
        next_y = vacuum_y

        # A move that would leave the grid keeps the vacuum in place.
        if direction == "UP" and vacuum_y > 0:
            next_y = vacuum_y - 1
        elif direction == "DOWN" and vacuum_y < state['rows'] - 1:
            next_y = vacuum_y + 1
        elif direction == "LEFT" and vacuum_x > 0:
            next_x = vacuum_x - 1
        elif direction == "RIGHT" and vacuum_x < state['cols'] - 1:
            next_x = vacuum_x + 1

        next_pos = {'x': next_x, 'y': next_y}
        state['vacuum_pos'] = next_pos
        state['current_command'] = direction
        state['history'].append((next_pos, direction))
        state['energy'] -= 1  # every move costs one energy point
        print(f"Moving {direction} : ({vacuum_x}, {vacuum_y})")
        time.sleep(1)

    def suck(state):
        """Suck the dirt at the current position."""
        vacuum_pos = state['vacuum_pos']
        vacuum_x = vacuum_pos['x']
        vacuum_y = vacuum_pos['y']

        if is_dirty(state, vacuum_pos):
            print(f"Cleaning... : ({vacuum_x}, {vacuum_y})")
            state['history'].append((vacuum_pos, "CLEAN"))
            state['current_command'] = "CLEAN"
            state['energy'] -= 1  # every suck costs one energy point
            state['bag'] += 1
            # Remove the cleaned tile from the list of dirty tiles.
            state['dirtyTiles'] = [
                item for item in state['dirtyTiles']
                if item['x'] != vacuum_x or item['y'] != vacuum_y]
            time.sleep(1)

            if bag_full(state):
                print("🟥 🟥 Bag is full. Emptying")
                empty_bag(state, state['target_pos'])


    def empty_bag(state, target_pos):
        """Go home, empty the bag, then head to target_pos."""
        vacuum_pos = state['vacuum_pos']
        go_to(state, {'x': 0, 'y': vacuum_pos['y']})  # go to x=0
        go_to(state, {'x': 0, 'y': 0})  # go to y=0
        if not is_vacuum_in_position(state, {'x': 0, 'y': 0}):
            return  # ran out of energy before reaching home
        state['bag'] = 0
        print("🧳 Bag is empty.")
        state['current_command'] = "EMPTY"
        state['history'].append((state['vacuum_pos'], "EMPTY"))
        time.sleep(3)
        go_to(state, target_pos)

    def do_actions_after_move(state, target_pos):
        """Run the checks that follow every move and suck dirt if possible."""
        vacuum_pos = state['vacuum_pos']
        state['goal_achieved'] = len(state['dirtyTiles']) == 0
        if no_energy(state):
            print("🪫 No energy left. Cannot perform any action.")
        elif is_dirty(state, vacuum_pos) and bag_can_fit(state):
            suck(state)
            time.sleep(1)


    def go_to(state, target_pos):
        """Move the vacuum tile by tile until it reaches target_pos."""
        target_x = target_pos['x']
        target_y = target_pos['y']
        state['target_pos'] = target_pos
        state['goal_achieved'] = len(state['dirtyTiles']) == 0

        if no_energy(state):
            print(f"No energy left. Cannot move to ({target_x}, {target_y}).")
            return

        # strategy is to move in the x direction first, then in the y direction
        while state['vacuum_pos']['x'] != target_x or state['vacuum_pos']['y'] != target_y:
            if no_energy(state):
                print("No energy left.")
                return
            direction = decide_direction_for_next_move(state, target_pos)
            move(state, direction)
            do_actions_after_move(state, target_pos)

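    def put_dirt(state):
        """Add a few (at most 5) dirty tiles to the state at random positions."""
        import random
        rows, cols = state['rows'], state['cols']
        tiles = rows * cols
        # Clamp the lower bound so that randint always gets a valid range,
        # also on grids that are larger or smaller than 4x4.
        high = min(5, tiles // 3)
        low = min(max(3, tiles // 4), high)
        num_items = random.randint(low, high)
        # Never ask for more dirt than there are free tiles.
        free_tiles = tiles - len(state['dirtyTiles']) - 1  # minus the vacuum tile
        num_items = min(num_items, max(free_tiles, 0))

        for _ in range(num_items):
            # The first pick skips row 0 and column 0; retries may use any tile.
            x = random.randint(1, cols - 1)
            y = random.randint(1, rows - 1)
            # generate new position if the position is already occupied or vacuum_pos
            while any(item['x'] == x and item['y'] == y for item in state['dirtyTiles']) or (state['vacuum_pos']['x'] == x and state['vacuum_pos']['y'] == y):
                x = random.randint(0, cols - 1)
                y = random.randint(0, rows - 1)
            state['dirtyTiles'].append({"name": "D", "x": x, "y": y})
            print(f"Added dirt at ({x}, {y})")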

    def main():
        state = {
            "vacuum_pos": {"x": 0, "y": 0},
            "cols": 4,
            "rows": 4,
            "dirtyTiles": [{"name": "D", "x": 1, "y": 1}],
            "history": [],
            "current_command": None,
            "energy": 100,
            "energy_capacity": 100,
            "bag": 0,
            "bag_capacity": 10,
            "target_pos": {"x": 0, "y": 0},
            "goal_achieved": False
        }
        put_dirt(state)
        # VisualWorld is defined in the full main.py and omitted from this listing.
        world = VisualWorld(state)

        # Simulate updates to the state in a separate thread or loop
        def one_round():
            if no_energy(state):
                return
            # Waypoints for a row-by-row sweep of the 4x4 grid, ending at home.
            go_to(state, {'x': 3, 'y': 1})
            go_to(state, {'x': 0, 'y': 2})
            go_to(state, {'x': 3, 'y': 3})
            go_to(state, {'x': 0, 'y': 3})
            go_to(state, {'x': 0, 'y': 0})

        def simulate_updates():
            stop = False
            time.sleep(10)
            while not stop:
                if not no_energy(state):
                    one_round()
                    time.sleep(5)
                    put_dirt(state)
                    time.sleep(5)
                else:
                    stop = True
                    print("👋 👋 No energy left. Stopping the simulation.")

        # Run updates and visualization in parallel
        import threading
        update_thread = threading.Thread(target=simulate_updates)
        update_thread.start()
        world.visualize()


    if __name__ == "__main__":
        main()