1. Fundamentals of AI

Introduction [6]

- Intelligence can be defined as the ability to learn and solve problems. More precisely, it covers the capacity to solve problems, the ability to act rationally, and the ability to act like humans.

- Definitions of Artificial Intelligence fall into the following four categories:

- Thinking humanly.

- Thinking rationally.

- Acting humanly.

- Acting rationally.

- Turing Test:

- It is a thought experiment that was proposed by Alan Turing in 1950.

- The test involves a human being communicating via a teletype with an unknown party that might be either another person or a computer.

- If a computer at the other end is sufficiently able to respond in a human-like way, it may fool the human into thinking it is another person.

- The Chinese Room Argument:

- It is a thought experiment that was first presented by John Searle in 1980.

- Searle asks us to consider a person who does not speak Chinese sitting in a locked room. Inside the room are boxes filled with Chinese characters. The person cannot read Chinese, so he does not know what the characters mean. However, the person has a book of rules, written in a language he does understand, that allows him to take any message written in Chinese characters and slipped under the door, identify the appropriate response, and slip it back under the door.

- The Three Laws of Robotics (Isaac Asimov):

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Artificial Intelligence and Agents [1]

1.1 What is Artificial Intelligence?

- Artificial intelligence, or AI, is the field that studies the synthesis and analysis of computational agents that act intelligently.

- An agent is something that acts in an environment; it does something.

- An agent is judged solely by how it acts. Agents that have the same effect in the world are equally good.

- A computational agent is an agent whose decisions about its actions can be explained in terms of computation.

- All agents are limited. No agent is omniscient (all knowing) or omnipotent (can do anything).

- The central scientific goal of AI is to understand the principles that make intelligent behavior possible in natural or artificial systems.

- The central engineering goal of AI is the design and synthesis of agents that act intelligently, which leads to useful artifacts.

- AI systems are often in human-in-the-loop mode, where humans and agents work together to solve problems.

- AI is real intelligence created artificially.

- Human society viewed as an agent is arguably the most intelligent agent known.

- Three main sources for human intelligence:

- Biology.

- Culture.

- Lifelong learning.

1.2 A Brief History of Artificial Intelligence

- Hobbes (1588-1679), who has been described as the “Grandfather of AI,” espoused the position that thinking was symbolic reasoning, like talking out loud or working out an answer with pen and paper.

- Babbage (1791-1871) designed the first general-purpose computer, the Analytical Engine.

- Turing Machine and Lambda Calculus are the two most important theoretical models of computation. No one has built a machine that has carried out computation that cannot be computed by a Turing machine.

- Samuel built a checkers program in 1952 and implemented a program that learns to play checkers in the late 1950s.

- McCulloch and Pitts [1943] showed how a simple thresholding “formal neuron” could be the basis for a Turing-complete machine; hence, the first model of a neural network.

- ImageNet is a large database of labelled images; its initial 2009 release contained about 3 million images labelled in over 5,000 categories.

- Later advances included Generative Adversarial Networks (GANs) and transformers, which are the basis of generative AI.

- Machine learning included techniques such as neural networks, decision trees, logistic regression, and gradient-boosted trees.

- Planning, both with deterministic actions and under uncertainty, is a key area of AI research. It is supported by techniques such as Markov Decision Processes (MDPs), dynamic programming, decision-theoretic planning, reinforcement learning, temporal difference learning, and Q-learning.

- AI requires formalizing concepts of causality, ability, and knowledge.

- Probabilities were eschewed in AI, because of the number of parameters required, until the breakthrough of Bayesian networks (belief networks) and graphical models, which exploit conditional independence, and form a basis for modeling causality. Combining first-order logic and probability is the topic of statistical relational AI.

1.3 Agents Situated in Environments

- AI is about practical reasoning: reasoning in order to do something.

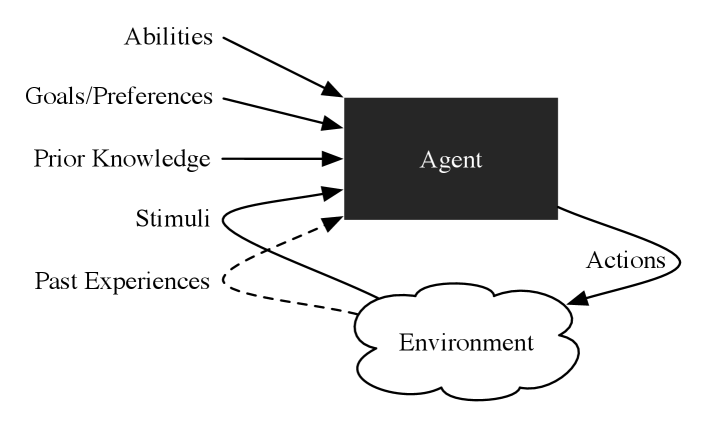

- A coupling of perception, reasoning, and acting comprises an agent.

- An agent acts in an environment.

- An agent’s environment often includes other agents.

- An agent together with its environment is called a world.

- An autonomous agent is one that acts in the world without human intervention.

- A semi-autonomous agent acts with a human-in-the-loop who may provide perceptual information and carry out the task.

- An agent could be a program that acts in a purely computational environment, a software agent, often called a bot.

- Inside the black box, an agent has a belief state that can encode beliefs about its environment, what it has learned, what it is trying to do, and what it intends to do.

- An agent updates this internal state based on stimuli. It uses the belief state and stimuli to decide on its actions.

- Purposive agents have preferences or goals.

- The non-purposive agents are grouped together and called nature.

- The preferences of an agent are often the preferences of the designer of the agent, but sometimes an agent can acquire goals and preferences at run time.
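The cycle described in this section (receive a stimulus, update the belief state, choose an action) can be sketched in a few lines of Python. This is only an illustrative sketch: the `ThermostatAgent` class, its method names, and the one-degree tolerance are hypothetical and do not come from the textbook.

```python
class ThermostatAgent:
    """Hypothetical purposive agent whose goal is to keep a room near a target temperature."""

    def __init__(self, target):
        self.target = target   # preference supplied by the designer
        self.belief = None     # belief state: the last temperature the agent believes in

    def update(self, stimulus):
        # Update the internal belief state from the new stimulus.
        self.belief = stimulus

    def act(self):
        # Decide on an action using only the belief state and the goal.
        if self.belief is None:
            return "wait"
        if self.belief < self.target - 1:
            return "heat"
        if self.belief > self.target + 1:
            return "cool"
        return "idle"


def run(agent, stimuli):
    """Feed a sequence of stimuli to the agent and collect the actions it chooses."""
    actions = []
    for stimulus in stimuli:
        agent.update(stimulus)
        actions.append(agent.act())
    return actions


print(run(ThermostatAgent(target=21), [18.0, 20.5, 23.0]))
# ['heat', 'idle', 'cool']
```

The agent is judged only by the actions it returns; how the belief is stored internally stays inside the black box.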

1.4 Prototypical Applications

- An Autonomous Delivery and Helping Robot.

- A Diagnostic Assistant.

- A Tutoring Agent.

- A Trading Agent.

- A Smart Home.

- Each of these agents has the following:

- Prior knowledge.

- Past experience.

- Preferences.

- Stimuli.

1.5 Agent Design Space

- 10 dimensions of complexity in the design of intelligent agents:

- Modularity.

- Planning Horizon.

- Representation.

- Computational Limits.

- Learning.

- Uncertainty.

- Preferences.

- Number of Agents.

- Interactivity.

- Interaction of the Dimensions.

- These dimensions may be considered separately but must be combined to build an intelligent agent.

- These dimensions define a design space for AI; different points in this space are obtained by varying the values on each dimension.

- 1- Modularity:

- It is the extent to which a system can be decomposed into interacting modules that can be understood separately.

- It is important for reducing complexity and for reusability.

- In the modularity dimension, an agent’s structure is one of the following:

- Flat.

- Modular: system is decomposed into interacting modules.

- Hierarchical: modules are decomposed into submodules with a tree structure.

- 2- Planning Horizon:

- It is how far ahead in time the agent plans.

- The time points considered by an agent when planning are called stages.

- Non-planning agent: an agent that does not consider the future when it decides what to do, or for which time is not involved.

- Finite horizon: the agent plans a fixed, finite number of stages ahead. An agent that looks only one stage ahead is called greedy or myopic.

- Indefinite horizon: the agent plans ahead a finite number of stages, but the number is not predetermined; it depends on the situation.

- Infinite horizon: the agent plans on going on forever. This is often called a process. Example: the stabilization process of a robot (it needs to keep reasoning to maintain balance so that it does not fall).

- Each module may have a different planning horizon.

- 3- Representation:

- It is concerned with how the world is described to the agent.

- The different ways the world could be are called states.

- A state of the world specifies the agent’s internal state (its belief state) and the environment state.

- A state may be described in terms of features, where a feature has a value in each state.

- A proposition is a Boolean feature, which means that its value is either true or false. Thirty propositions can encode 2^30 = 1,073,741,824 states.

- When describing a complex world, the features can depend on relations and individuals.

- An individual is also called a thing, an object, or an entity. A relation on a single individual is a property.

- There is a feature for each possible relationship among the individuals.

- By reasoning in terms of relations and individuals, an agent can reason about whole classes of individuals without ever enumerating the features or propositions, let alone the states.
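The growth in the number of states can be checked directly. The sketch below assumes a world described only by Boolean propositions; the proposition names (`door_open`, `light_on`, `has_coffee`) are hypothetical.

```python
from itertools import product


def count_states(num_propositions):
    # Each proposition is either true or false, so n propositions give 2**n states.
    return 2 ** num_propositions


def enumerate_states(names):
    # Explicitly list every assignment of truth values to the named propositions.
    return [dict(zip(names, values))
            for values in product([False, True], repeat=len(names))]


small_world = enumerate_states(["door_open", "light_on", "has_coffee"])
print(len(small_world))    # 8
print(count_states(30))    # 1073741824
```

Enumerating states is only practical for a handful of propositions, which is why reasoning in terms of features, relations, and individuals matters.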

- 4- Computational Limits:

- Often there are computational resource limits that prevent an agent from carrying out the best action.

- The computational limits dimension determines whether an agent has:

- Perfect rationality: the agent reasons about the best action without taking into account its computational limits.

- Bounded rationality: the agent decides on the best action that it can find given its computational limits.

- Computational resource limits include computation time, memory, and numerical accuracy caused by computers not representing real numbers exactly.

- An anytime algorithm is an algorithm where the solution quality improves with time; it can produce its current best solution at any time, but given more time it could produce even better solutions.
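As a small illustration of an anytime algorithm, the sketch below estimates a square root by bisection and can be interrupted after any number of steps. The function names and the choice of bisection are assumptions made for this example; the individual estimates are not guaranteed to improve at every single step, but the interval containing the true answer halves each time.

```python
def anytime_sqrt(x, lo=0.0, hi=None):
    """Yield a stream of estimates of sqrt(x) for x >= 0, using bisection."""
    hi = max(1.0, x) if hi is None else hi
    while True:
        mid = (lo + hi) / 2
        yield mid              # current best answer, available at any time
        if mid * mid < x:
            lo = mid           # the root lies in the upper half
        else:
            hi = mid           # the root lies in the lower half


def run_with_budget(x, steps):
    """Interrupt the anytime computation after a fixed number of steps."""
    estimate = None
    for _, estimate in zip(range(steps), anytime_sqrt(x)):
        pass
    return estimate


rough = run_with_budget(2.0, 5)
fine = run_with_budget(2.0, 40)
print(rough, fine)
```

An agent with bounded rationality could call `run_with_budget` with whatever step budget it can afford and act on the estimate it gets back.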

- 5- Learning:

- The learning dimension determines whether:

- Knowledge is given to the agent.

- Knowledge is learned: from prior knowledge or past experience.


- 6- Uncertainty:

- An agent could assume there is no uncertainty, or it could take uncertainty in the domain into consideration.

- Uncertainty is divided into two dimensions:

- Uncertainty from sensing.

- Uncertainty about the effects of actions.

- The sensing uncertainty dimension concerns whether the agent can determine the state from the stimuli:

- Fully observable: the agent knows the state of the world from the stimuli.

- Partially observable: the agent does not directly observe the state of the world. This occurs when many possible states can result in the same stimuli or when stimuli are misleading.

- The dynamics in the effect uncertainty dimension can be:

- Deterministic: the resulting state of the world after an action is determined by the action and the prior state.

- Stochastic: there is only a probability distribution over the resulting states. E.g., when a teacher explains a concept to a student, there is some probability that the student understands the concept and some probability that the student does not.
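The difference between the two kinds of dynamics can be sketched with the teaching example. Here the state is simply the number of concepts the student has understood; the function names and the probability `p_understand=0.7` are hypothetical values picked for illustration.

```python
import random


def deterministic_step(state, action):
    # The next state is fully determined by the prior state and the action.
    return state + 1 if action == "explain" else state


def stochastic_step(state, action, p_understand=0.7, rng=random):
    # The action only induces a probability distribution over next states.
    if action == "explain" and rng.random() < p_understand:
        return state + 1
    return state


rng = random.Random(0)   # seeded so that the run is repeatable
outcomes = [stochastic_step(0, "explain", rng=rng) for _ in range(10_000)]
print(deterministic_step(0, "explain"))   # always 1
print(sum(outcomes) / len(outcomes))      # close to 0.7, but not exactly
```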

- 7- Preferences:

- Agents normally act to have better outcomes. The only reason to choose one action over another is because the preferred action leads to more desirable outcomes.

- The preference dimension considers whether the agent has goals or richer preferences:

- A goal:

- Achievement goal: a proposition to be true in the final state. E.g., a robot must reach a goal location.

- Maintenance goal: a proposition to be true in all states. E.g., a robot must maintain balance.

- Complex preferences: involve trade-offs among the desirability of various outcomes, perhaps at different times.

- Ordinal preference: the agent can rank outcomes in order of preference (most important, second most important, etc.). E.g., a robot must reach a goal location, but it prefers not using too much energy.

- Cardinal preference: the agent can assign a numerical value to each outcome, and the magnitude of the values matters. E.g., a robot must reach a goal location A, but it should go to another location B if the traveling time to A is longer than a certain threshold.
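One way to see the difference between ordinal and cardinal preferences is to compare a ranking with numerical values. The sketch below is an assumption-laden illustration: the outcome names and utility numbers are invented, and using expected utility to compare uncertain options is a standard technique that these notes do not cover in this unit.

```python
# Ordinal preference: only the order of the outcomes is known (best first).
ranking = ["arrive_fast", "arrive_slow", "fail"]


def prefers_ordinal(a, b):
    # True if outcome a is ranked above outcome b.
    return ranking.index(a) < ranking.index(b)


# Cardinal preference: each outcome has a number, and the magnitudes matter.
utility = {"arrive_fast": 10.0, "arrive_slow": 8.0, "fail": 0.0}


def expected_utility(lottery):
    # lottery maps each possible outcome to its probability.
    return sum(p * utility[outcome] for outcome, p in lottery.items())


risky_route = {"arrive_fast": 0.7, "fail": 0.3}
safe_route = {"arrive_slow": 1.0}

print(prefers_ordinal("arrive_fast", "arrive_slow"))                   # True
print(expected_utility(safe_route) > expected_utility(risky_route))    # True
```

The ranking alone says that arriving fast beats arriving slowly, but it cannot say whether a 30% risk of failure is worth it; the numerical values can.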

- 8- Number of Agents:

- The number of agents dimension determines whether the agent is the only agent in the world or whether there are other agents.

- The other agents could be:

- Nature: non-purposive agents.

- Other agents: purposive agents.

- Other purposive agents could be:

- Cooperative: they have the same goal.

- Competitive: they have conflicting goals.

- Neutral: they have no effect on the agent.

- With multiple agents, it is often optimal to act randomly because other agents can exploit deterministic strategies.

- The number of agents dimension considers whether the agent explicitly considers other agents:

- Single agent: the agent considers all other agents as part of nature; hence, they are non-purposive and will not change their behavior based on the agent’s actions.

- Adversarial reasoning: the agent considers exactly one other agent, which is purposive and competitive. This setting is a two-player zero-sum game, where one agent wins and the other loses.

- Multiple agents: the agent considers other agents as purposive and cooperative or competitive.
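The claim that acting randomly can be optimal against other agents can be illustrated with rock-paper-scissors, used here as an assumed example of a two-player zero-sum game. The helper names are hypothetical. The sketch computes the expected payoff of a strategy against an opponent who knows the strategy and picks the best reply.

```python
MOVES = ["rock", "paper", "scissors"]
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def payoff(mine, theirs):
    # +1 for a win, -1 for a loss, 0 for a draw.
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def worst_case_value(strategy):
    """Expected payoff of a mixed strategy against the opponent's best reply."""
    return min(
        sum(p * payoff(move, reply) for move, p in strategy.items())
        for reply in MOVES
    )


always_rock = {"rock": 1.0}                  # deterministic strategy
uniform = {move: 1 / 3 for move in MOVES}    # randomized strategy

print(worst_case_value(always_rock))   # -1.0: the opponent always plays paper
print(worst_case_value(uniform))       # 0.0: no reply does better than a draw on average
```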

- 9- Interactivity:

- In deciding what an agent will do, there are three aspects of computation that must be distinguished:

- Design-time computation: computation done before the agent starts acting.

- Computation before action: computation done before each action.

- Computation during action: computation done while the agent is acting.

- The interactivity dimension considers whether the agent does:

- Offline reasoning: the agent does all its reasoning before it starts acting, and actions are usually precomputed and stored (e.g., in an action table that can be used to look up actions).

- Online reasoning: the agent reasons before each action; that is, the agent observes the world, reasons, and then acts.
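The two modes can be contrasted in a toy setting where an agent moves along a line of numbered cells toward a goal cell. This is a minimal sketch; the function names and the one-dimensional world are hypothetical.

```python
def best_action(state, goal):
    # The reasoning step: move one cell toward the goal on a line.
    if state < goal:
        return "right"
    if state > goal:
        return "left"
    return "stay"


def build_policy_table(states, goal):
    # Offline reasoning: compute the action for every state before acting.
    return {state: best_action(state, goal) for state in states}


def act_offline(table, state):
    # While acting, the agent only looks up the precomputed action.
    return table[state]


def act_online(state, goal):
    # Online reasoning: the agent reasons between observing and acting.
    return best_action(state, goal)


table = build_policy_table(range(5), goal=3)
print(table)
print(act_offline(table, 1), act_online(1, goal=3))
```

Both agents choose the same actions here; they differ in when the computation happens, which matters when the state space is too large to tabulate or when the goal is only known at run time.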


- 10- Interaction of the Dimensions:

- The simplest case for the robot is a flat system, represented in terms of states, with no uncertainty, with achievement goals, with no other agents, with given knowledge, and with perfect rationality.

- In this case, with an indefinite stage planning horizon, the problem of deciding what to do is reduced to the problem of finding a path in a graph of states.
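In this simplest case, deciding what to do amounts to graph search. The sketch below uses breadth-first search, which is one of several possible search methods, over a small hypothetical map of rooms; the room names and connections are invented for the example.

```python
from collections import deque


def find_path(graph, start, goal):
    """Breadth-first search: return a path with the fewest steps, or None."""
    frontier = deque([[start]])   # paths waiting to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        state = path[-1]
        if state == goal:
            return path
        for next_state in graph.get(state, []):
            if next_state not in visited:
                visited.add(next_state)
                frontier.append(path + [next_state])
    return None   # the goal cannot be reached from the start state


# Hypothetical state graph: each state maps to the states reachable in one action.
rooms = {
    "lab": ["hall"],
    "hall": ["office", "kitchen"],
    "office": ["mailroom"],
    "kitchen": ["mailroom"],
    "mailroom": [],
}

print(find_path(rooms, "lab", "mailroom"))
# ['lab', 'hall', 'office', 'mailroom']
```

The number of stages in the returned path is not fixed in advance, which matches the indefinite planning horizon.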


| Dimension | Values |
|---|---|
| Modularity | Flat, Modular, Hierarchical. |
| Planning Horizon | Non-planning, Finite, Indefinite, Infinite stages. |
| Representation | Features, Propositions, Relations, Individuals. |
| Computational Limits | Perfect, Bounded rationality. |
| Learning | Given, Learned. |
| Sensing Uncertainty | Fully observable, Partially observable. |
| Effect Uncertainty | Deterministic, Stochastic. |
| Preferences | Goals, Ordinal, Cardinal. |
| Number of Agents | Single, Adversarial, Multiple. |
| Interactivity | Offline, Online. |

1.6 Designing Agents

- Artificial agents are designed for particular tasks.

- Researchers have not yet got to the stage of designing an intelligent agent for the task of surviving and reproducing in a complex natural environment.

- Two types of agents:

- Highly specialized agent that works well in the environment for which it was designed, but is helpless outside of this niche. E.g., a car-painting robot that only paints a specific part of a specific car model.

- Flexible agent that can survive in arbitrary environments and accept new tasks at run time. This is not feasible yet, as humans are the only known agents that can do this.

- Two broad strategies have been pursued in building agents:

- Simplify the environment and build complex reasoning systems that work well in the simplified environment.

- E.g., a general-purpose factory robot, that can do many tasks in the factory environment.

- We started by simplifying the factory environment, and then built the robot.

- Build simple agents in natural environments.

- E.g., a robot insect that can survive in a natural environment.

- It is a simple agent with one specific goal: to survive.


- Much AI reasoning involves searching through the space of possibilities to determine how to complete a task.

- In AI, knowledge is a long-term representation of a domain, whereas belief is about the immediate environment, for example where the agent is and where other objects are.

- In philosophy, knowledge is usually defined as justified true belief, but in AI the term is used more generally to be any relatively stable information, as opposed to belief, which is more transitory information.

- The reason for this terminology is that it is difficult for an agent to determine truth, and “justified” is subjective.

Retrospect and Prospect [2]

Unit 1: Lecture 1 [3]

- An agent acts intelligently if:

- Its actions are appropriate for its goals and its environment.

- It is flexible to changing environments and goals.

- It learns from experience.

- It makes appropriate choices given its computational and perceptual limitations.

Unit 1: Lecture 2 [4]

- Turing test:

- Human A interacts with two entities, B and C, one of which is a computer.

- Human A does not know which is which, and can only communicate with them via a teletype.

- If human A cannot tell which is the computer, then the computer passes the test and is considered intelligent.

- The Chinese Room Argument:

- A person who does not speak Chinese is in a room with boxes of Chinese characters.

- The person has a book that tells him how to respond to each message.

- The person can respond to messages in Chinese, but he does not understand Chinese.

- The person is like a computer, which can process symbols but does not understand them.

- The argument is a response to the Turing test: it aims to show that a computer could pass the Turing test without understanding anything.

- Hence, intelligence is not just about processing symbols, but also about understanding them.

The incredible inventions of intuitive AI [5]

References

1. Poole, D. L., & Mackworth, A. K. (2017). Artificial Intelligence: Foundations of computational agents. Cambridge University Press. Chapter 1 - Artificial Intelligence and Agents. https://artint.info/3e/html/ArtInt3e.Ch1.html

2. Poole, D. L., & Mackworth, A. K. (2017). Artificial Intelligence: Foundations of computational agents. Cambridge University Press. Chapter 19 - Retrospect and Prospect. https://artint.info/3e/html/ArtInt3e.Ch19.html

3. Taipala, D. (2014, September 2). CS 4408 Artificial Intelligence Unit 1 Lecture 1 [Video]. YouTube.

4. Taipala, D. (2014, September 2). CS 4408 Artificial Intelligence Unit 1 Lecture 2 [Video]. YouTube.

5. Conti, M. (2016, April). The incredible inventions of intuitive AI [Video]. TEDxPortland. https://www.ted.com/talks/maurice_conti_the_incredible_inventions_of_intuitive_ai

6. Learning Guide Unit 1: Introduction | Home. (2025). Uopeople.edu. https://my.uopeople.edu/mod/book/view.php?id=454687&chapterid=555017