4. Scoring, Term Weighting, and the Vector Space Model¶

Introduction 1¶

Scoring, Term Weighting, and the Vector Space Model 2¶

6.1 Parametric and zone indexes¶

- Parametric and zone indexes functions:

- Allow index to retrieve documents by metadata such as language, author, date, etc.

- Simple scoring for retried documents.

- The metadata of a document contains fields and zones.

- Fields have limited possible values. E.g. language, author, date of creation, etc.

- zones values are free text. E.g. title, abstract, etc.

-

There is one parametric index per field/zone of metadata.

-

6.1.1 Weighted zone scoring:

- Weighted zone scoring is sometimes referred to as ranked Boolean retrieval.

- Accumulators: an array of scores, one per document.

- 6.1.2 Learning Weights:

- Machine-learned relevance may be used to compute weights.

6.2 Term frequency and weighting¶

| symbol | meaning |

|---|---|

| N | total number of documents in the collection |

| df(t) | document frequency of term t |

| tf(t, d) | term frequency of term t in document d |

| idf(t) | inverse document frequency of term t |

| cf(t) | collection frequency of term t |

- Bag of words:

- A document is represented as a bag of words.

- The order of the words is not important.

- The number of times a word appears in the document is important.

- Collection frequency: The number of occurrences of a term in the collection.

- Document frequency: The number of documents in the collection that contain the term.

- 6.2.1 Inverse document frequency:

- Computed as

idf(t) = log(N/df(t))whereNis the number of documents in the collection, anddf(t)is the document frequency of termt. - The

idfof rare terms is high.

- Computed as

- 6.2.2

tf-idfweighting:- Computed as

tf-idf(t, d) = tf(t, d) * idf(t). - Highest when

toccurs many times within a small number of documents. - Lowest when

toccurs in many documents; or occurs very few times in a few documents. - Lowest when

toccurs in all documents.

- Computed as

- Each document is a vector with one component per term.

- Overlap Score measure:

- Computed as

score(q, d) = sum(tf-idf(t, d)) for all t in q; wheretis a term in the queryq.

- Computed as

6.3 The vector space model for scoring¶

- Vector space model:

- Each document is a vector, thus, the collection is a set of document vectors.

- Each query is a vector.

- The score of a document is the cosine of the angle between the document vector and the query vector.

- 6.3.1 Dot products:

- Used to compute the similarity between two vectors (two documents, or document and query).

- Computed as

sim(d1, d2) = ( V(d1) . V(d2) ) / ( |V(d1)| |V(d2)| ); where:V(d)is the vector representation of documentd..indicates the Dot product between two vectors.|V(d)|is the Euclidean length of vectorV(d).

- Dot product:

- Computed as

V(d1) . V(d2) = sum(V(d1)[i] * V(d2)[i]) for all i. V(d1)[i]is theith component of vectorV(d1).

- Computed as

- Euclidean length:

- Computed as

|V(d)| = sqrt(sum(V(d)[i]^2) for all i).

- Computed as

- The dot product equals the cosine of the angle between the two vectors.

- Term document matrix:

- Each column is a document vector.

- Each row represents a term vector.

- Thus, the matrix joins document vectors and term vectors.

- 6.3.3 Computing vector scores

- We store the following with Each Posing entry:

docID: The document ID.termID: The term ID.tf-idf: Thetf-idfweight of the term in the document.tf: The term frequency of the term in the document.

- We store the following with Each Posing entry:

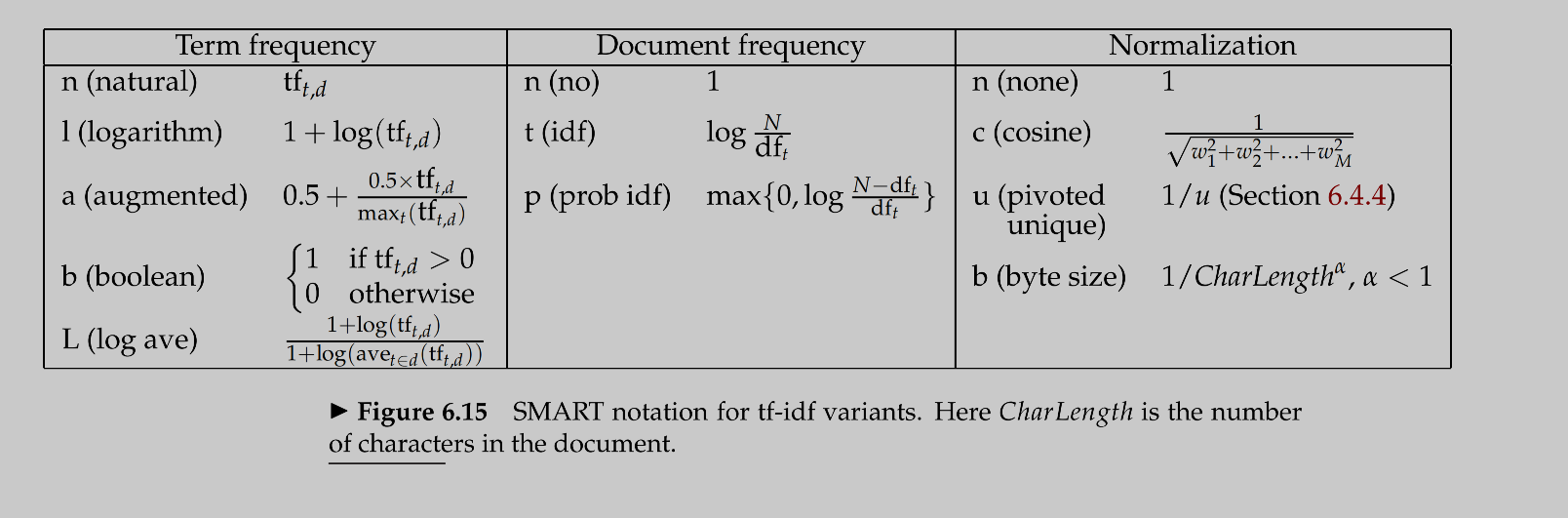

6.4 Variant tf-idf functions¶

- 6.4.1 Sublinear tf scaling:

- Uses the log of the term frequency instead of the term frequency.

- 6.4.2 Maximum

tfnormalization- Normalizes the term frequency by the maximum term frequency in the document.

- Computed as

tf(t, d) = 0.5 + 0.5 * tf(t, d) / max(tf(t', d) for all t').

- 6.4.3 Document and query weighting schemes:

SMARTnotation is used to describe the weighting schemes.

- 6.4.4 Pivoted normalized document length:

- Normalizes the document length by the average document length.

References¶

-

UoPeople (2020) CS3308: Information Retrieval. Unit 4: Introduction & Video lectures. https://my.uopeople.edu/course/view.php?id=7253 ↩

-

Manning, C.D., Raghaven, P., & Schütze, H. (2009). An Introduction to Information Retrieval (Online ed.). Cambridge, MA: Cambridge University Press. https://nlp.stanford.edu/IR-book/pdf/05comp.pdf. Chapter 6: Scoring, Term Weighting, and the Vector Space Model. ↩

-

Oresoft LWC. (2011). WDM 57: Ranked Retrieval Model [YouTube Video]. In YouTube. https://www.youtube.com/watch?v=CTwqDj5gtLo&list=PL0174E49C0E0DD5C8&index=57 ↩