1. Introduction to IR, Boolean Retrieval, and Terms and Postings¶

- The field of information retrieval (IR) is concerned with the structure, analysis, organization, storage, searching, and retrieval of information.

- IR started with scientific publications and library records, but then moved to other professions such as journalism, law, and medicine, and now most recently to the web.

Boolean Retrieval 1¶

- Information retrieval (IR) is finding material (usually documents) of an unstructured nature (usually text) that satisfies an information need from within large collections (usually stored on computers).

- IR is overtaking the traditional DB-style retrieval of structured data, where Keys (primary, foreign, etc) are required to retrieve data.

- Unstructured data is a broad term as the opposite of structured relational canonical data. It means data that do not have semantic structure that is easy for a computer to understand.

- There are no data that are 100% unstructured.

- IR functions:

- Retrieve unstructured or semi-structured data, usually text.

- Clustering data into groups of similar items.

- Types of search (according to scale from large to small):

- Web search: search the web. Very large scale that requires distributed systems and handles billions of documents and queries.

- Enterprise search: search within an organization. Usually domain-specific where data is usually structured and contained within centralized databases with people working on maintaining the data structure.

- Personal search: Desktop search (Spotlight, Windows Instant Search, email search, etc). Unstructured with different file formats and data types.

- Grepping:

- Is a linear scan through the entire document(s) to find matches.

- Named after the unix

grepcommand. - Allows for regular expressions and wildcards searching.

- Very effective for small collections.

- It lacks:

- Scalability: it is not feasible to grep a large collection.

- Flexible matching: E.g.

grep Car NEAR Crash, where NEAR is a proximity operator that may mean within 5 words or within the same sentence. - Ranked Retrieval: Grep does not rank the results and only returns the exact matches.

- Index: Pre-computed data structure that allows for fast searching and avoiding the linear scan.

- Incidence matrix: A matrix that has a row for each document and a column for each term. The value of each cell is 1 if the term is present in the document, and 0 otherwise.

- Term: A word or a phrase that is used in the index or the query.

- Boolean retrieval model: A retrieval model that allows for queries that are Boolean expressions of terms, where the Boolean operators are AND, OR, and NOT.

- Document is a set of terms, that is chosen by the retriever on things like chapters, pages, topics, etc.

- Document collection is a set of documents that represents the entire data that is being searched. sometimes called a corpus.

- Information need: The information that the user is looking for (the topic).

- Query: The user’s request for information (the search terms).

- Relevance: The degree to which a document meets the user’s information need.

- Effectiveness: The quality of the results returned by the IR system.

- Precision: The fraction of retrieved documents that are relevant to the query (

relevant retrieved / retrieved). - Recall: The fraction of relevant documents that are retrieved by the query (

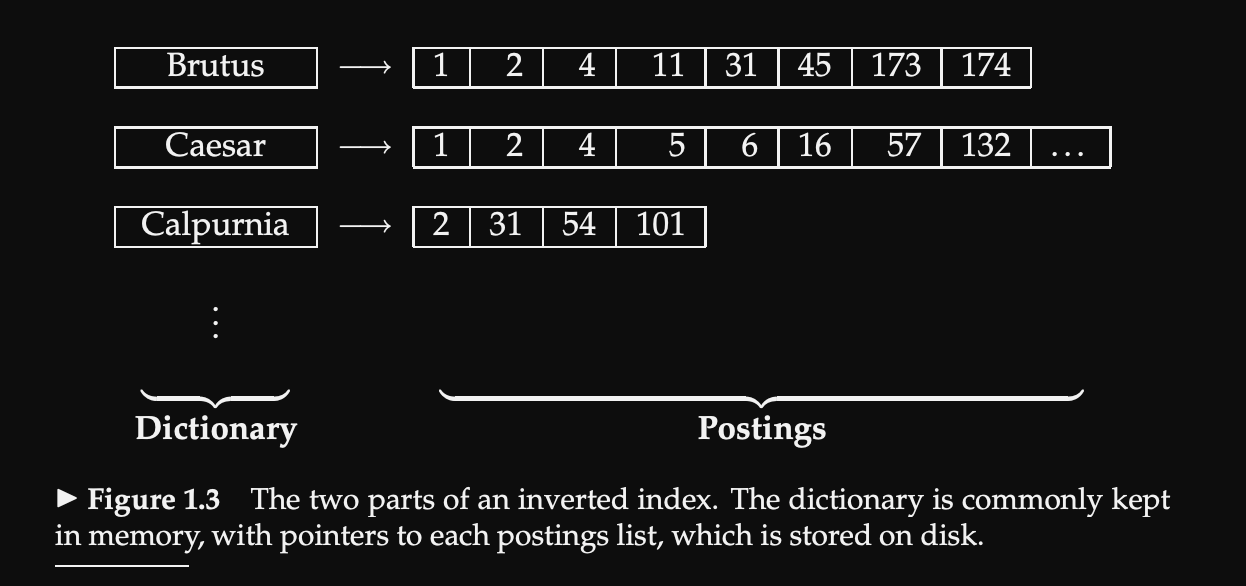

relevant retrieved / relevant total(not retrieved yet due to pagination for example)). - Inverted index: A data structure that tracks which documents contain which terms and ignores the documents that do not contain the term; thus the size of the generated index is much smaller than the forward index (term-document incidence matrix).

- Vocabulary: The set all terms in the entire collection, also called Lexicon.

- Postings List:

- The list of documents that contain a term (in the inverted index of terms and the documents they belong to).

- Posting is a document identifier (docID) and some additional information about the term occurrence in the document (e.g. term frequency, position, etc).

- Each occurrence of the term is stored as a posting and the list of all posting lists is called the postings for all terms in the vocabulary.

- Document frequency (df): The number of documents that contain each term. It is an important statistic for the model performance and response time.

- Ranked Retrieval models:

- Contrasts with Boolean retrieval models.

- E.g. Vector Space Model.

- Uses free text queries (unstructured queries) instead of the precise language of Boolean queries.

- Proximity Operator: An operator that allows for searching for terms that are close to each other. E.g.

Car NEAR Crashwhere NEAR is a proximity operator that may mean within 5 words or within the same sentence. - Using And operator => High precision, low recall.

- Using Or operator => Low precision, high recall.

Inverted Index¶

- Inverted index: A data structure that tracks which documents contain which terms and ignores the documents that do not contain the term; thus the size of the generated index is much smaller than the forward index (term-document incidence matrix).

- Steps to build inverted index:

- Collect the documents to be indexed.

- Tokenize the text of the documents into tokens (terms).

- Do linguistic preprocessing to the tokens (e.g. stemming, lemmatization, etc) to reduce the number of terms and generate normalized terms.

- Index the normalized terms with pointers to the documents that contain them.

- Usually, The dictionary is stored in memory and the postings are stored on disk.

- Data structures used for holding the postings:

- Linked Lists: cheap insertion (common in crawling where the postings are edited frequently).

- Skip Lists.

- Fixed-size arrays: waste of space.

- Dynamic arrays (variable-size arrays): no overhead for pointers. Fast access due to locality of reference.

- Hybrid Schema: Linked lists of fixed-size arrays.

Terms and Postings 2¶

- Tokenization: is the process of chopping up character streams into tokens.

- Linguistic preprocessing: is the process applies on the tokens to generate a set of terms that are indexed.

2.1 Document delineation and character sequence decoding¶

- 2.1.1 Obtaining the character sequence:

- Documents are bytes in a file or web server.

- First step is to convert these bytes into character sequences, that is, English text in ASCII encoding.

- The bytes stored in the file are encoding using a character encoding scheme (e.g. ASCII, UTF-8, etc).

- To get the character sequence, the bytes are decoded according to the encoding scheme (stored in the file metadata).

- Some files, then may be further decoded to get the final character sequence (e.g. PDF, html, xml files, etc).

- Encoding/decoding some languages are more complex than others (e.g. Arabic, Chinese, etc).

- 2.1.2 Choosing a document unit:

- The document unit is the unit that is indexed, one file? one paragraph? one sentence? one word? one section in a book? etc.

- Indexing granularity: The size of the document unit. large granularity => small document unit. small granularity => large document unit.

- Usually IR systems store multiple indexes with different document units and granularity, and then routes to the right index based on the query (e.g. For a book, can be indexed by chapter, or page, etc).

2.2 Determining the vocabulary of terms¶

- 2.2.1 Tokenization:

- The process of chopping up character streams into tokens (terms or words).

- Token: A sequence of characters that form a unit of information (e.g. a word, a phrase, a number, etc).

- Type: A class of tokens that are considered equivalent for indexing purposes (e.g.

carandcarsare different tokens but are the same type). - Term: A normalized type that is included in an IR system’s index.

- The sentence:

to sleep perchance to dreamHas:- 5 tokens:

to,sleep,perchance,to,dream. - 4 types:

to,sleep,perchance,dream(tois repeated, thus, considered only once). - 3 terms:

perchance,sleep,dream(tois ignored as a stop word).

- 5 tokens:

- Using the same tokenizer that used when building the index to process to tokenize quires against that index may result in better results.

- Language identification: The process of determining the language of the document to use the right tokenizer.

- Hyphens: hyphens are complex (e.g.

lowercase,lower case,lower-case, should ), and models can not handle all cases. - Compound names:, in some languages, compound names are written as one word (e.g. German:

ComputerlingustikforComputer linguistic), and in others, they are written as separate words or hyphens (e.g.New York,New-York,NewYork,NewYorkCity, etc). - Compound splitter: A tool that splits compound names correctly into correct tokens.

- Word segmentation: The process of splitting a sentence into words (e.g. Chinese, Japanese, etc), as some languages do not have spaces between words.

- 2.2.2 Dropping common terms: stop words:

- List of stop words: a, an, and, are, as, at, be, by, for, from, has, he, in, is, it, its, of, on, that, the, to, was, were, will, with.

- Collection frequency: The number of times a term occurs in the entire collection.

- Stop words are usually the most frequent terms in the collection, and they are usually dropped from the index.

- Stop words are not dropped for phrase queries and phrase indexing.

- So for most modern IR systems, the additional cost of including stop words is not that big – neither in terms of index size nor in terms of query processing time.

- 2.2.3 Normalization (equivalence classing of terms):

- Token Normalization:

- is the process of canonicalizing tokens so that matches occur despite superficial differences in the character sequences of the tokens.

- generate more possible types for the same token, these types may be used by users: e.g. type

USA, we may generate another typeU.S.Athat means the same thing.

- Equivalence classes:

- A set of terms that are considered equivalent for the purposes of information retrieval.

- The list can be extended to include list of synonyms (e.g.

carandautomobile), acronyms (e.g.USAandU.S.A), hyphened words (e.g.New-York,New York,NewYork), etc. - The list can be extended to use phonetic equivalence (e.g.

carandkar), or spelling correction (e.g.carandcaar). The Soundex algorithm is used for phonetic equivalence.

- Accents and Diacritics:

- In English, accents are usually removed during normalization (e.g.

caféandcafeare considered the same). - In other languages, accents can not be ignored, however, most users do not use accents when searching; thus, the system should be able to handle both cases.

- In English, accents are usually removed during normalization (e.g.

- Capitalization/ case folding:

- Case folding: The process of converting all characters to lowercase.

- In English, case folding is done on some words, while other words is kept as is (so,

CATas abbreviation forComputer Aided Translationdiffers fromcatas an animal) or (Blackas a name differs fromblackas a color). - All uppercase words at the beginning of a sentence are usually converted to lowercase (e.g.

TheinThe cat is black) while any uppercase word in the middle of a sentence is kept as is (e.g.BlackinThe cat owner was Black). - True casing: using a machine learning model to decide which words to be case folded and which to be kept as is.

- Stemming and lemmatization:

- The goal of both stemming and lemmatization is to reduce inflectional forms and sometimes derivationally related forms of a word to a common base form. e.g.

am, are, is=>be.car, cars, car's, cars'=>car. - Stemming: A crude heuristic process that chops off the ends of words in the hope of achieving this goal correctly most of the time, and often includes the removal of derivational affixes. E.g. (

improve, improving, improved, improving, improver) =>improv(the stem, includes all letters commons in all forms of the word, but it does not have to be a grammatically valid word). - Lemmatization: A more sophisticated process that uses a vocabulary and morphological analysis of words, normally aiming to remove inflectional endings only and to return the base or dictionary form of a word, which is known as the lemma. E.g. (

am, are, is) =>be(the lemma, a grammatically valid word). - E.g.

see, saw, seen, seeing=> Stemming:s(only shared letters), Lemmatization:see(according to dictionary). - Porter Stemmer:

- A popular stemming algorithm that is used in many IR systems.

- 5 phases of word reduction, each phase consists of a set of rules that are applied sequentially to the word.

- Other stemming algorithms: Lovins Stemmer (one pass), Paice/Husk Stemmer.

- Stemming increases recall while harming precision.

- Both stemming and lemmatization do not improve retrieval performance, and may harm it.

- The goal of both stemming and lemmatization is to reduce inflectional forms and sometimes derivationally related forms of a word to a common base form. e.g.

- Token Normalization:

2.3 Faster postings list intersection via skip pointer¶

- Postings list: in the inverted index of terms and the documents they belong to, that is, a hashmap of linked lists of Postings.

HashMap<term, LinkedList<Posting>>. - When a query with multiple terms is submitted, the system needs to find the documents that contain all the terms in the query in

O(n1 + n2, ... + nk)wheren1, n2, ..., nkis the length of the PostingList for term 1, 2, …, k respectively, as we need to linearly scan all the PostingLists to find the documents that contain all the terms. - Skip pointers:

- A pointer within the

LinkedList<Posting>that points to points to another Posting in the list that iskpositions away, wherekis a constant, and it is guaranteed that there is no search results between the current Posting and the Posting pointed to by the skip pointer. - It is useful as we do not need to traverse (scan) every item in the PostingList, but we can skip some items.

- A pointer within the

- Where to place skip pointers?:

- More skips means shorter skip spans, and that we are more likely to skip. But it also means lots of comparisons to skip pointers, and lots of space storing skip pointers.

- Fewer skips means few pointer comparisons, but then long skip spans which means that there will be fewer opportunities to skip.

- The optimal number is to use \(\sqrt{n}\) skip pointers, where

nis the length of the PostingList. - Evenly spaced skip pointers are better than randomly spaced ones or ones that depend on the distribution of the terms in the PostingList.

- Quick updates to the PostingList renders the skip pointers useless, as they need to be updated as well.

2.4 Positional and Phrase Queries¶

- Phrase Query: A query that consists of multiple terms that appear in the same order in the document, or a literal search query, that is, the occurrence of all terms in the query in a different order is not considered a match. E.g.

Car Crashis a phrase query that matchesCar Crashbut notCrash Car. - Answering phrase queries requires more than just a simple inverted index with simple postings lists.

- Both

biword indexandpositional indexare extensions to the inverted index that allow for answering phrase queries without harming the main functionality of the inverted index to answer Boolean queries, and with good precision, recall, and response time. - Lateral phrase queries are usually surrounded by quotation marks (“”), but a good percentage of user queries are implicitly phrase queries, that is, the quotes are omitted, but the query is a legitimate phrase query; thus, the system need to handle both explicit and implicit phrase queries.

Biword Index¶

- Considers every two consecutive words in the document as a phrase.

- Example, the query

Car Crashed Intowill be converted toCar CrashedandCrashed Intoand the results of both queries will be combined. - There are some possibilities of false positive, that is, the response will contain irrelevant documents, but users usually understand that and will refine their query.

- The query terms are tokenized and tagged with their position in the document, then some parts of the query are removed to generate the biword queries (e.g.

and,of the, etc) with special focus on nouns, proper nouns and function words. - A

Phrase indexis generated that maps each biword to the documents that contains it, sometimes the concept is generated ton-word indexwhere n is the number of words in the phrase, but it is usually limited to 2 or 3 words.

2.4.2 Positional indexes¶

- It is a better solution than biword indexes.

- The PostingList for each term is extended to include the position(s) of the term in the document.

- When issuing a phrase query, the system finds the documents that contain all the terms in the query, then it checks if the terms appear in the same order in the document (that is,

term1.position + 1 = term2.position), and if so, the document is considered a match to the phrase query. - Example: searching for

Car Crash, will still search forCarandCrashseparately, but then the positions returned are considered, so ifCarappears in position 1 andCrashappears in position 2, then the document is considered a match, otherwise, it is not. - The same general method is applied for within k word proximity searches: E.g

employee /3 place, where/3means within 3 words, so the system will search foremployeeandplaceseparately, then check if they appear within 3 words of each other (on either side). - The asymptotic complexity of positional index intersection operation is now

O(T)where T is total number of tokens in the collection (as opposed toO(n1 + n2, ... + nk)for the simple inverted index). - Next Word Index: A positional index that is used to speed up the phrase query search by indexing the next word after each occurrence of a term in the document. E.g.

Car=>Crash,Crash=>Into, etc.

References¶

-

Manning, C.D., Raghaven, P., & Schütze, H. (2009). An Introduction to Information Retrieval (Online ed.). Cambridge, MA: Cambridge University Press. Available at https://nlp.stanford.edu/IR-book/pdf/01bool.pdf Chapter 1: Boolean Retrieval. ↩

-

Manning, C.D., Raghaven, P., & Schütze, H. (2009). An Introduction to Information Retrieval (Online ed.). Cambridge, MA: Cambridge University Press. Available at https://nlp.stanford.edu/IR-book/pdf/02voc.pdf Chapter 2: Terms and Postings. ↩