1. Introduction to Graphics¶

Introduction 1¶

- We need to recognize the role of graphics APIs and their interaction with Graphics hardware.

- Graphics processing is numerically intense.

- Graphics geometry is based upon the manipulation of points and polygons.

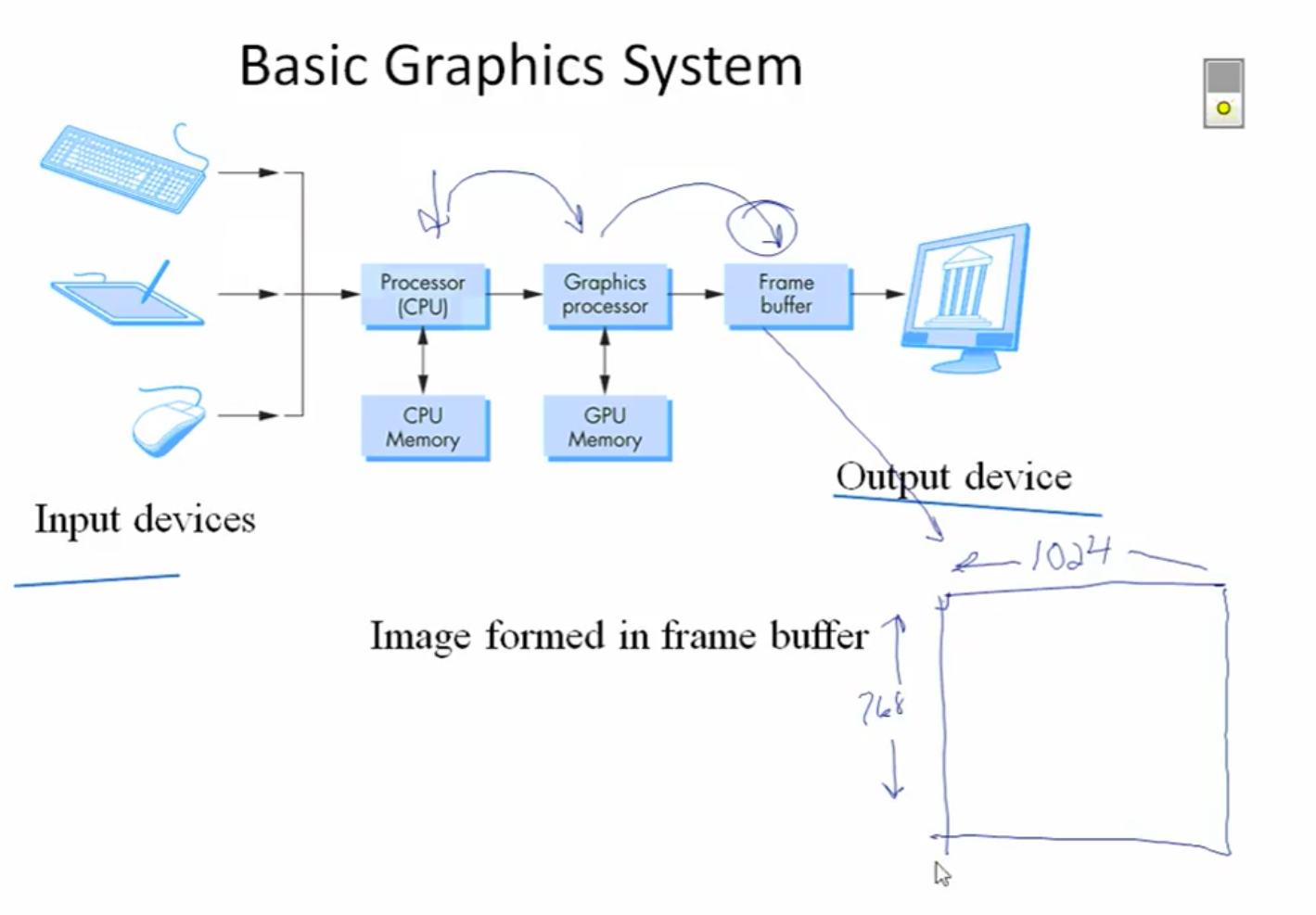

- Rendering requires expensive processing. So, computer architectures have been developed with specialized processing capabilities that allow this processing to be offloaded from the computer’s CPU to be processed by this specialized GPU (Graphics Processing Unit) or VPU (Visual Processing Unit).

- OpenGL is a cross-platform API for rendering 2D and 3D graphics. It is a low-level API that provides a set of functions that allow the programmer to interact with the GPU.

- WebGL is a JavaScript API, based on OpenGL ES, that renders 2D and 3D graphics in the browser.

- Clipping is the process of limiting the visible world to what the viewing surface (window) can display.

- Frustum culling is the process of removing objects that are outside the viewing frustum.

- Objects in the modeled world appear smaller the farther the camera is from them (for example, the higher the camera is above the scene).

Chapter 1: Introduction to Computer Graphics 2¶

1.1 Painting and Drawing¶

- RGB scale:

- 24-bit color = 3 * 8-bit color = RGB (red, green, blue).


- Most screens these days use 24-bit color, where a color can be specified by three 8-bit numbers, giving the levels of red, green, and blue in the color.

- Gray scale: each pixel is represented by a single number, the intensity of the gray on a black-to-white scale. With 8 bits per pixel, 256 levels of gray are possible.

- Indexed Colors:

- Used in old screens, where only a limited number of colors can be displayed, usually 16 or 256.

- The color is represented by one number, which is an index into a table of colors.
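The color encodings above can be sketched in plain JavaScript. This is a minimal illustration, not a standard API: `packRGB`, `unpackRGB`, and the four-entry `palette` are hypothetical names chosen for this example.

```javascript
// Pack three 8-bit channel values (0-255) into one 24-bit integer, 0xRRGGBB.
function packRGB(r, g, b) {
  return (r << 16) | (g << 8) | b;
}

// Recover the three 8-bit channels from a 24-bit color.
function unpackRGB(color) {
  return {
    r: (color >> 16) & 0xff,
    g: (color >> 8) & 0xff,
    b: color & 0xff,
  };
}

// Indexed color: each pixel stores a small index into a table of colors.
// The palette below is a made-up 4-entry table (black, red, green, blue).
const palette = [0x000000, 0xff0000, 0x00ff00, 0x0000ff];
const pixelIndex = 1;
const pixelColor = palette[pixelIndex]; // looks up red

console.log(packRGB(255, 128, 0).toString(16)); // "ff8000"
console.log(unpackRGB(0x336699));
```

With indexed color, changing one palette entry recolors every pixel that refers to it, which is why the scheme suited displays with very little memory.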

- Frame Buffer:

- A region of memory that contains color data for a digital image. Most often refers to the memory containing the image that appears on the computer’s screen.

- Every image on the screen has its own frame buffer loaded in memory with the color data for that image.

- The screen is redrawn multiple times per second, and each time it is redrawn, the color data in the frame buffer is used to determine the color of each pixel on the screen.
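As a rough sketch of the idea, a frame buffer can be modeled as a flat typed array holding three bytes per pixel. The row-major layout and the helper names (`createFrameBuffer`, `setPixel`, `getPixel`) are assumptions made for this example; real hardware and APIs use their own layouts.

```javascript
// A frame buffer for a width x height image, 3 bytes (R, G, B) per pixel.
// Pixels are assumed to be stored row by row (row-major order).
function createFrameBuffer(width, height) {
  return { width, height, data: new Uint8Array(width * height * 3) };
}

// Write the color of the pixel in column x, row y.
function setPixel(fb, x, y, r, g, b) {
  const offset = (y * fb.width + x) * 3;
  fb.data[offset] = r;
  fb.data[offset + 1] = g;
  fb.data[offset + 2] = b;
}

// Read the color of the pixel in column x, row y.
function getPixel(fb, x, y) {
  const offset = (y * fb.width + x) * 3;
  return [fb.data[offset], fb.data[offset + 1], fb.data[offset + 2]];
}

const fb = createFrameBuffer(4, 3);
setPixel(fb, 2, 1, 255, 0, 0); // make one pixel red
console.log(getPixel(fb, 2, 1));
console.log(fb.data.length); // 4 * 3 pixels * 3 bytes
```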

- Raster Graphics:

- Images are stored as a grid of pixels.

- Pixel-based graphics in which an image is specified by assigning a color to each pixel in a grid of pixels.

- The term “raster” technically refers to the mechanism used on older vacuum tube computer monitors: An electron beam would move along the rows of pixels, making them glow.

- Modern displays are still called raster displays because they are built on a grid of pixels, but they use different technology that does not involve an electron beam.

- Used in Paint programs like Adobe Photoshop, GIMP, and Paint.NET.

- File formats: GIF, PNG, JPEG, and WebP are common raster formats:

- GIF and PNG are lossless; JPEG is lossy; WebP supports both lossy and lossless compression.

- From oldest to newest: GIF (1987), JPEG (1992), PNG (1996), WebP (2010).

- GIF is an indexed 256-color format, and it supports simple animations.

- PNG can be indexed or 24-bit color.

- JPEG is meant for full-color photographs. It works less well for images that have sharp edges between different colors, where the lossy compression can cause visible artifacts.

- The amount of data necessary to represent a raster image can be quite large. However, the data usually contains a lot of redundancy, and the data can be “compressed” to reduce its size.

- Vector Graphics:

- Images are stored as a set of geometric shapes with each shape having attributes that control thickness, color, and fill.

- This does not work for all images as not all images can be represented as a set of geometric shapes (e.g., photographs of sunset).

- It is more useful for architectural blueprints and scientific illustrations.

- Display list is the set of geometric shapes that are to be drawn.

- On early vector displays, if the display list became too long, the image would start to flicker because a line would have a chance to visibly fade before its next turn to be redrawn.

- The amount of information needed to represent the image is much smaller using a vector representation than using a raster representation.

- Vector graphics use less memory and storage to represent an image than raster graphics, hence they are drawn faster.

- Used in Drawing programs like Adobe Illustrator, Corel Draw, and Inkscape.

- File formats: SVG (Scalable Vector Graphics) is a common vector format.

- SVG images can include raster images as shapes, but the SVG file itself is a vector file.

- It is an XML-based format for representing two-dimensional vector graphics images.

- SVG images can be scaled or zoomed without losing quality, as opposed to raster images.

- Drawing + Painting Programs:

- A practical program for image creation and editing might combine elements of painting and drawing, although one or the other is usually dominant.

- For example, a drawing program might allow the user to include a raster-type image, treating it as one shape.

- A painting program might let the user create “layers,” which are separate images that can be layered one on top of another to create the final image. The layers can then be manipulated much like the shapes in a drawing program.

- Coordinate System:

- It sets up a correspondence between the numbers and geometric points.

- Each point in the two-dimensional plane is represented by a pair of numbers, (x, y), where x is the horizontal coordinate and y is the vertical coordinate.

- Raster graphics use integer coordinates, while vector graphics use floating-point coordinates.

1.2 Elements of 3D Graphics¶

- 3D graphics have more in common with vector graphics than with raster graphics.

- Geometric Modeling:

- It is a way to represent a scene by specifying the geometric objects contained in the scene, together with geometric transforms to be applied to them and attributes that determine their appearance.

- The starting point is to construct an “artificial 3D world” as a collection of simple geometric shapes, arranged in three-dimensional space.

- Projection:

- It is the process of converting a 3D scene into a 2D image.

- It is the equivalent of taking a photograph of the scene.

- It is the transformation that maps coordinates in 3D to coordinates in 2D.

- Viewing:

- Setting the position and orientation of the viewer in a 3D world, which determine what will be visible when the 2D image of a 3D world is rendered.

- The viewer is usually represented by a camera, which has a position and orientation in the 3D world.

- Once the view is set up, the world as seen from that point of view can be projected into 2D.

- Projection is analogous to taking a picture with the camera.

- Geometric Primitive:

- Geometric objects in a graphics system, such as OpenGL, that are NOT made up of simpler objects.

- Examples in OpenGL include points, lines, and triangles, but the set of available primitives depends on the graphics system.

- Different graphics systems make different sets of primitives available, but in many cases only very basic shapes such as lines and triangles are considered primitive.

- Hierarchical Modeling:

- Creating complex geometric models in a hierarchical fashion, starting with geometric primitives, combining them into components that can then be further combined into more complex components, and so on.

- The created components can be reused in other places in the same scene or in other scenes.

- Geometric Transformations:

- It is a coordinate transformation; that is, a function that can be applied to each of the points in a geometric object to produce a new object.

- A geometric transform is used to adjust the size, orientation, and position of a geometric object.

- Common transforms include scaling, rotation, and translation.

- Scaling:

- It multiplies each coordinate of a point by a number called the scaling factor.

- Scaling increases or decreases the size of an object, but also moves its points closer to or farther from the origin.

- Scaling can be uniform, the same in every direction or non-uniform, with a different scaling factor in each coordinate direction.

- A negative scaling factor can be used to apply a reflection.

- Rotation:

- It is a geometric transform that rotates each point by a specified angle about some point (in 2D) or axis (in 3D).

- It is used to set an object’s orientation, by rotating it by some angle about some specific axis.

- Translation:

- It is a geometric transform that adds a given translation amount to each coordinate of a point.

- Translation is used to move objects without changing their size or orientation.
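The three basic transforms described above can be sketched for 2D points in plain JavaScript. The function names and the `{ x, y }` point representation are choices made for this example only.

```javascript
// Scaling: multiply each coordinate by its scaling factor.
function scale(p, sx, sy) {
  return { x: p.x * sx, y: p.y * sy };
}

// Rotation: rotate counterclockwise about the origin by `angle` radians.
function rotate(p, angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return { x: p.x * c - p.y * s, y: p.x * s + p.y * c };
}

// Translation: add a translation amount to each coordinate.
function translate(p, dx, dy) {
  return { x: p.x + dx, y: p.y + dy };
}

const p = { x: 1, y: 2 };
console.log(scale(p, 2, 3));      // non-uniform scaling
console.log(scale(p, -1, 1));     // a negative factor reflects across the y-axis
console.log(rotate(p, Math.PI / 2));
console.log(translate(p, 10, 0));
```

Note that `scale` moves the point away from the origin as well as resizing the object it belongs to, which is why an object is usually scaled while it is still centered at the origin and translated afterwards.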

- Material:

- It refers to the properties that determine the intrinsic visual appearance of a surface, especially how it interacts with light.

- It includes the properties of an object that determine how that object interacts with light in the environment.

- Material properties in OpenGL include, for example, diffuse color, specular color, and shininess.

- Material properties can include a basic color as well as other properties such as shininess, roughness, and transparency.

- Texture:

- It is the variation in some property from point-to-point on an object.

- The most common type is image texture. When an image texture is applied to a surface, the surface color varies from point to point.

- The most common use of texture is to allow different colors for different points.

- Textures allow us to add detail to a scene without using a huge number of geometric primitives; instead, you can use a smaller number of textured primitives.

- Lighting:

- Using light sources in a 3D scene, so that the appearance of objects in the scene can be computed based on the interaction of light with the objects’ material properties.

- There can be several sources of light in a scene. Each light source can have its own color, intensity, and direction or position.

- The light from those sources will then interact with the material properties of the objects in the scene.

- Rasterization:

- It is the process of creating a raster image, that is one made of pixels, from other data that specifies the content of the image.

- For example, a vector graphics image must be rasterized in order to be displayed on a computer screen.

- Rendering:

- The process of producing a 2D image from a 3D scene description.

- It involves projecting the 3D scene into a 2D image, then determining the color of each pixel in the image based on the scene’s lighting and material properties, and finally rasterizing the image.

- Animation:

- A sequence of images that, when displayed quickly one after the other, will produce the impression of continuous motion or change.

- The term animation also refers to the process of creating such image sequences.

- Almost any aspect of a scene can change during an animation, including coordinates of primitives, transformations, material properties, and the view.

Chapter 5: Three.js; A 3-D Scene Graph API 2¶

- OpenGL:

- A family of computer graphics APIs that is implemented in many graphics hardware devices.

- There are several versions of the API, and there are implementations, or “bindings” for several different programming languages.

- Versions of OpenGL for embedded systems such as mobile phones are known as OpenGL ES.

- WebGL is a version for use on Web pages.

- OpenGL can be used for 2D as well as for 3D graphics, but it is most commonly associated with 3D.

- WebGL:

- A 3D graphics API for use on web pages. WebGL programs are written in the JavaScript programming language and display their images in HTML canvas elements.

- WebGL is based on OpenGL ES, the version of OpenGL for embedded systems, with a few changes to adapt it to the JavaScript language and the Web environment.

- WebGL is a low-level API, even more so than OpenGL 1.1, since a WebGL program has to handle a lot of the low-level implementation details that were handled internally in the original version of OpenGL.

- This makes WebGL much more flexible, but more difficult to use.

- Three.js is a higher-level API for 3D web graphics that is built on top of WebGL.

- WebGPU:

- A new JavaScript graphics API, similar to WebGL, but designed to let web programs access modern GPU capabilities such as compute shaders.

5.1 Three.js Basics¶

- Types of Projections:

- Orthographic projection:

- A projection from 3D to 2D that simply discards the z-coordinate.

- It projects objects along lines that are orthogonal (perpendicular) to the xy-plane.

- In OpenGL 1.1, the view volume for an orthographic projection is a rectangular solid.

- Perspective projection:

- A projection from 3D to 2D that projects objects along lines radiating out from a viewpoint.

- A perspective projection attempts to simulate realistic viewing.

- A perspective projection preserves perspective; that is, objects that are farther from the viewpoint are smaller in the projection.

- In OpenGL 1.1, the view volume for a perspective projection is a frustum, or truncated pyramid.
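The difference between the two projections can be sketched in a few lines of plain JavaScript. The sketch assumes the point is already in eye coordinates (viewer at the origin, looking down the negative z-axis) and that the perspective image plane sits at distance `d` in front of the viewer; both function names are hypothetical.

```javascript
// Orthographic projection: simply discard the z-coordinate.
function projectOrthographic(p) {
  return { x: p.x, y: p.y };
}

// Perspective projection onto the plane z = -d, along a line through the
// viewpoint at the origin. Assumes p.z < 0 (the point is in front of the viewer).
function projectPerspective(p, d) {
  const s = d / -p.z; // points farther away get a smaller scale factor
  return { x: p.x * s, y: p.y * s };
}

const nearPoint = { x: 2, y: 2, z: -4 };
const farPoint = { x: 2, y: 2, z: -8 };

console.log(projectOrthographic(nearPoint), projectOrthographic(farPoint));
console.log(projectPerspective(nearPoint, 1), projectPerspective(farPoint, 1));
```

Under the orthographic projection both points land on the same 2D position, while under the perspective projection the farther point lands closer to the center of the image.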


- Eye Coordinates:

- The coordinate system on 3D space defined by the viewer.

- In eye coordinates in OpenGL 1.1, the viewer is located at the origin, looking in the direction of the negative z-axis, with the positive y-axis pointing upwards, and the positive x-axis pointing to the right.

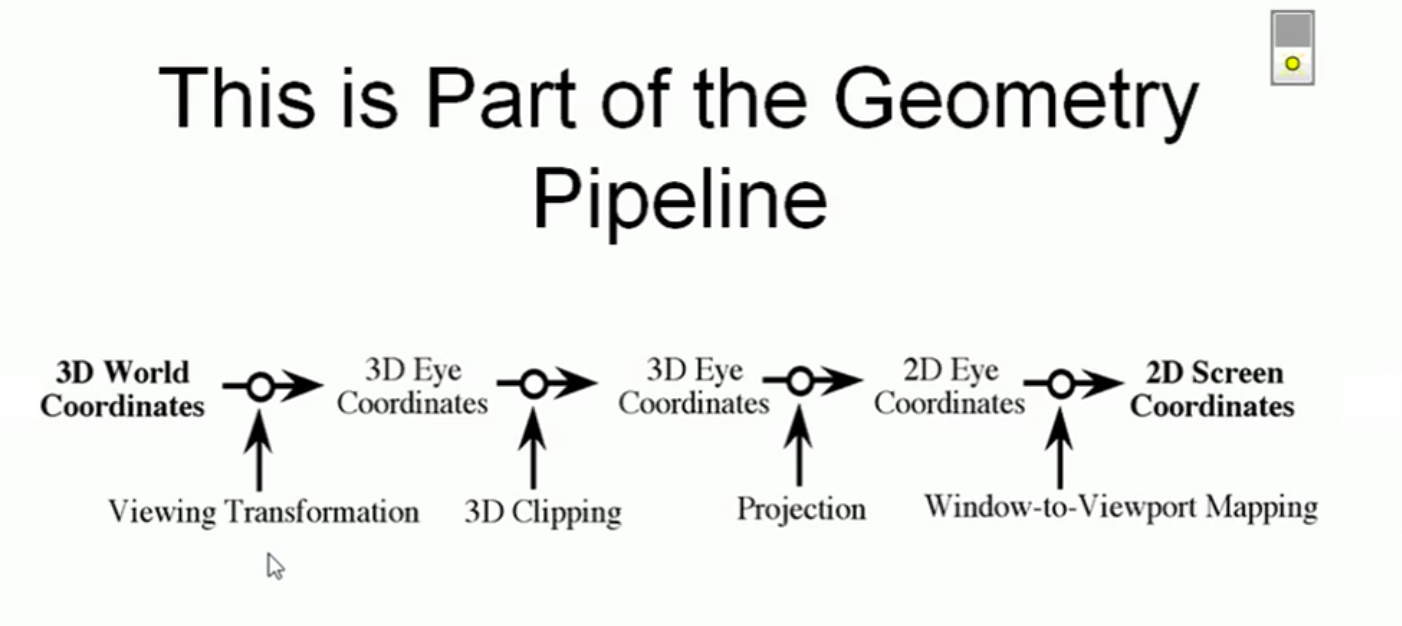

- The modelview transformation maps objects into the eye coordinate system, and the projection transform maps eye coordinates to clip coordinates.

- objects coordinates -> modelview transform -> eye coordinates -> projection transform -> clip coordinates -> normalized device coordinates -> viewport transform -> window coordinates.
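The last two steps of that chain can be sketched as follows. The example assumes the OpenGL conventions that normalized device coordinates range from -1 to 1 and that the window origin is the lower-left corner of the viewport; `clipToNDC` and `ndcToWindow` are hypothetical helper names.

```javascript
// Clip coordinates -> normalized device coordinates: divide by w.
function clipToNDC(clip) {
  return { x: clip.x / clip.w, y: clip.y / clip.w, z: clip.z / clip.w };
}

// Normalized device coordinates -> window coordinates (viewport transform).
// viewport = { x, y, width, height } in pixels.
function ndcToWindow(ndc, viewport) {
  return {
    x: viewport.x + ((ndc.x + 1) / 2) * viewport.width,
    y: viewport.y + ((ndc.y + 1) / 2) * viewport.height,
  };
}

const viewport = { x: 0, y: 0, width: 800, height: 600 };
const ndc = clipToNDC({ x: 2, y: -2, z: 1, w: 2 });
console.log(ndc);
console.log(ndcToWindow({ x: 0, y: 0, z: 0 }, viewport)); // center of the viewport
console.log(ndcToWindow(ndc, viewport));
```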

- AntiAliasing:

- A technique used to reduce the jagged or “staircase” appearance of diagonal lines, text, and other shapes that are drawn using pixels.

- When a pixel is only partly covered by a geometric shape, then the color of the pixel is a blend of the color of the shape and the color of the background, with the degree of blending depending on the fraction of the pixel that is covered by the geometric shape.
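The blending rule just described can be written as a weighted average. This sketch assumes the coverage fraction (0 to 1) has already been computed by some other means; `blendByCoverage` is a hypothetical helper.

```javascript
// Blend the shape color with the background color according to the fraction
// of the pixel that the shape covers (0 = not covered, 1 = fully covered).
function blendByCoverage(shapeColor, backgroundColor, coverage) {
  const mix = (s, b) => Math.round(s * coverage + b * (1 - coverage));
  return {
    r: mix(shapeColor.r, backgroundColor.r),
    g: mix(shapeColor.g, backgroundColor.g),
    b: mix(shapeColor.b, backgroundColor.b),
  };
}

const black = { r: 0, g: 0, b: 0 };
const white = { r: 255, g: 255, b: 255 };

// A black line covering a quarter of a pixel on a white background
// produces a light gray pixel.
console.log(blendByCoverage(black, white, 0.25));
```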

- Primitive Shapes in Three.js:

- Points.

- Mesh: for triangles.

- Line.

- LineSegments.

- LineLoop.

- A visible object is made up of some geometry plus a material that determines the appearance of that geometry.

- Vertex:

- One of the points that define a geometric primitive, such as the two endpoints of a line segment or the three vertices of a triangle.

- The plural is “vertices”.

- A vertex can be specified in a coordinate system by giving its x and y coordinates in 2D graphics, or its x, y, and z coordinates in 3D graphics.

- Alternatives to Three: Babylon.js, A-Frame, and PlayCanvas.

- About JavaScript Modules:

- Use the `import` statement to import a module.

- Use an import map (a script element with `type="importmap"`) to specify the location of the modules to be imported.

<script type="importmap">

{

"imports": {

"three": "./script/three.module.min.js",

"addons/": "./script/"

}

}

</script>

<script type="module">

import * as THREE from "three";

import { OrbitControls } from "addons/controls/OrbitControls.js";

import { GLTFLoader } from "addons/loaders/GLTFLoader.js";

</script>

- Three.js works with the HTML canvas element.

- Three.js is an object-oriented scene graph API.

- The basic procedure is to:

- Build a scene graph out of Three.js objects.

- Render an image of the scene it represents.

- Animation can be implemented by modifying properties of the scene graph between frames.

- Three.js Classes:

- `Scene`: The root of the scene graph.

- `Camera`: The viewpoint from which the scene is viewed.

- `Renderer`: The object that renders the scene to the canvas; `WebGLRenderer` is the most common renderer.

- `Mesh`: A geometric object that can be rendered.

- `Material`: The appearance of a mesh.

- `Light`: A source of light in the scene.

- `Geometry`: The shape of a mesh.

- `Texture`: An image that a material can apply to the surface of a mesh.

- `Object3D`: The base class for all objects in the scene graph.

- Three.Scene:

- The root of the scene graph.

- A Scene object is a holder for all the objects that make up a 3D world, including lights, graphical objects, and possibly cameras.

- `scene.add(object)` and `scene.remove(object)` are used to add and remove objects from the scene.

- Three.Camera:

- The viewpoint from which the scene is viewed.

- It represents a combination of a viewing transformation and a projection.

- There are 2 types of cameras:

`OrthographicCamera` and `PerspectiveCamera`.

- The near and far parameters give the z-limits in terms of distance from the camera.

- Three.Renderer:

- The object that renders the scene to the canvas; the most common renderer is `WebGLRenderer`.

- It creates an image from a scene graph.

- Three.Vector3:

- A class that represents a 3D vector.

- It is used to represent points in 3D space.

- It has methods for adding, subtracting, and scaling vectors and other vector operations such as dot and cross products.

- `v3.x`, `v3.y`, `v3.z`: The x, y, and z components of the vector (for example, the coordinates of an object's position).

- `v3.set(x, y, z)` sets all three components at once.

- Three.Euler:

- A class that represents a rotation in terms of three angles (in radians) about the x, y, and z axes.

- It is used to represent the rotation of an object.

- `euler.x`, `euler.y`, `euler.z` or `euler.set(x, y, z)`: The angles of rotation about the x, y, and z axes.

- The cumulative effect of rotations about the three coordinate axes depends on the order in which the rotations are applied.
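To see why the order of rotations matters, the following sketch rotates the same vector by 90 degrees about the x-axis and the y-axis, in both orders. It uses plain JavaScript rather than Three.js; `rotateX` and `rotateY` are hypothetical helpers written for this example.

```javascript
// Rotate vector v = [x, y, z] about the x-axis by `a` radians.
function rotateX([x, y, z], a) {
  const c = Math.cos(a), s = Math.sin(a);
  return [x, y * c - z * s, y * s + z * c];
}

// Rotate vector v = [x, y, z] about the y-axis by `a` radians.
function rotateY([x, y, z], a) {
  const c = Math.cos(a), s = Math.sin(a);
  return [x * c + z * s, y, -x * s + z * c];
}

const v = [0, 0, 1];
const quarterTurn = Math.PI / 2;

const xThenY = rotateY(rotateX(v, quarterTurn), quarterTurn);
const yThenX = rotateX(rotateY(v, quarterTurn), quarterTurn);

// Round to hide tiny floating-point errors when printing.
console.log(xThenY.map((n) => Math.round(n) + 0)); // approximately (0, -1, 0)
console.log(yThenX.map((n) => Math.round(n) + 0)); // approximately (1, 0, 0)
```

The two results point in different directions, so a set of three Euler angles only describes a rotation once the order of application is fixed.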

- Three.Object3D:

- The base class for all objects in the scene graph.

- It is a container for other objects, and has a position, rotation, and scale.

- All objects, including Cameras, Lights, Scenes, and Meshes, are derived from Object3D (subclass or extension of Object3D).

- Methods include:

- `obj.add(object)` and `obj.remove(object)` to add and remove children.

- `obj.clone()` to make a copy of the object.

- Properties include:

- `obj.children`: List of children.

- `obj.parent`: The parent object (another Object3D), or null for the root of the scene graph.

- `obj.scale`, `obj.position`, `obj.rotation`: Define the transformation of the object; the object is scaled first, then rotated, then translated.

- `obj.scale` and `obj.position` are Vector3 instances and `obj.rotation` is an Euler instance; they can be updated as `obj.scale.set(x, y, z)` or `obj.rotation.x = x`.

- Default scale is `new THREE.Vector3(1, 1, 1)`.

- Default position is `new THREE.Vector3(0, 0, 0)`.

- Default rotation is `new THREE.Euler(0, 0, 0)`.

- Three.Points:

- Three.Mesh:

- Three.js comes with classes to represent common mesh geometries, such as a sphere, a cylinder, and a torus.

- Classes like `BoxGeometry`, `PlaneGeometry`, `SphereGeometry`, `CylinderGeometry`, and `TorusGeometry` are available.

- Three.Line:

- Three.LineSegments:

- Three.LineLoop:

- Three.BufferGeometry:

- Includes the vertices of the shape along with their attributes, stored as arrays of numbers in typed arrays (TypedArray).

- `Float32Array`: an array that can only contain 32-bit floating-point numbers.

- `Uint16Array`: an array that can only contain 16-bit unsigned integers.

- The typed array must be wrapped in a `THREE.BufferAttribute` object.

let vertexCoords = new Float32Array([0, 0, 0, 1, 0, 0, 0, 1, 0]);

let vertexAttrib = new THREE.BufferAttribute(vertexCoords, 3);

let geometry = new THREE.BufferGeometry();

geometry.setAttribute("position", vertexAttrib);
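The second argument of `BufferAttribute` in the code above (3) says how many consecutive numbers in the flat array belong to one vertex. The following plain-JavaScript sketch shows that layout without using Three.js; `getVertex` is a hypothetical helper, not part of the library.

```javascript
// Nine numbers = three vertices with three coordinates (x, y, z) each.
const vertexCoords = new Float32Array([0, 0, 0, 1, 0, 0, 0, 1, 0]);
const itemSize = 3; // numbers per vertex

// Read the coordinates of vertex number `index` from the flat array.
function getVertex(array, itemSize, index) {
  const start = index * itemSize;
  return Array.from(array.subarray(start, start + itemSize));
}

const vertexCount = vertexCoords.length / itemSize;
console.log(vertexCount);                         // 3
console.log(getVertex(vertexCoords, itemSize, 1)); // [1, 0, 0]
```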

- Three.Material:

- It can specify the color and the size of the points, among other properties.

- It is the base class that materials for specific primitives extend, such as `LineBasicMaterial` and `PointsMaterial`.

- Three.Color:

- It is an RGB color that has `c.r`, `c.g`, and `c.b` properties with values between 0 and 1.

- It can be created with `new THREE.Color(0xff0000)` or `new THREE.Color("red")` or `new THREE.Color(1, 0, 0)` or `new THREE.Color('rgb(255,0,0)')`.

- Light classes extend the `THREE.Object3D` class.

- Types of Lights:

- Directional lights:

- A light source whose light rays are parallel, all arriving from the same direction.

- Can be considered to be a light source at an effectively infinite distance.

- Also called a “sun,” since the Sun is an example of a directional light source.

- Point lights:

- A light source whose light rays emanate from a single point.

- Also called a “lamp,” since a lamp approximates a point source of light.

- Also called a positional light.

- Ambient lights:

- Directionless light that exists in an environment but does not seem to come from a particular source in the environment.

- An approximation for light that has been reflected so many times that its original source can’t be identified.

- Ambient light illuminates all objects in a scene equally.

- Spot lights:

- A light that emits a cone of illumination.

- A spotlight is similar to a point light in that it has a position in 3D space, and light radiates from that position.

- However, the light only affects objects that are in the spotlight’s cone of illumination.
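Whether a point falls inside a spotlight's cone can be checked by comparing the angle between the spotlight's direction and the direction from the light to the point. This is a geometric sketch in plain JavaScript, not Three.js code; `isInsideSpotCone` and its parameters are hypothetical names.

```javascript
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const length = (a) => Math.sqrt(dot(a, a));

// lightPos: position of the spotlight
// lightDir: direction in which the spotlight points (need not be unit length)
// cutoffAngle: half-angle of the cone, in radians
function isInsideSpotCone(lightPos, lightDir, cutoffAngle, point) {
  const toPoint = sub(point, lightPos);
  const distance = length(toPoint);
  if (distance === 0) return true; // the point is at the light itself
  const cosAngle = dot(lightDir, toPoint) / (length(lightDir) * distance);
  return cosAngle >= Math.cos(cutoffAngle);
}

const lightPos = [0, 10, 0];
const lightDir = [0, -1, 0];  // pointing straight down
const cutoff = Math.PI / 6;   // 30-degree half-angle

console.log(isInsideSpotCone(lightPos, lightDir, cutoff, [0, 0, 0]));  // directly below
console.log(isInsideSpotCone(lightPos, lightDir, cutoff, [10, 0, 0])); // 45 degrees off-axis
```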


5.2 Building Objects¶

See the notes of unit 2.

5.3 Other Features¶

See the notes of unit 2.

Computer Graphics through OpenGL: From Theory to Experiments 3¶

- Read Chapter 2 (Sections 2.1, 2.2, 2.3, 2.4, 2.6, 2.11).

- The 3D-to-2D projection step, absent for 2D graphics, is computation-intensive.

- Toy Story (1995) was the first completely computer-generated feature film.

- OpenGL, the open-standard, cross-platform, and cross-language 3D graphics API, was released by Silicon Graphics Inc. (SGI) in 1992.

2.2 Orthographic Projection, Viewing Box and World Coordinates¶

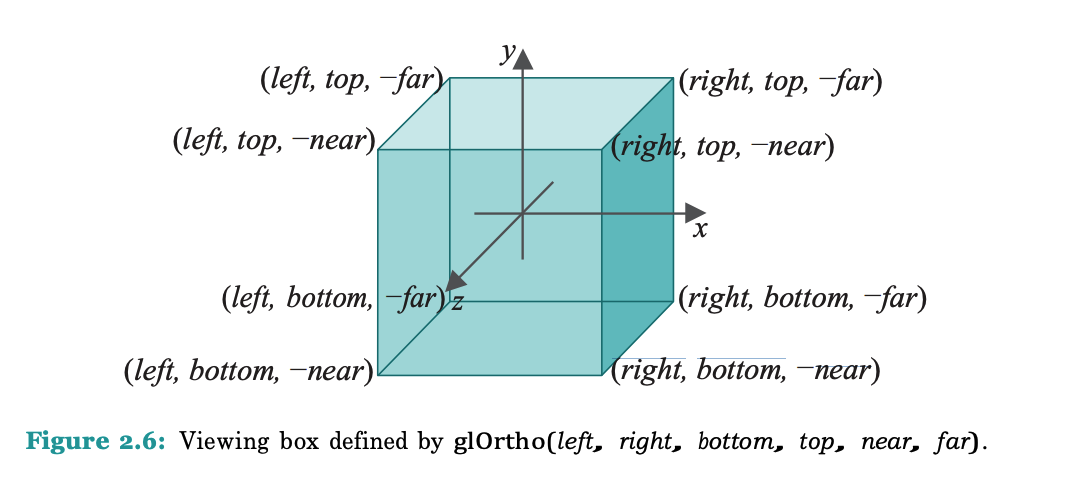

- To define an Orthographic projection:

- You need to specify the left, right, bottom, top, near, and far clipping planes.

- Call `glOrtho(left, right, bottom, top, near, far)`.

- Viewing Box:

- The viewing volume is a rectangular parallelepiped (a box) with the near and far clipping planes as the front and back faces.

- The left, right, bottom, and top clipping planes are the sides of the box.

- Hence, the vertices of the box are at (left, bottom, -near), (right, bottom, -near), (right, top, -near), (left, top, -near), (left, bottom, -far), (right, bottom, -far), (right, top, -far), and (left, top, -far).
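The eight corners listed above follow directly from the six `glOrtho` parameters. The sketch below computes them in plain JavaScript and adds a simple containment test; `viewingBoxCorners` and `isInsideViewingBox` are hypothetical helpers, not OpenGL functions.

```javascript
// Corners of the viewing box defined by glOrtho(left, right, bottom, top, near, far).
// The near and far faces lie at z = -near and z = -far.
function viewingBoxCorners(left, right, bottom, top, near, far) {
  const corners = [];
  for (const z of [-near, -far]) {
    corners.push([left, bottom, z], [right, bottom, z], [right, top, z], [left, top, z]);
  }
  return corners;
}

// A point is visible only if it lies inside the box on all three axes.
function isInsideViewingBox([x, y, z], left, right, bottom, top, near, far) {
  return x >= left && x <= right &&
         y >= bottom && y <= top &&
         z <= -near && z >= -far;
}

const corners = viewingBoxCorners(-1, 1, -1, 1, 1, 10);
console.log(corners);
console.log(isInsideViewingBox([0, 0, -5], -1, 1, -1, 1, 1, 10)); // inside the box
console.log(isInsideViewingBox([0, 0, 5], -1, 1, -1, 1, 1, 10));  // behind the viewer
```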

- World Space: The coordinate system in which the objects are defined (shot or modeled).

- Screen Space: The coordinate system in which the objects are displayed (printed).

- Continue on unit 2.

Basics and Elements of Computer Graphics & Creating Graphics with OpenGL 4¶

1. Basics and Elements of Computer Graphics¶

- Pixel: The smallest unit of a digital image.

- Resolution: The number of pixels in an image.

- Raster: A rectangular pattern of parallel lines.

- Bitmap: A representation in which each item corresponds to one or more bits of information.

- Vector: A quantity having direction and magnitude, especially as determining the position of one point in space relative to another.

- Computer graphics can be classified into two categories: Raster (Bitmap) graphics and Vector (Object-oriented) graphics.

- Raster (Bitmap) Graphics:

- Pixel-based graphics where each pixel is modified individually.

- Easy to create, edit, and display on monitors (monitors are already pixel-based arrays).

- Cannot be scaled without losing quality, as the resolution is fixed.

- Vector (Object-oriented) Graphics:

- Object-based graphics where objects are defined by mathematical equations.

- Can be scaled without losing quality.

- Images have smooth edges and are composed of lines, curves, and polygons.

- Hard to use in realistic images like photographs as colors differ from pixel to pixel.

- Applications of Computer Graphics:

- Computer-Aided Design (CAD): designs of buildings, cars, etc.

- Computer-Aided Manufacturing (CAM): designing and controlling manufacturing processes.

- Computer Art: creating art using computers.

- Entertainment: movies, games, etc.

- Education: simulations, virtual reality, etc.

- Image Processing: enhancing images, removing noise, etc. (used in medical imaging, satellite imaging, etc.).

- Graphical User Interface (GUI): user-friendly interfaces.

- Computer Graphics Elements (Primitives):

- Polyline:

- A connected sequence of straight line segments; open or closed.

- Attributes: color, width (thickness), and style (solid, dashed, etc.).

- Each line segment is called an edge, and each edge has two endpoints.

- The common endpoint where two edges meet is called a vertex.

- Polygon:

- A closed polyline.

- Simple polygon: a polygon that does not intersect itself.

- Text:

- A sequence of characters.

- Some graphics devices have two modes: text mode and graphics mode.

- Filled Regions:

- A region enclosed by a polygon, and filled with a color or a pattern.


2. Picture Transformations¶

- See the notes of unit 2.

3. Creating Graphics with OpenGL¶

- See the notes of unit 2.

A Short Introduction to Computer Graphics 5¶

- Aliasing: The effect of jagged edges in a digital image.

- AntiAliasing uses intermediate gray levels to “smooth” the appearance of the line.

- Occlusions: The effect of one object blocking another object from view.

- Ray tracing: send a light ray from the eye to each pixel on the screen and compute the first intersection with an object, while later intersections are ignored (hidden behind the first object).

- Z-buffering: a technique that stores the depth of each pixel in a depth buffer (z-buffer) alongside the frame buffer. When a new pixel is drawn, its depth is compared with the depth already stored for that position, and the pixel with the smaller depth is kept.

- Both ray tracing and z-buffering are used to solve the hidden surface problem or apply occlusions.
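The depth comparison at the heart of z-buffering can be sketched as follows. The buffers are plain typed arrays and `drawFragment` is a hypothetical helper; the sketch assumes that a smaller depth value means closer to the viewer.

```javascript
const width = 4;
const height = 4;

// One color and one depth value per pixel. Depths start at Infinity so that
// the first thing drawn at any pixel always passes the test.
const colorBuffer = new Uint32Array(width * height);
const depthBuffer = new Float32Array(width * height).fill(Infinity);

// Draw a candidate pixel only if it is closer than what is already stored.
function drawFragment(x, y, depth, color) {
  const i = y * width + x;
  if (depth < depthBuffer[i]) {
    depthBuffer[i] = depth;
    colorBuffer[i] = color;
    return true;  // visible so far
  }
  return false;   // hidden behind something already drawn
}

drawFragment(1, 1, 5, 0xff0000); // red surface at depth 5
drawFragment(1, 1, 9, 0x0000ff); // blue surface behind it: rejected
drawFragment(1, 1, 2, 0x00ff00); // green surface in front: replaces red

console.log(colorBuffer[1 * width + 1].toString(16)); // "ff00"
```

Because each pixel is resolved independently, the surfaces can be drawn in any order and the nearest one still wins.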

Coordinates and Transformations 6¶

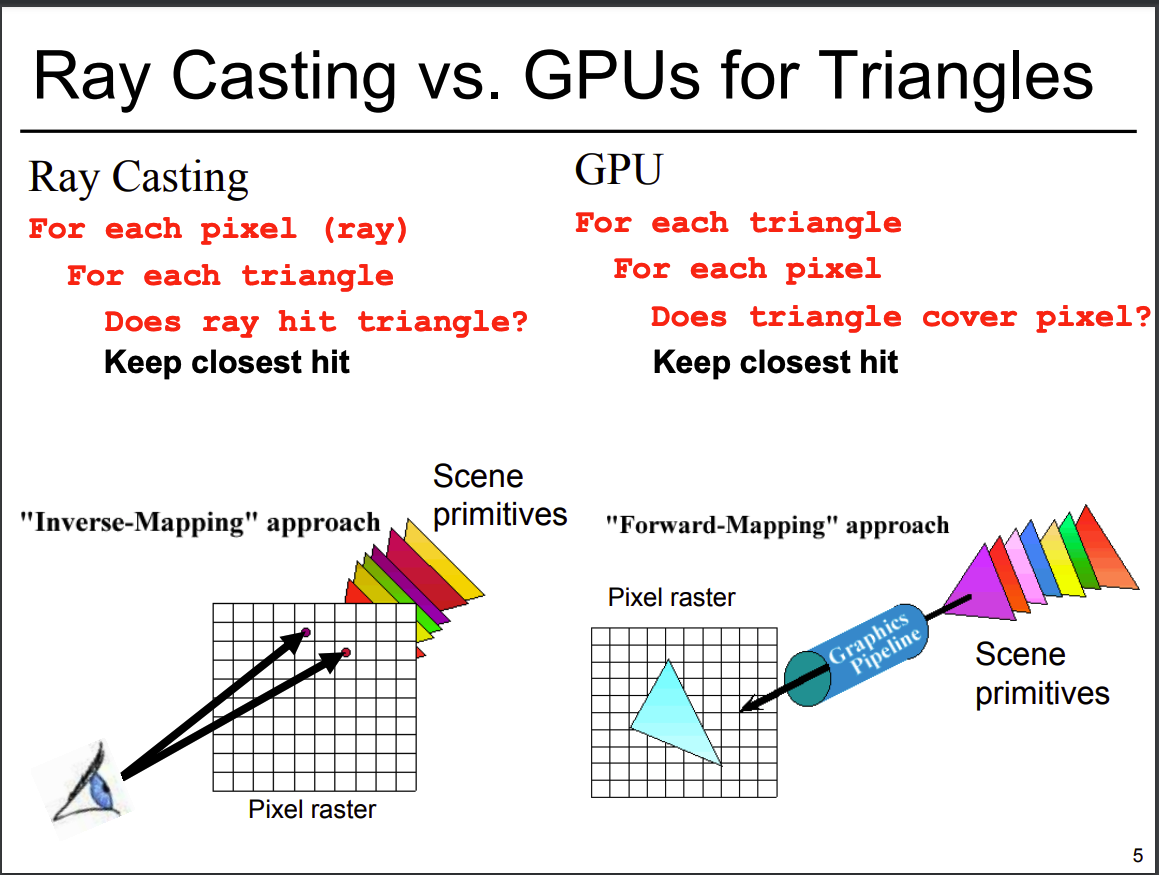

- Rasterization: The process of taking a triangle and figuring out which pixels it covers. It requires:

- Vertices information (position, color, etc.).

- Compute illumination values to adjust the color of the pixels.

- Compute visibility by checking the depth of each pixel: if another pixel with a smaller depth has already been drawn at the same position, the new pixel is hidden.

- A rasterization-based renderer can stream over the triangles, no need to keep entire dataset around – Allows parallelism and optimization of memory systems.

- Hierarchical rasterization tests blocks of pixels before going to per-pixel level (can skip large blocks at once).
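One common way to decide which pixels a triangle covers is to test each pixel center against the triangle's three edges (the "edge function" approach). The sketch below is a brute-force version under that assumption; it tests every pixel of a small grid and does not include the hierarchical block test mentioned above. `edge` and `coveredPixels` are hypothetical names.

```javascript
// Signed area test: positive on one side of the line a->b, negative on the other.
function edge(a, b, p) {
  return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Return the [x, y] indices of all pixels whose centers lie inside the triangle.
function coveredPixels(v0, v1, v2, width, height) {
  const covered = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const p = { x: x + 0.5, y: y + 0.5 }; // pixel center
      const w0 = edge(v1, v2, p);
      const w1 = edge(v2, v0, p);
      const w2 = edge(v0, v1, p);
      // Inside if the center is on the same side of all three edges
      // (either sign, so the vertex order does not matter).
      const inside =
        (w0 >= 0 && w1 >= 0 && w2 >= 0) || (w0 <= 0 && w1 <= 0 && w2 <= 0);
      if (inside) covered.push([x, y]);
    }
  }
  return covered;
}

const pixels = coveredPixels({ x: 0, y: 0 }, { x: 4, y: 0 }, { x: 0, y: 4 }, 4, 4);
console.log(pixels.length); // 10
```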

Perspective Matrix & Orthographic Camera View 7 8¶

const viewSize = 900;

const aspectRatio = window.innerWidth / window.innerHeight;

const camera = new THREE.OrthographicCamera((-aspectRatio * viewSize) / 2, (aspectRatio * viewSize) / 2, viewSize / 2, -viewSize / 2, 0.1, 1000);

- Axis:

- x: left to right (positive to the right).

- y: bottom to top (positive to the top).

- z: out of the screen (positive toward the viewer, negative away from the viewer into the screen).

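// With ES modules, OrbitControls is imported by name (as in the module example earlier) instead of being read from THREE.
const cameraControls = new OrbitControls(camera, renderer.domElement);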

cameraControls.target.set(0, 0, 0);

cameraControls.update();

- `cameraControls.target.set(x, y, z)`: The point the camera is looking at.

- `camera.position.set(x, y, z)`: The position of the camera.

Viewing and Transformations 9¶

- In eye space, the eye (camera) is located at the origin (0.0, 0.0, 0.0).

- The GLU library provides the `gluLookAt()` function, which takes an eye position, a position to look at, and an up vector, all in object space coordinates.

- The `gluLookAt()` function computes the inverse camera transform according to its parameters and multiplies it onto the current matrix stack.
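The transform that a look-at function builds can be sketched in plain JavaScript. This follows the usual construction (a forward, side, and up vector derived from the three parameters) but is only an illustration: `lookAt` here returns a function that maps a point into eye space instead of producing a 4x4 matrix, and it is not the GLU implementation.

```javascript
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a, b) => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (a) => {
  const len = Math.sqrt(dot(a, a));
  return [a[0] / len, a[1] / len, a[2] / len];
};

// eye: camera position, center: point to look at, up: approximate up direction.
function lookAt(eye, center, up) {
  const f = normalize(sub(center, eye)); // forward (viewing direction)
  const s = normalize(cross(f, up));     // side (camera's right)
  const u = cross(s, f);                 // true up, perpendicular to f and s
  // In eye space the camera sits at the origin and looks down the negative z-axis.
  return function toEyeSpace(p) {
    const d = sub(p, eye);
    return [dot(s, d), dot(u, d), -dot(f, d)];
  };
}

const toEye = lookAt([0, 0, 5], [0, 0, 0], [0, 1, 0]);
console.log(toEye([0, 0, 5])); // the eye itself maps to the origin
console.log(toEye([0, 0, 0])); // the look-at point lies 5 units down the negative z-axis
```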

Unit 1 Lecture 1: Overview of Computer Graphics 10¶

- A printer is a computer graphics device because it uses hardware, software, and applications to create visual output.

- Frame buffer: a region of memory that contains an array representation of all pixels on the screen.

- CRT: Cathode Ray Tube:

- It is an older technology used in monitors to display images.

- It relies on electron beams, positioned by magnetic fields, that hit a phosphor-coated screen, which glows in a specific color depending on the beam details (intensity, color, etc.).

- To show motion, the frame buffer is updated multiple times per second, according to the FPS (frames per second) rate.

- History of Computer Graphics:

- 1950 - 1960:

- Graphics were used for scientific and engineering applications.

- Displaying outputs on strip charts and pen plotters, and the use of A/D converters.

- Cost of CRT refresh was high: computers were slow, expensive, and unreliable.

- 1960 - 1970:

- WireFrame graphics, display processors, and storage tubes were introduced.

- Sketchpad was developed by Ivan Sutherland at MIT (1963).

- 1970 - 1980:

- Raster graphics, workstations, and PCs were introduced.

- The beginning of graphics standards and APIs:

- GKS (Graphical Kernel System).

- Core Graphics System.

- From wireframes to filled polygons.

- 1980 - 1990:

- 3D graphics, color, and texture mapping were introduced.

- Realism comes to computer graphics.

- Smooth shading, environment mapping, and bump mapping (putting texture on objects without additional polygons) were introduced.

- Ray tracing (tracing the path of light rays and how they interact with objects) and radiosity (light bouncing off surfaces) were introduced.

- New special purpose hardware was introduced:

- Silicon Graphics Geometry Engine: VLSI chip for 3D graphics.

- Networked graphics and Human-Computer Interaction (HCI) were introduced.

- New industry standards:

- PHIGS (Programmer’s Hierarchical Interactive Graphics System).

- RenderMan.

- 1990 - 2000:

- OpenGL and DirectX were introduced.

- WebGL 1.0, released later (2011), is based on OpenGL ES 2.0.

- New hardware capabilities:

- Texture mapping.

- Blending.

- Accumulation buffer.

- Stencil buffer.

- 2000 onward:

- Photorealism and real-time rendering.

- Graphics cards now outperform CPUs on highly parallel workloads such as rendering.

- Game boxes and game players are important in the graphics industry.

- Movies and TV shows are now using computer graphics.

- Programmable pipelines and shaders were introduced.

- CAD: Computer-Aided Design and CAM: Computer-Aided Manufacturing are now using computer graphics.

- Today's graphics standards:

- OpenGL.

- DirectX.

Unit 1 Lecture 2: Viewing and Projections 11¶

- Functions of computer graphics:

- Modeling:

- Defining the scene in world coordinates.

- Viewing and Projections:

- Defining the viewpoint, or how the scene is to be viewed.

- Viewing is where you place the observer in the scene.

- A camera is used to represent the observer.

- Projection is where you specify how the observer’s view is created (the frame of viewport):

- Where the camera is placed.

- The characteristics of the camera.

- Rendering:

- Converting the scene into an image.

- Mapping the scene to the screen space.

- Hidden surface removal: removing objects that are behind other objects relying on the z-value of the objects.

- Double buffering:

- To avoid flickering and improve the performance of the rendering process.

- Maintain two frame buffers: one for the current frame and one for the next frame.

- Display the ready (current) frame buffer while the next frame buffer is being rendered (computed).


- Specifying a View:

- Place the observer in the world with specific information:

- Location: the eye point.

- Direction: the look-at point.

- Orientation (what’s up, what’s down, left, right, etc.).

- Project the scene onto a 2D plane with specific information:

- Field of view (FOV):

- Zoomed out: wide FOV.

- Zoomed in: narrow FOV.

- Aspect ratio.

- Near and far clipping planes.


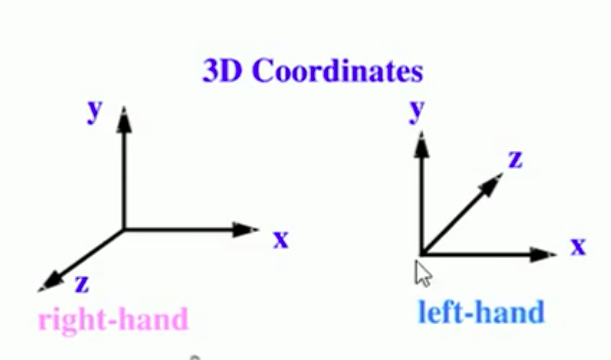
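The projection parameters listed above (field of view, aspect ratio, and near plane) together determine the size of the near face of the viewing frustum. The sketch below assumes that the field of view is the vertical angle, given in degrees; `frustumAtNear` is a hypothetical helper.

```javascript
// Compute the extent of the near clipping plane of a perspective frustum.
// fovYDegrees: vertical field of view, aspect: width / height, near: distance to near plane.
function frustumAtNear(fovYDegrees, aspect, near) {
  const halfAngle = (fovYDegrees * Math.PI) / 180 / 2;
  const top = near * Math.tan(halfAngle);
  const right = top * aspect;
  return { left: -right, right, bottom: -top, top };
}

const wide = frustumAtNear(90, 2, 1);   // zoomed out: wide field of view
const narrow = frustumAtNear(30, 2, 1); // zoomed in: narrow field of view

console.log(wide);
console.log(narrow);
```

A narrower field of view yields a smaller near face, so a smaller part of the scene fills the same viewport and objects appear larger.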

- Standard Viewing Point:

- x: left to right (positive to the right).

- y: bottom to top (positive to the top).

- z:

- left-handed: into the screen (positive towards the screen, away from the viewer).

- right-handed: out of the screen (positive towards the viewer, closer to the viewer).

- The viewer cannot see anything closer than the near clipping plane or farther than the far clipping plane.

- Clipping: limiting the visible world to what the viewing surface (window) can display. Objects outside the viewing frustum are removed from the scene, which reduces the amount of data that needs to be processed.
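A simplified version of this test can be written for single points. The sketch below assumes a right-handed camera space in which the viewer looks down the negative z-axis; `isInsideFrustum` and the shape of the `frustum` object are hypothetical names chosen for illustration. Real clipping also cuts polygons that straddle a frustum boundary, which this sketch does not attempt.

```javascript
// Returns true when a camera-space point [x, y, z] lies inside the
// viewing frustum. Assumes the camera looks down its -z axis.
function isInsideFrustum(p, frustum) {
  const depth = -p[2]; // distance in front of the camera

  // Reject points in front of the near plane or beyond the far plane
  // (points behind the camera have negative depth and fail here too).
  if (depth < frustum.near || depth > frustum.far) return false;

  // At this depth, the visible region is a rectangle whose size is
  // determined by the field of view and the aspect ratio.
  const halfHeight =
    depth * Math.tan((frustum.fovYDegrees * Math.PI) / 180 / 2);
  const halfWidth = halfHeight * frustum.aspect;

  return Math.abs(p[0]) <= halfWidth && Math.abs(p[1]) <= halfHeight;
}

const frustum = { fovYDegrees: 90, aspect: 1, near: 1, far: 100 };

const points = [
  [0, 0, -5],   // in front of the camera, inside the frustum
  [0, 0, -0.5], // closer than the near plane
  [0, 0, -200], // beyond the far plane
  [10, 0, -5],  // too far to the right at this depth
  [0, 0, 5],    // behind the camera
];

// Keep only the points that still need to be processed.
const visible = points.filter((p) => isInsideFrustum(p, frustum));
console.log(visible);
```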

The JavaScript Programming Language 12 13¶

- You should try to use async functions and await when possible. You should only occasionally have to use then() and catch().

- JavaScript was first developed by Netscape (whose browser was the predecessor of Firefox) at about the same time that Java was introduced, and the name JavaScript was chosen to ride the tide of Java’s increasing popularity.

- The standardized language is officially called ECMAScript, but the name is not widely used in practice, and versions of JavaScript in actual web browsers don’t necessarily implement the standard exactly.
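To make the advice about async functions concrete, here is a small comparison of the two styles. `loadModel` is a hypothetical stand-in for any promise-returning operation (such as loading a file); it uses a timer so the example runs without a network.

```javascript
// Hypothetical asynchronous loader: resolves with fake data, or rejects
// when the name is empty. It stands in for any promise-returning call.
function loadModel(name) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (name) resolve({ name, vertexCount: 3 });
      else reject(new Error("model name is required"));
    }, 10);
  });
}

// Preferred style: an async function with await and ordinary try/catch.
async function describeModel(name) {
  try {
    const model = await loadModel(name);
    return `${model.name}: ${model.vertexCount} vertices`;
  } catch (err) {
    return `failed: ${err.message}`;
  }
}

// Equivalent promise-chain style using then() and catch().
function describeModelWithThen(name) {
  return loadModel(name)
    .then((model) => `${model.name}: ${model.vertexCount} vertices`)
    .catch((err) => `failed: ${err.message}`);
}

// Outside an async function, then() is still needed to consume the result.
describeModel("triangle").then((text) => console.log(text));
describeModelWithThen("").then((text) => console.log(text));
```

Both functions return a promise for the same string; the async version simply reads like sequential code, which is why it is usually easier to follow.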

Reading Summary¶

| ID | Reference | Done |
|---|---|---|
| 1 | 1 Introduction | ✅ |
| 2 | 2 Ch1: Introduction to Computer Graphics | ✅ |
| 3 | 2 Ch5: Three.js; A 3-D Scene Graph API | 🟥 |
| 4 | 3 Computer Graphics through OpenGL: From Theory to Experiments | 🟨 |
| 5 | 4 Basics and Elements of Computer Graphics & Creating Graphics with OpenGL | 🟥 |
| 6 | 5 A Short Introduction to Computer Graphics | ✅ |
| 7 | 6 Coordinates and Transformations | ✅ |
| 8 | 7 Perspective Matrix | ✅ |
| 9 | 8 Orthographic Camera View | ✅ |
| 10 | 9 Viewing and Transformations | 🟨 |
| 11 | 10 Lecture 1: Overview of Computer Graphics | ✅ |
| 12 | 11 Lecture 2: Viewing and Projections | ✅ |
| 13 | 12 JavaScript 1 | 🟥 |
| 14 | 13 JavaScript 2 | ✅ |

- ✅: Completed.

- 🟨: Resource was read quickly; maybe read it again.

- 🟥: Resource was partially read; MUST go back and finish it.

References¶

1. University of the People. (2024). CS4406: Computer Graphics. Unit 1: Introduction to Graphics. https://my.uopeople.edu/mod/book/view.php?id=444238&chapterid=540576

2. Eck, D. (2018). Introduction to Computer Graphics, v1.2. Hobart and William Smith Colleges. https://my.uopeople.edu/pluginfile.php/1928357/mod_book/chapter/540572/CS%204406Eck-graphicsbook.pdf Chapter 1: Introduction; Chapter 5: Three.js; A 3-D Scene Graph API.

3. Guha, S. (2019). Computer graphics through OpenGL: From theory to experiments, 3rd edition. https://my.uopeople.edu/pluginfile.php/1928357/mod_book/chapter/540572/CS4406_Guha%20reading.pdf Read Chapter 2 (Sections 2.1, 2.2, 2.3, 2.4, 2.6, 2.11).

4. Mbise, M. (2017). Computer graphics. African Virtual University (AVU). https://my.uopeople.edu/pluginfile.php/1928357/mod_book/chapter/540572/CS4406_R1%20computer%20graphics%20%281%29.pdf Read Unit 1: Basics and Elements of Computer Graphics; Unit 3: Creating Graphics with OpenGL.

5. Durand, F. (n.d.). A Short Introduction to Computer Graphics. MIT Laboratory for Computer Science. http://people.csail.mit.edu/fredo/Depiction/1_Introduction/reviewGraphics.pdf

6. Massachusetts Institute of Technology. (2020). Coordinates and Transformations. MIT OpenCourseWare. https://ocw.mit.edu/courses/electrical-engineering-and-computer-science/6-837-computer-graphics-fall-2012/lecture-notes/ Read lecture #21, Graphics Pipeline and Rasterization.

7. Udacity. (2015, February 23). Perspective matrix - Interactive 3D graphics [Video]. YouTube. https://www.youtube.com/watch?v=yrwCdD1dpvE

8. Udacity. (2015, February 23). Three.js orthographic camera view - Interactive 3D graphics [Video]. YouTube. https://www.youtube.com/watch?v=k3adBAnDpos

9. Viewing and Transformations. OpenGL Wiki. https://www.khronos.org/opengl/wiki/Viewing_and_Transformations

10. University of the People. (2024). CS4406: Computer Graphics. Unit 1 Lecture 1: Overview of Computer Graphics. https://my.uopeople.edu/mod/kalvidres/view.php?id=444243

11. University of the People. (2024). CS4406: Computer Graphics. Unit 1 Lecture 2: Viewing and Projections. https://my.uopeople.edu/mod/kalvidres/view.php?id=444244

12. Introduction to Computer Graphics, Section A.3 – The JavaScript Programming Language. (2024). Hws.edu. https://math.hws.edu/graphicsbook/a1/s3.html

13. Introduction to Computer Graphics, Section A.4 – JavaScript Promises and Async Functions. (2024). Hws.edu. https://math.hws.edu/graphicsbook/a1/s4.html