2. Agents

Introduction [1]

- An agent perceives its environment through sensors.

- The complete set of inputs at a given time is called a percept.

- The current percept, or a sequence of percepts, can influence or provide input into the actions of an agent.

- The agent can change the environment through actuators or effectors.

- These actuators or effectors may be physical or simulated depending upon the nature of the agent.

- Embodied agents, for example, have physical actuators that can effect change in the environment of the real world.

- Software agents, on the other hand, have simulated actuators and can effect change within the virtual environment in which they operate.

- There are many examples of software agents. One example is the idea of a non-player character (NPC) in a video game. This is a simulated agent that is purposive and is designed to act with reason.

- An operation involving an effector is called an action.

- Actions can be grouped into action sequences.

- Agents can have goals that they try to achieve. These types of agents are called purposive agents.

- Thus, an agent can be looked upon as a system that implements a mapping from percept sequences to actions.

- An autonomous agent decides autonomously which action to take in the current situation to maximize progress towards its goals.

- An autonomous agent is one that makes decisions and acts on its own according to the actions it is capable of performing and the rules of reasoning or decision making that it is operating under.

- Based on this understanding, we can define an agent using these 4 rules:

- Ability to perceive the environment.

- Observations used to make decisions.

- Decisions will result in actions.

- Ability to act rationally, that is, to take the best action given the information available.

- Types of AI environments:

- Fully observable vs. partially observable:

- Fully observable means the agent can see the entire state of the environment at every point in time.

- E.g., a chess board is fully observable, while a poker game is partially observable.

- Deterministic vs. stochastic:

- Deterministic means the next state of the environment is completely determined by the current state and the action taken.

- Stochastic means there is some randomness in the environment.

- E.g., the 8-puzzle is deterministic, while a self-driving car's environment is stochastic.

- Static vs. dynamic:

- Static means the environment does not change while the agent is deliberating.

- E.g., speech analysis is static, while drone vision is dynamic.

- Discrete vs. continuous:

- Discrete means there are a finite number of states.

- E.g., a chess board is discrete, while a self-driving car's environment is continuous.

- Single-agent vs. multi-agent:

- Single-agent means there is only one agent in the environment.


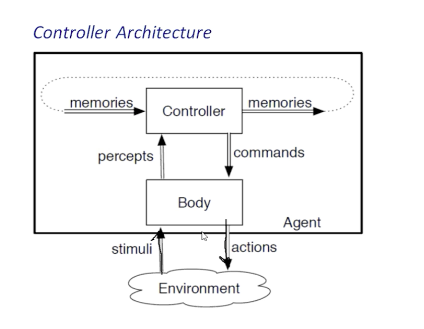

- Agent Architecture and Hierarchical Control:

- Agent = body + controller.

- The body can be physical or virtual.

- The body is the part of the agent that interacts with the agent’s environment.

- The body has both sensors and actuators.

- Sensors are the capabilities of the agent that collect stimuli from the environment.

- Actuators give the agent the ability to effect change within the environment in which it operates.

- The controller receives percepts from the body: data values that the body creates from the stimuli it senses.

- The controller is the part of the agent that must ‘reason’ about how to respond to the stimuli in order to achieve the agent’s goals.

- This reasoning results in actions that the agent must effect within its environment, which the controller does by sending commands to the body that direct its actuators.
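The body and controller split described above can be sketched as a small sense, decide, act loop. This is only an illustration under stated assumptions: the `Room` environment, the thermostat goal, and every class and command name below are hypothetical and do not come from the course material.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Room:
    """Hypothetical environment: a room with a temperature in degrees Celsius."""
    temperature: float


class Body:
    """The body holds the sensor and the actuator that touch the environment."""

    def __init__(self, room: Room) -> None:
        self.room = room

    def sense(self) -> float:
        # Sensor: turn the stimulus (room temperature) into a percept (a number).
        return self.room.temperature

    def act(self, command: str) -> None:
        # Actuator: carry out the controller's command in the environment.
        if command == "heat":
            self.room.temperature += 1.0
        elif command == "cool":
            self.room.temperature -= 1.0
        # Any other command (e.g. "idle") leaves the room unchanged.


class Controller:
    """The controller maps each percept to a command that serves the goal."""

    def __init__(self, target: float, tolerance: float = 0.5) -> None:
        self.target = target
        self.tolerance = tolerance

    def decide(self, percept: float) -> str:
        if percept < self.target - self.tolerance:
            return "heat"
        if percept > self.target + self.tolerance:
            return "cool"
        return "idle"


def run_agent(body: Body, controller: Controller, steps: int) -> List[str]:
    """Run the perceive, decide, act cycle and return the commands issued."""
    commands = []
    for _ in range(steps):
        percept = body.sense()
        command = controller.decide(percept)
        body.act(command)
        commands.append(command)
    return commands


room = Room(temperature=17.0)
commands = run_agent(Body(room), Controller(target=20.0), steps=5)
print(commands)          # ['heat', 'heat', 'heat', 'idle', 'idle']
print(room.temperature)  # 20.0
```

The controller never touches the room directly; it only sees percepts and issues commands, which is the separation the notes describe.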

Agent Architectures and Hierarchical Control [2]

2.1 Agents and Environments

- A body can also carry out actions that don’t go through the controller, such as a stop button for a robot and reflexes of humans.

- Common sensors for robots include touch sensors, cameras, infrared sensors, sonar, microphones, keyboards, mice, and XML readers used to extract information from webpages.

- As a prototypical sensor, a camera senses light coming into its lens and converts it into a two-dimensional array of intensity values called pixels.

- Commands include:

- Low-level commands such as to set the voltage of a motor to some particular value.

- High-level specifications of the desired motion of a robot such as “stop” or “go to room 103”.

- Time is discrete. Let T be the set of time points. Assume that T is totally ordered.

- Suppose P is the set of all possible percepts. A percept trace, or percept stream, is a function from T into P. It specifies which percept is received at each time.

- Suppose C is the set of all commands. A command trace is a function from T into C. It specifies the command for each time point.

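- The history of an agent at time t is its percept trace for all times up to and including t, together with its command trace for all times before t. A transduction is a function from the history of an agent at time t to the command at time t.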

- Thus a transduction is a function from percept traces to command traces that is causal in that the command at time t depends only on percepts up to and including time t.

- A controller is an implementation of a transduction.

- Although a transduction is a function of an agent’s history, it cannot be directly implemented because an agent does not have direct access to its entire history. It has access only to its current percepts and what it has remembered.

- The memory or belief state of an agent at time t is all the information the agent has remembered from the previous times.

- An agent has access only to the part of the history that it has encoded in its belief state.

- Thus, the belief state encapsulates all of the information about its history that the agent can use for current and future commands.

- At any time, an agent has access to its belief state and its current percepts.

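- A belief state transition function for discrete time is a function

\[
remember: S \times P \to S
\]

where \(S\) is the set of belief states and \(P\) is the set of possible percepts.

- A command function is a function

\[
command: S \times P \to C
\]

where \(C\) is the set of commands.

- Given the belief state \(S_t\) and the percept \(P_t\) at time \(t\), the controller computes the next belief state and the current command \(C_t\):

\[
\begin{aligned}
S_{t+1} &= remember(S_t, P_t) \\
C_t &= command(S_t, P_t)
\end{aligned}
\]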

- The belief-state transition function and the command function together specify a controller for the agent.
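As a minimal sketch of how `remember` and `command` combine into a controller, consider an agent whose belief state is a running average of the prices it has seen. The price scenario, the 90% threshold, and the `BeliefState` fields are assumptions made for illustration; only the two function signatures follow the definitions above.

```python
from typing import List, NamedTuple, Tuple


class BeliefState(NamedTuple):
    count: int      # number of prices seen so far
    average: float  # running average of those prices


def remember(state: BeliefState, percept: float) -> BeliefState:
    """Belief state transition function, S x P -> S."""
    count = state.count + 1
    average = state.average + (percept - state.average) / count
    return BeliefState(count, average)


def command(state: BeliefState, percept: float) -> str:
    """Command function, S x P -> C (hypothetical rule: buy below 90% of average)."""
    if state.count > 0 and percept < 0.9 * state.average:
        return "buy"
    return "wait"


def run_controller(percepts: List[float]) -> Tuple[List[str], BeliefState]:
    state = BeliefState(count=0, average=0.0)
    commands = []
    for percept in percepts:
        commands.append(command(state, percept))  # C_t = command(S_t, P_t)
        state = remember(state, percept)          # S_{t+1} = remember(S_t, P_t)
    return commands, state


prices = [100.0, 100.0, 80.0, 100.0]
commands, final_state = run_controller(prices)
print(commands)  # ['wait', 'wait', 'buy', 'wait']
```

The agent never stores the full price history. The two numbers in the belief state are all it carries forward, which is the point of the belief state abstraction.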

2.2 Hierarchical Control

- Each layer sees the layers below it as a virtual body from which it gets percepts and to which it sends commands.

- The lower-level layers run much faster, react to those aspects of the world that need to be reacted to quickly, and deliver a simpler view of the world to the higher layers, hiding details that are not essential for the higher layers.

- The planning horizon at lower levels is typically much shorter than the planning horizon at upper levels.

- Humans have two distinct levels:

- System 1, the lower level, is fast, automatic, parallel, intuitive, instinctive, emotional, and not open to introspection.

- System 2, the higher level, is slow, deliberate, serial, open to introspection, and based on reasoning.

- There are three types of inputs to each layer at each time:

- Features coming from the belief state: the remembered (previous) values of these features.

- Features coming from the percepts of the layers below.

- Features coming from the commands of the layers above.

- There are three types of outputs from each layer at each time:

- Higher-level percepts sent to the layers above.

- Lower-level commands sent to the layers below.

- Next values of the features of the belief state.

- There are three types of functions at each layer:

- Remember: a belief-state transition function. \(remember: S \times P_l \times C_h \to S\)

- Command: a command function. \(command: S \times P_l \times C_h \to C_l\)

- Tell: a percept function. \(tell: S \times P_l \times C_h \to P_h\)

- Where:

- \(P_l\) is the set of percepts from the layers below.

- \(C_h\) is the set of commands from the layers above.

- \(C_l\) is the set of commands to the layers below.

- \(P_h\) is the set of percepts to the layers above.

- \(S\) is the set of belief states.

- \(P\) is the set of possible percepts.

- \(C\) is the set of possible commands.

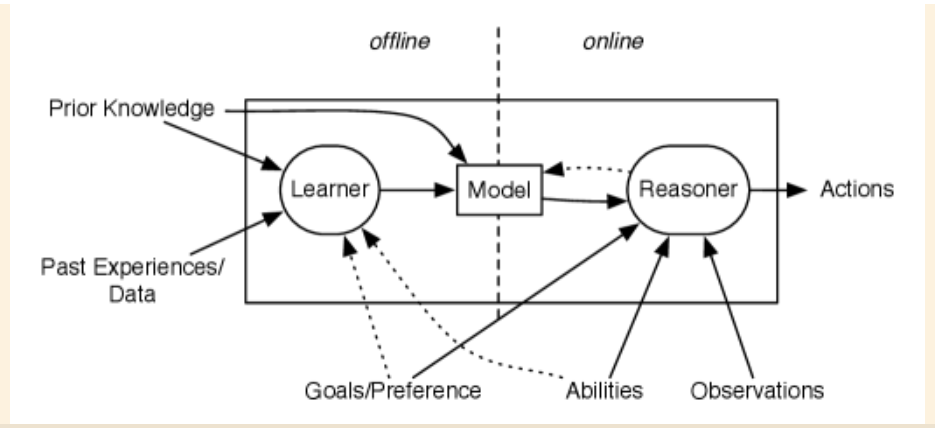
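The three layer functions can be sketched with a two-layer agent that moves along a line. Everything concrete here is an assumption for illustration: the waypoint task, the one-dimensional body, and the choice to let the upper layer read the percept that the lower layer reported on the previous step. Only the shapes of `remember`, `command`, and `tell` follow the definitions above.

```python
from typing import List, Tuple

# Hypothetical task: visit these positions on a line, in order.
WAYPOINTS: List[int] = [3, 1]


def _next_index(index: int, arrived: bool) -> int:
    """Advance to the next waypoint once the current one is reached."""
    if arrived and index < len(WAYPOINTS) - 1:
        return index + 1
    return index


# Upper layer. S: index of the current waypoint. P_l: "arrived" flag from the
# lower layer. C_h: None, because no layer sits above it.
def upper_remember(index: int, arrived: bool, command_high: None) -> int:
    return _next_index(index, arrived)


def upper_command(index: int, arrived: bool, command_high: None) -> int:
    return WAYPOINTS[_next_index(index, arrived)]  # C_l: target position


def upper_tell(index: int, arrived: bool, command_high: None) -> bool:
    return arrived and index == len(WAYPOINTS) - 1  # P_h: task finished


# Lower layer. S: number of moves made. P_l: position sensed by the body.
# C_h: target position commanded by the upper layer.
def lower_remember(moves: int, position: int, target: int) -> int:
    return moves + 1 if position != target else moves


def lower_command(moves: int, position: int, target: int) -> int:
    return (target > position) - (target < position)  # C_l: velocity -1, 0, +1


def lower_tell(moves: int, position: int, target: int) -> bool:
    return position == target  # P_h: "arrived" flag


def simulate(start: int, max_steps: int = 20) -> Tuple[List[int], int]:
    position, index, moves, arrived = start, 0, 0, False
    trace = [position]
    for _ in range(max_steps):
        # Upper layer reads the percept the lower layer reported last step.
        if upper_tell(index, arrived, None):
            break
        target = upper_command(index, arrived, None)
        index = upper_remember(index, arrived, None)
        # Lower layer: all three functions read the same (S, P_l, C_h).
        velocity = lower_command(moves, position, target)
        arrived = lower_tell(moves, position, target)
        moves = lower_remember(moves, position, target)
        position += velocity  # the body executes the low-level command
        trace.append(position)
    return trace, moves


trace, moves = simulate(start=0)
print(trace)  # [0, 1, 2, 3, 3, 2, 1, 1]
print(moves)  # 5
```

The upper layer only ever sees an "arrived" flag, not positions or velocities, so the lower layer presents it with the simpler view of the world that the notes describe.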

2.3 Designing Agents

- Time and features can be discrete or continuous (real numbers).

- High-level reasoning, as carried out in the higher layers, is often discrete and qualitative.

- Low-level control, as carried out in the lower layers, is often continuous and quantitative.

- A controller that reasons in terms of both discrete and continuous values is called a hybrid system.

- Qualitative reasoning uses discrete values, which can take a number of forms:

- Landmarks: exact qualitative values that describe the state of the world. E.g., “a cup is empty, full, upside down, etc.”.

- Orders-of-magnitude: approximate qualitative values that describe the state of the world. E.g., “a cup is almost empty, half full, almost full, etc.”.

- Qualitative derivatives: describe the rate of change of a qualitative value. E.g., “liquid in a cup is increasing, decreasing, or constant”.

- A flexible agent needs to do qualitative reasoning before it does quantitative reasoning.

- For simple agents, the qualitative reasoning is often done at design time, so the agent needs to only do quantitative reasoning online.

- Sometimes qualitative reasoning is all that is needed.

- An intelligent agent does not always need to do quantitative reasoning, but sometimes it needs to do both qualitative and quantitative reasoning.
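The three kinds of qualitative value can be sketched as functions that map a continuous cup fill level (a fraction between 0 and 1) to discrete labels. The thresholds and label strings below are assumptions chosen for illustration, not values from the source.

```python
def landmark(level: float) -> str:
    """Landmark: an exact qualitative value."""
    if level == 0.0:
        return "empty"
    if level == 1.0:
        return "full"
    return "partly full"


def order_of_magnitude(level: float) -> str:
    """Order of magnitude: an approximate qualitative value (hypothetical cut-offs)."""
    if level < 0.1:
        return "almost empty"
    if level > 0.9:
        return "almost full"
    if 0.4 <= level <= 0.6:
        return "about half full"
    return "in between"


def qualitative_derivative(previous: float, current: float) -> str:
    """Qualitative derivative: the direction of change between two readings."""
    if current > previous:
        return "increasing"
    if current < previous:
        return "decreasing"
    return "constant"


print(landmark(0.0))                     # empty
print(order_of_magnitude(0.95))          # almost full
print(qualitative_derivative(0.2, 0.5))  # increasing
```

A controller that only needs to know whether to keep pouring can work with these labels alone, which matches the case where qualitative reasoning is all that is needed.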

- Discrete features with continuous time provide an implicit discretization of time; there can be a next time whenever the state changes.

- Maintaining the belief state by forward prediction from a model of the world is known as dead reckoning; the agent reckons its future state from its current state without any new percepts.

- A purely reactive agent is one that does not have a belief state and does not remember anything from the past; it only reacts to the current percept.

| Agent Type | Belief State | Percept | Comment |
|---|---|---|---|
| Purely reactive | No | Yes | Only reacts to current percept |
| Dead reckoning | Yes | No | Predicts future state from current state |

- Dead reckoning may be appropriate when the world is fully observable and deterministic.

- When the world changes unpredictably or the actuators are noisy (e.g., a wheel slips, the wheel is not exactly the diameter specified in the model, or acceleration is not instantaneous), the errors accumulate and the position estimate soon becomes very inaccurate. The agent then needs reactive control that responds to its current percepts.
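The way dead-reckoning error accumulates can be shown with a small simulation. It assumes a hypothetical robot whose model says each command moves it 1.0 unit while the real wheel only covers 0.95; the numbers and the `correct_every` option are illustrative assumptions.

```python
from typing import List, Optional

COMMANDED_STEP = 1.0  # distance per command according to the agent's model
ACTUAL_STEP = 0.95    # distance the slightly undersized wheel really covers


def position_errors(steps: int, correct_every: Optional[int] = None) -> List[float]:
    """Return |estimate - true position| after each step.

    If correct_every is set, the agent reads its true position from a sensor
    every that many steps and resets its estimate.
    """
    estimate = 0.0
    true_position = 0.0
    errors = []
    for t in range(1, steps + 1):
        estimate += COMMANDED_STEP    # forward prediction from the model
        true_position += ACTUAL_STEP  # what actually happens in the world
        if correct_every and t % correct_every == 0:
            estimate = true_position  # a percept corrects the belief state
        errors.append(abs(estimate - true_position))
    return errors


pure = position_errors(steps=100)
corrected = position_errors(steps=100, correct_every=10)
print(round(pure[-1], 2))         # 5.0
print(round(max(corrected), 2))   # 0.45
```

Without percepts the error grows with every step, while occasional sensing keeps it bounded.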

- Sensors:

- Passive: continuously feed information to the agent.

- Active: the agent must request information from the sensor.
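The difference between the two sensor kinds can be sketched as two small interfaces. The class names, the thermometer readings, and the range-finder data are hypothetical and serve only to illustrate the push versus query distinction.

```python
from typing import Dict, Iterable, Iterator, List


class PassiveSensor:
    """Feeds every reading to the agent without being asked."""

    def __init__(self, readings: Iterable[float]) -> None:
        self._readings: List[float] = list(readings)

    def stream(self) -> Iterator[float]:
        for reading in self._readings:
            yield reading


class ActiveSensor:
    """Returns a reading only when the agent asks for one."""

    def __init__(self, world: Dict[str, float]) -> None:
        self._world = world
        self.queries = 0  # counts how often the agent asked

    def query(self, direction: str) -> float:
        self.queries += 1
        return self._world[direction]


thermometer = PassiveSensor([19.5, 19.7, 20.1])
range_finder = ActiveSensor({"north": 2.5, "south": 0.8})

temperatures = list(thermometer.stream())  # the agent just consumes the stream
distance = range_finder.query("north")     # the agent decides what to ask
print(temperatures, distance, range_finder.queries)
```

With an active sensor, choosing what to query becomes one of the agent's own decisions.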

Unit 2 Lectures 1 & 2 [3, 4]

- The first decision a controller in a layer makes is: do I process this information, or just pass it on to the next layer?

- Layer functions:

- Memory function: remember the current state and save it to the history of belief states.

- Command function: decide what to do next, process the information now and make a decision.

- Percept function: decide what to tell the layer above, pass on the information to the next layer.

References

1. Learning Guide Unit 2: Introduction | Home. (2025). Uopeople.edu. https://my.uopeople.edu/mod/book/view.php?id=454691&chapterid=555025

2. Poole, D. L., & Mackworth, A. K. (2017). Artificial Intelligence: Foundations of computational agents. Cambridge University Press. Chapter 2: Agent Architectures and Hierarchical Control. https://artint.info/3e/html/ArtInt3e.Ch2.html

3. Taipala, D. (2014, September 12). CS 4408 artificial intelligence unit 2 lecture 1 [Video]. YouTube.

4. Taipala, D. (2014, September 12). CS 4408 artificial intelligence unit 2 lecture 2 [Video]. YouTube.