6. Paging and Physical Memory¶

Notes¶

Convert Decimal to Binary¶

- divide the number by 2 and keep the remainder, then repeat the process until the number is 0.

- Example convert 21 to 6-bit binary:

21 / 2 = 10 and 1

10 / 2 = 5 and 0

5 / 2 = 2 and 1

2 / 2 = 1 and 0

1 / 2 = 0 and 1

- construct the binary number from the remainder in reverse order (the first reminder is on the right)

1 0 1 0 1

- fill up the remaining bits with 0s to make it n bits (6 bits in this case)

0 1 0 1 0 1

- final result:

21 = 010101

Convert Binary to Decimal¶

- start from the right, multiply each bit by 2^i, where i is the position of the bit (starting from 0 on the right).

- Example convert 0101 to decimal:

0101 = 1 * 2^0 + 0 * 2^1 + 1 * 2^2 + 0 * 2^3 = 5

18. Paging 1¶

- There are two approaches to memory management: segmentation and paging.

- Segmentation:

- chopping up the virtual address space into segments with variable sizes.

- When dividing things into different-sized segments, the space itself becomes fragmented, and memory allocation becomes challenging.

- Paging:

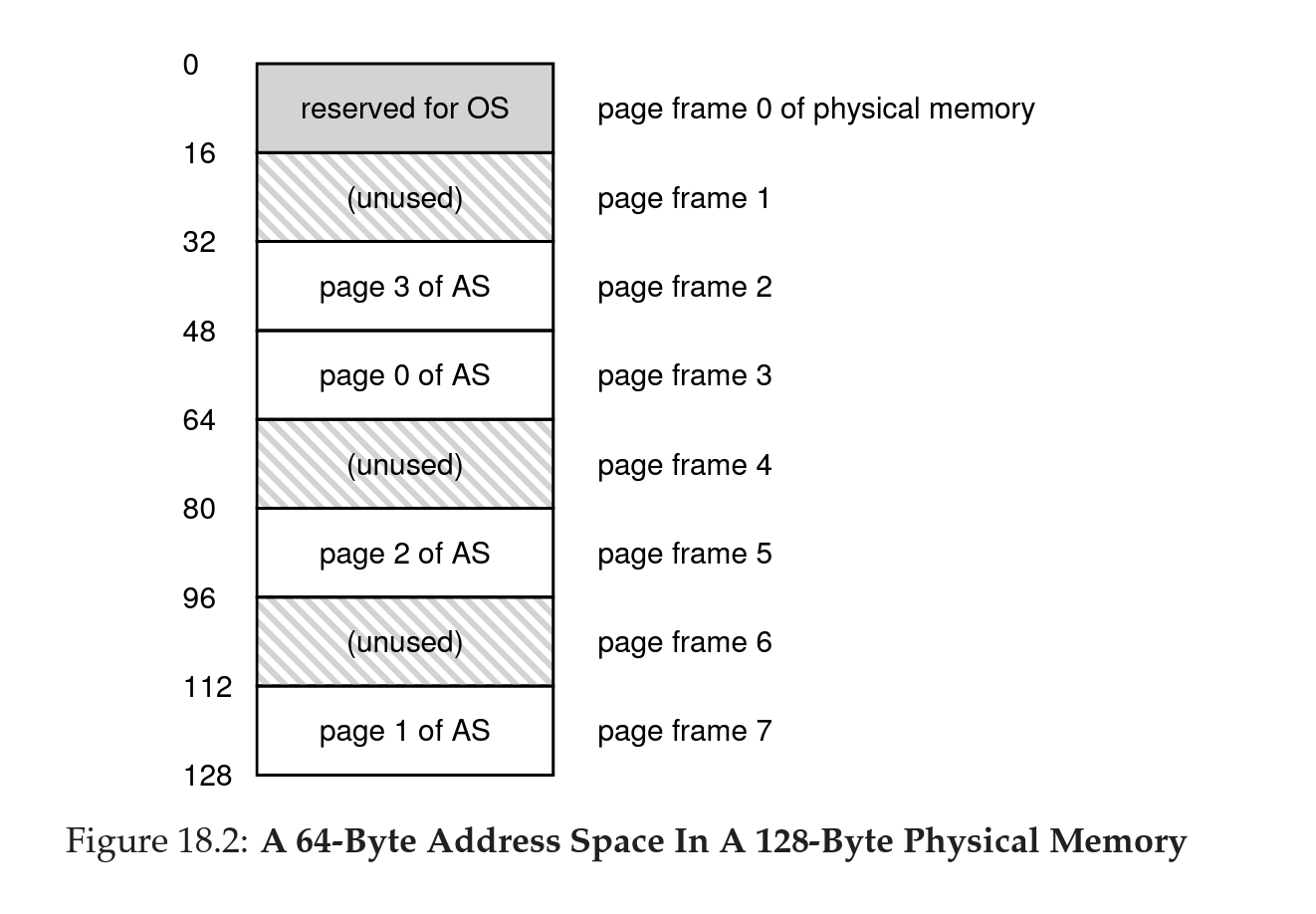

- chopping up address space into fixed-size pages or frames of pages.

- Virtual address space is divided into pages of equal size.

- Physical memory is divided into frames of equal size.

- Process address space is divided into non-contiguous fixed-size pages (the pages of a single process can be scattered across the physical memory).

- Advantages of pages:

- flexibility. managing the process address space becomes easier, regardless of how the process is using the address space.

- simplicity. the hardware can be designed to be simpler, when a new page is needed, the hardware can simply allocate a new page from the free list (keeps track of all free pages of the memory).

Page table¶

- Page table. is a per-process data structure that manages virtual-to-physical address translations, mapping virtual page numbers to physical frame numbers.

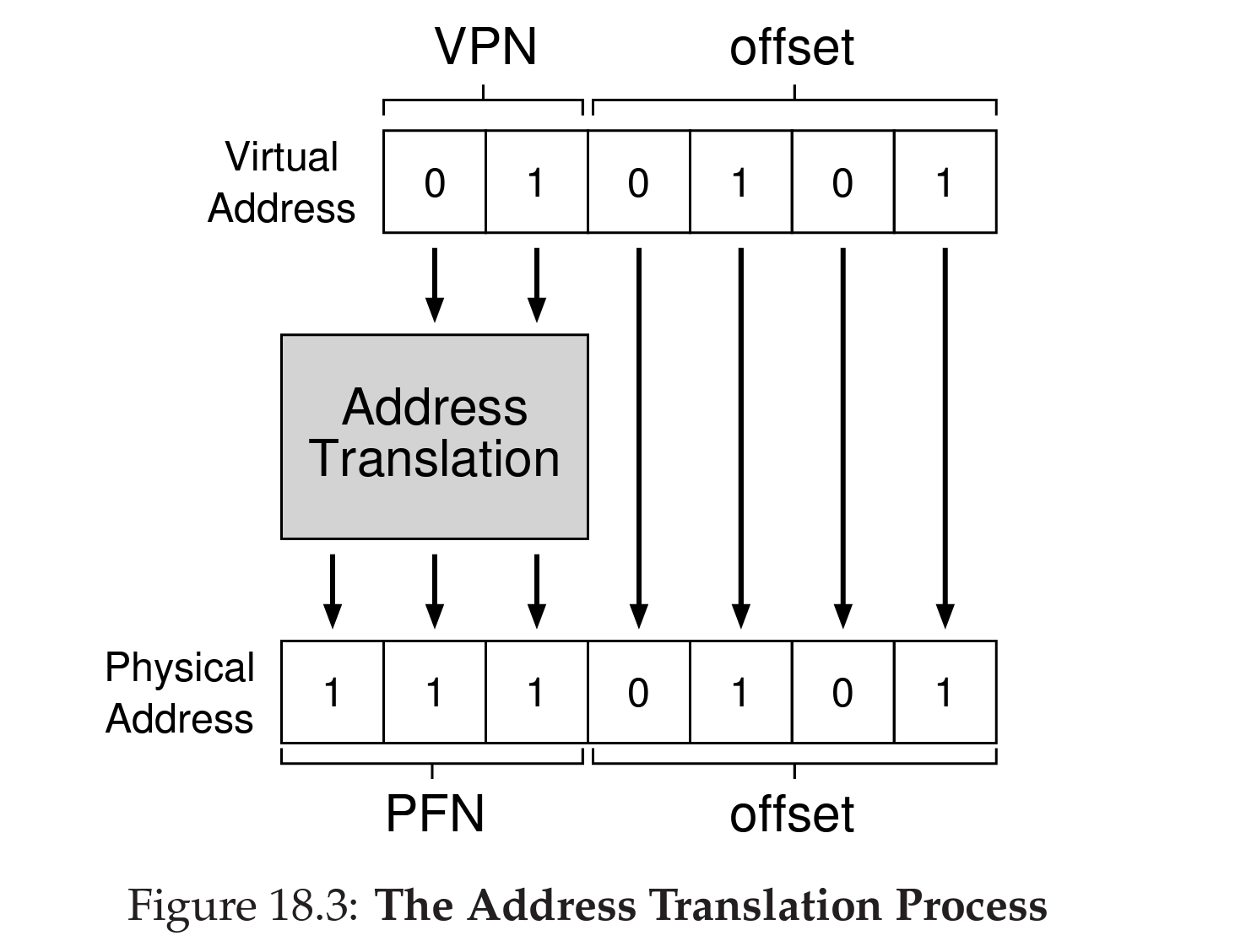

- address translation requires us to convert the virtual address to a virtual page number and an offset.

- PFN (physical frame number) = PPN (physical page number) = absolute address of the page in the physical memory.

- The offset stays as it is.

- PTE (page table entry) requires 4 bytes to store data on a 32-bit machine, that’s 1MB for each 1KB page.

- Page tables are stored in the main memory (for now).

-

Forms of Page table:

- linear page table. a single array of PTEs, indexed by the virtual page number. as

PTE[]wherePTES[VPN] = { validBit, PPN }.

- linear page table. a single array of PTEs, indexed by the virtual page number. as

-

Contents of PTE:

- valid bit:

- indicates whether the page is valid or not.

- helps in preventing accidental access to the wrong memory addresses.

- When a process starts, all pages are invalid, and the OS will load the pages into the memory as needed.

- Protection bits:

- organize the access rights of the page (read, write, execute).

- Prevents the process from accessing the memory it is not supposed to access.

- present bit:

- indicates whether the page is in the physical memory or not (in the swap space, aka. disk).

- When the page is not in the memory, the OS will load the page into the memory as needed.

- dirty bit:

- indicates if the page has been modified or not (since the last time it was loaded into the memory).

- reference bit:

- Aka. accessed bit.

- Indicates if the page has been referenced or not (accessed since the last time it was loaded into memory).

- Important during page replacement; to check if the page is being used or not.

- valid bit:

19. Paging: Faster Translations (TLBs) 2¶

- Paging - (chopping up the address space into a lot of pages of equal size) requires a large amount of mapping information and adds high overhead to the address translation process which reduces performance.

- A faster approach is to use a Translation Lookaside Buffer (TLB) which is part of the MMU (memory management unit).

- TLB - (aka, Address Translation Cache), is a small cache that stores the most recently used page table entries. Upon virtual address translation, the TLB is checked first, if the entry is not found, the TLB will miss and the page table will be checked. If the entry is found, the TLB will hit and the translation is done quickly saving the slow process of searching the page table.

- Hit rate - the number of TLB hits divided by the total number of address translations.

- spatial locality- Things are physically close to each other in memory (e.g. sequential access to an array).

- Temporal locality - Things are accessed again and again over a short time (e.g. a loop that accesses the same array element over and over again).

- TLB management:

- TLB is managed by the hardware. Intel x86 and other CISC (complex instructions sets computers); where the base of the page table is stored in the CR3 register, and the CR3 register is stored in the TLB.

- TLB is managed by the OS. more modern computers (SPARC v9) and RISC (reduced instruction set computers); where the OS will manage the TLB entries. On a TLB miss, the hardware raises an interrupt to the OS, The OS trap handler will search the page table and update the TLB entry.

- TLB miss trap handler differs from regular trap handlers:

- Regular trap handlers return the control to the next instruction after the instruction that caused the trap. TLB miss trap handlers return the control to the instruction that caused the trap. Thus a different PC value needs to be saved in both cases.

- TLB miss trap handlers must deal with the case of an infinite chain of TLB misses caused by infinite recursion (The trap handler fails, and returns the control to the same instruction that will fail again).

- TLB Entry contents:

- VPN (virtual page number).

- PFN (page frame number).

- Other bits (valid, protection, present, dirty).

- Reference bit. Address Space Identifier (ASID). (used to distinguish between different processes). = PID (process ID).

- Invalidating the TLB cache uses approaches like a random replacement or least recently used (LRU).

20. Paging: Smaller Tables 3¶

- Page tables can grow very large, and the larger the page table, the more time it takes to search it.

- Suppose we have 32-bit address space, and 4KB (2^12) pages, then we have 2^32 / 2^12 = 2^20 pages, which is 1 million pages. If each page table entry is 4 bytes, then the page table will be 4MB; if we run 100 processes, then the page table will be 400 MB.

- Techniques used to make page table size smaller:

- Bigger page sizes.

- Use Paging and Segmentation together.

- Multi-level Page Tables

Bigger Pages¶

- Let’s take the 32-bit address space (2^32), and change the page size to 16KB (2^14). Now we have 2^32 / 2^14 = 2^18 pages, which is 256K pages. If each page table entry is 4 bytes, then the page table will be 1MB; if we run 100 processes, then the page table will be 100 MB (4 times smaller).

- Larger page sizes may increase internal fragmentation (a page may only use a few bits of the 16KB page).

- Most systems use 4KB or 8KB pages.

- The virtual address will consist of 2 parts:

- Page number (20 bits).

- Offset (12 bits).

Paging and Segmentation together¶

- Instead of having a single page table for the entire address space of a process, we can have a page table for each segment.

- If the process is divided into 3 segments (code, stack, heap) we would have 3 tables for it (code table, stack table, heap table).

- The segment table will contain the base address of each segment; and the segment size.

- The Virtual address will consist of 3 parts:

- Segment number (2 bits).

- Page number (18 bits).

- Offset (12 bits).

- Each table is smaller than the entire page table, and the search time is reduced.

Multi-level Page Tables¶

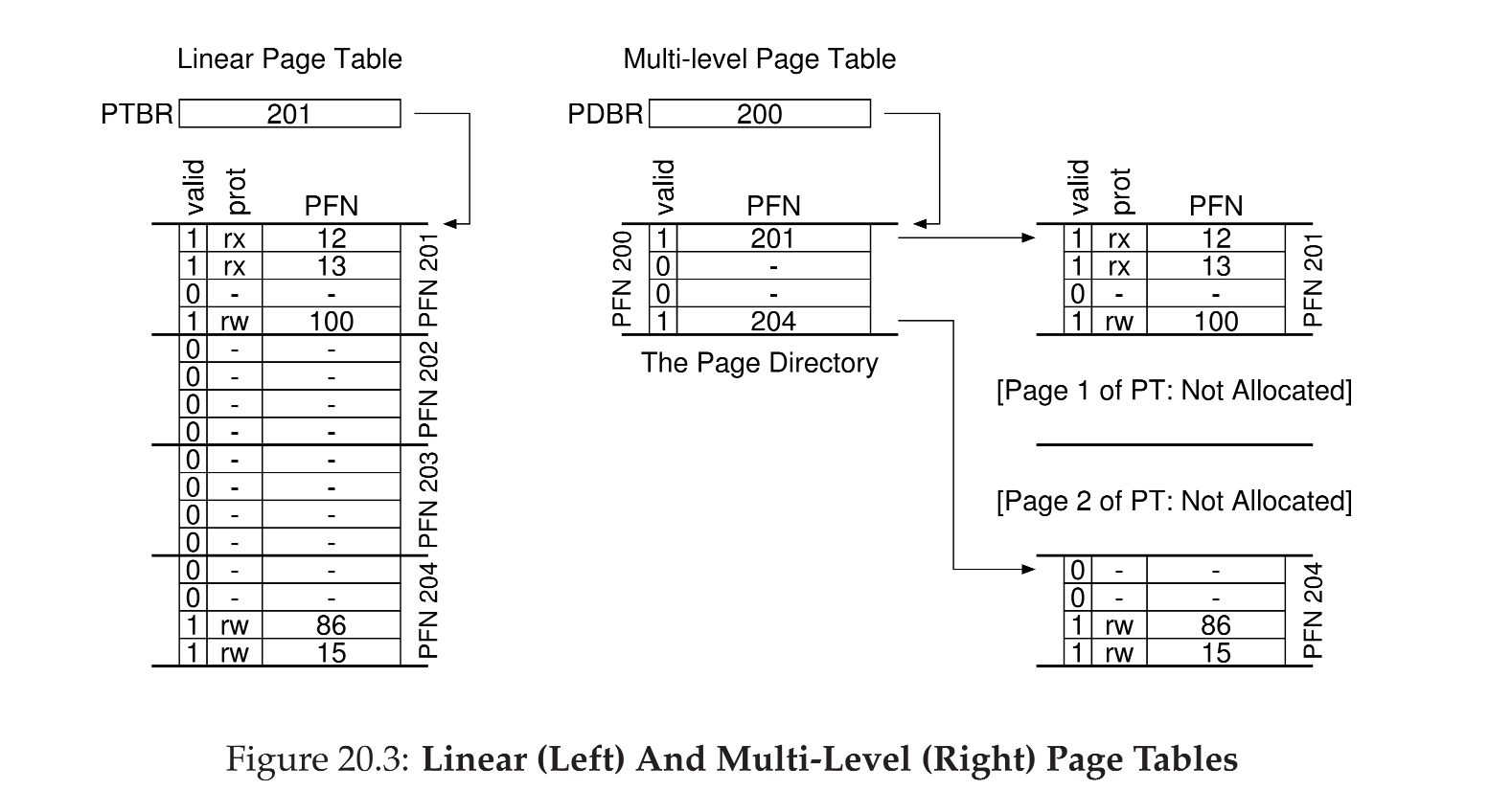

- Used in most modern systems.

- Uses a new data structure called Page Directory; which divides the page tables into smaller sets, and ignores all invalid (not allocated) pages.

- Page Directory:

- A simple two-level page table contains one entry per page of the page table.

- It consists of a number of page directory entries (PDEs).

- Advantages:

- Smaller page tables.

- Faster address translation.

- Support for sparse address spaces.

- The page directory itself does not need to be contiguous, which solves the issue of finding a large contiguous chunk of memory for the page table.

- Disadvantages:

- We reduced the space used by the page table, but this may increase the time needed to search the page directory in case of a TLB miss.

- TLB miss costs more than a linear page table miss.

- The page-bale lookups become more complex.

- The virtual address will consist of 3 parts:

- Page directory index (10 bits).

- Page table index (10 bits).

- Offset (12 bits).

- The lookup will use the page directory index to find the page directory entry, and the page table index to find the page table entry.

- If the page directory entry is invalid, then the page table is not allocated, and the page fault will be raised.

- The exception handler will allocate the page table, and update the page directory entry.

- More complex multi-level page directories can be used, and he page directory index can be divided to handle more levels, where the virtual address will like this:

[level 1 index][level 2 index]...[level n index][page table index][offset]

//|................15 bits....................|.......10 bits....|.. 7bits|

Inverted Page Tables¶

- A single page table that has an entry for each physical page of the system, each PTE entry has PID field.

Swapping the Page Tables to Disk¶

- We assumed that all page tables are saved in kernel-owned physical memory.

- Page tables can also be swapped out to kernel owned virtual memory (aka. kernel swap space).

¶

¶

21. Beyond Physical Memory: Mechanisms 4¶

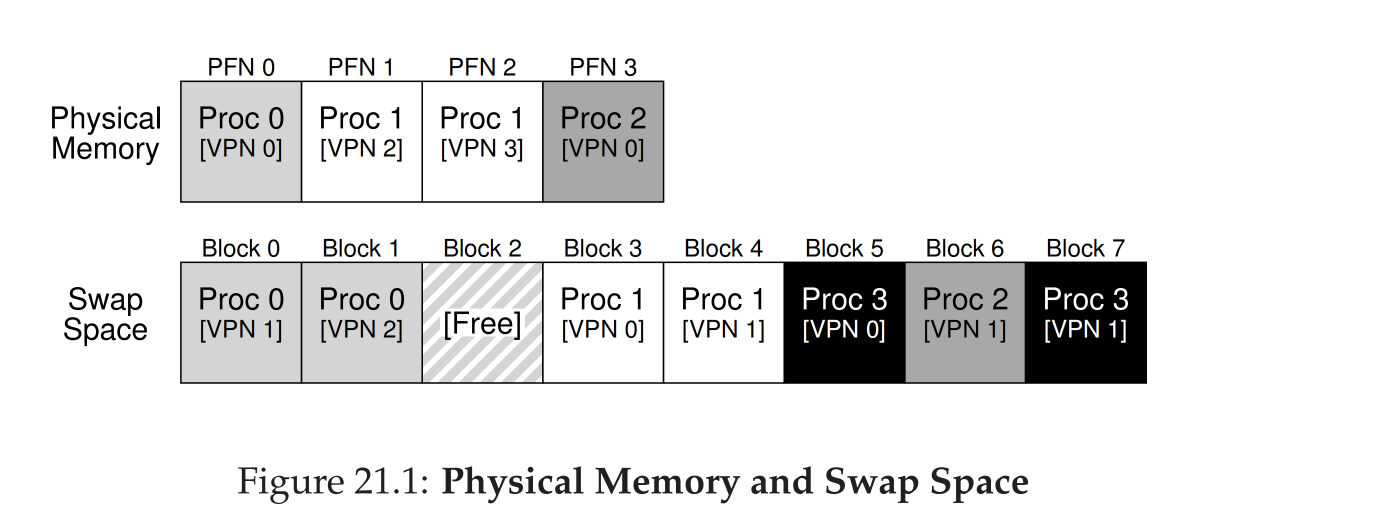

Swap Space¶

- Swap space - is some disk space reserved for swapping pages out of memory.

The Present Bit¶

- Present Bit - is a part of the PTE that indicates whether the page is in memory or not. If Present Bit is 1, the page is in memory and can be locked up using the VPN.

- If Present Bit is 0, the page is not in memory and must be swapped in, and the hardware must raise a page fault and the page fault handler will be called.

- Page Fault Handler:

- It is a part of the OS; even in hardware managed TLBs, the OS page fault handler is called.

- The handler looks in the PTE to find the page address in the swap space (disk) and swaps it into memory.

- After swapping in, the page table is updated with the new memory address of the page and the present bit is set to 1.

- The page fault handler returns to the instruction that caused the page fault, and the instruction is re-executed.

- With the new execution, it will generate a TLB miss, but the page is now in memory and the page fault will not occur again.

- Page os looked up and TLB is updated with the new page address and the VPN.

- When the page fault handler tries to swap in a page, if the memory is full, it must swap out another page to make room for the new page. This called page replacement policy.

Page Replacement Policies¶

- Page Replacement starts when:

- Passively - when the page fault handler tries to swap in a page, but the memory is full.

- Actively- OS tries to keep certain amount of physical memory free for future use.

- Active Page replacement:

- OS uses high watermark HM and low watermark LM to determine when to start page replacement.

- When Os detects that memory has less than LM free pages, it starts page replacement (starts swap daemon or page daemon).

- Swap daemon is a process that runs in the background and swaps pages out of memory.

- Swap daemon swaps pages out of memory until the memory has more than HM free pages.

22. Beyond Physical Memory: Policies 5¶

- In theory, the main memory (physical RAM) is a cache for the virtual memory (disk).

- Cache hit happens when the page is in memory (less expensive), and cache miss happens when the page is not in memory, and the page fault handler is called, and the page is swapped in (more expensive).

- Average memory access time (AMAT) =

Tm + (Pmiss * Td)where:- Tm is the time to access the memory (cache hit) = the cost of accessing the memory.

- Pmiss is the probability of a cache miss = the probability that the page is not in memory, but in the disk = miss rate (%).

- Td is the time to access the disk (cache miss) = the cost of accessing the disk.

- Memory access cost is nanoseconds, and disk access cost is milliseconds, decreasing miss rate will have a huge impact on the AMAT.

- The page replacement policy has a huge impact on the miss rate, so an optimal page replacement policy will have the lowest AMAT; and has a huge impact on the performance of the system.

The optimal page replacement policy¶

- Developed by Belady in 1966, and he called it MIN.

- The optimal page replacement leads to the lowest miss rate (hence the maximum hit rate, and the lowest AMAT), by swapping out the pages that will be needed furthers in the future. This is the optimal policy, but it is not practical.

- If we know the future, we can use the optimal policy, but we don’t know the future, so we can’t use the optimal policy.

- The optimal policy is useful as reference of the performance of other policies.

Cache misses types¶

- The three-cs: cold, capacity, conflict.

- Compulsory miss - this miss can not be avoided, since data is not populated in the cache yet (eg. first time accessing a page), and it is called cold miss.

- Capacity miss - this miss can be avoided by increasing the cache size, and it is called capacity miss, and it is due to the fact that the cache is full, and we can not put more pages in it.

- Conflict miss - happens when there are restriction on putting/accessing pages in the cache; this can not be avoided and the original value must be found.

FIFO page replacement policy¶

- The first page swapped in is the first page swapped out (evicted).

- This has a high miss rate, and it is not practical.

- Increasing the cache size will not help, since the cost of accessing the larger cache size is also higher.

Random page replacement policy¶

- The page to be swapped out is chosen randomly.

- This has a high miss rate, and it is not practical.

- It depends on luck, sometimes it performs well, and sometimes it performs poorly.

- Increasing cache size also will not help.

Least Recently Used (LRU) page replacement policy¶

- It is the most popular page replacement policy.

- LRU uses history to determine which page to swap out, it swaps out the least recently used page.

- If you just accessed a page, it is more likely that you will access it again in the future, so it is less likely to be swapped out.

- Some history metrics used by this algorithm:

- Frequency of access - the more frequent the access, the less likely to be swapped out.

- Recency of access - the more recent the access, the less likely to be swapped out.

- The opposite algorithms also exist:

- Most Frequently Used (MFU) - swaps out the most frequently used page.

- Most Recently Used (MRU) - swaps out the most recently used page.

23. Complete Virtual Memory Systems 6¶

- We will see 2 examples:

- Modern virtual memory manager found in VAx/VMS OS developed in 1970s.

- Linux virtual memory manager.

VAX/VMS Virtual Memory¶

- The VAX-11 minicomputer architecture was introduced in the late 1970’s by Digital Equipment Corporation (DEC).

- VAX-11 has a 32-bit address space per process, 512-byte pages; and thus the virtual address has 23-bit for VPN and 9-bit for offset.

- The first 2 bits of the VPN are used for the segment number (SN), and the remaining 21 bits are used for the page number (PN).

- It is a hybrid system that uses both segmentation and paging.

- The entire memory space is divided into System Space or kernel space (S) and User Space or process space; The system space is shared between all processes.

- Each process has two segments (P0 and P1):

- P0 has the code + heap that grows down.

- P1 has the stack that grows up.

- The multi-level page table is used to map the virtual address to the physical address, where the unused bits of a segment’s pages are not indexed in the page table.

- Each process has 2 page tables, a one for each segment and are saved in the kernel memory space (S).

- The kernel space uses swapping to swap page table out of its kernel to memory to the kernel swap space.

- VAX-11 also uses TLB (Translation Lookaside Buffer) to cache the page table entries.

- PTE contains: valid bit, protection field (4 bits), dirty bit, OS reserved field (5 bits), and PFN. No reference bit.

- Replacement policy: Segmented FIFO, where each process has a max number of pages in memory set by the Resident Set Size (RSS), and the pages are swapped out in a FIFO manner; Only after the process exceeds its RSS.

- Then they edited the replacement policy as: When a process P exceeds its RSS, a page is removed from its per-segment, per-process FIFO if the page is clean (not modified).

- More techniques: demand zeroing and Copy on Write (CoW).

- Demand zeroing - when a process requests a new page, the OS only adds the newly page to the process’s page table and zero it (mark some bits as zeros), however, the actual page allocation is

delayeduntil the next timethispage is accessed. - Copy on Write (CoW) - when a process requests to copy a page to another page, the OS only changes the references to this page, and the subsequential read requests can be mapped to the old location, however, the actual page copy is

delayeduntil the next timethispage is modified(written to). Only at this time, the OS does the actual copy.

The Linux Virtual Memory System¶

- We will study Linux for Intel x86 (most popular).

- Address space is divided into kernel space and user space.

- The kernel virtual address space is divided into 2 parts:

- Kernel Logical addresses where most kernel data structures (like page tables, per process kernel stacks) live; this portions can not be swapped out. This logical address space is mapped to the kernel physical address space eliminating the need for translation, and also contiguous.

- Kernel Virtual Address which is not contiguous and can be swapped out. It is used to allow the kernel to address more than 1GB of memory (this was the limit earlier).

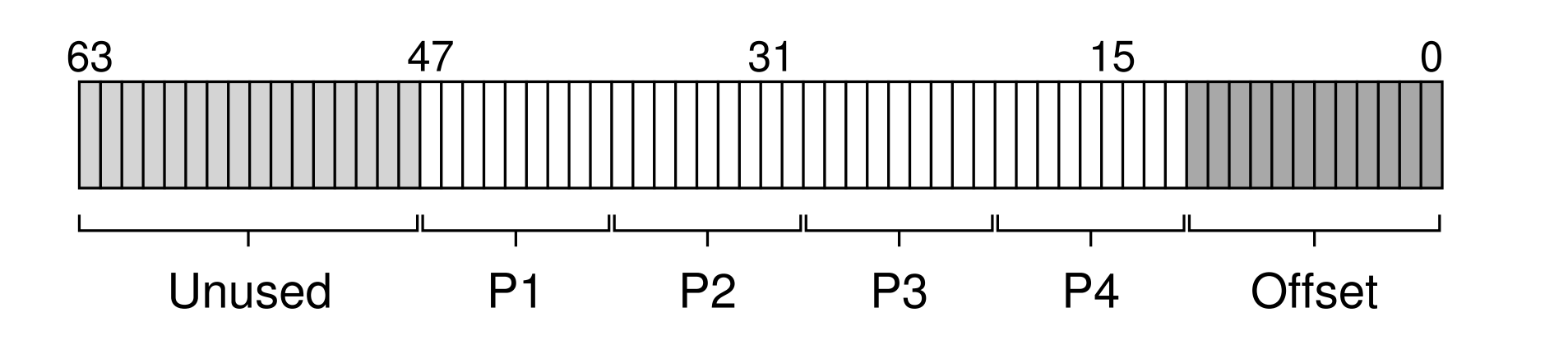

- Linux uses Hardware managed multi-level pre-process page table structure. The page table has 4 levels and it uses only 48 bits of the 64-bit virtual address.

- Page size is 4KB.

- Recent Linux variations support Huge pages (2MB or 1GB) to reduce the number of page table entries.

- Linux uses 2Q page replacement policy. Which is a modified version of LRU that keeps track pages into 2 active/inactive lists.

What is swap memory in Linux 7¶

- swap memory is the dedicated amount of hard drive that is used whenever RAM runs out of memory.

- Paging in windows = Swapping in Linux.

- Types of Swap memory:

- Swap partition. is a dedicated partition on the hard drive that is used for swapping.

- Swap file. is a file on the hard drive that is used for swapping.

- Linux allows for manually setting the swap rate (how frequently the system will swap data to the hard drive) between 0 and 100. default is 60.

- During the process of hibernation, all the contents of RAM are written on the swap memory. Therefore, it is essentially required for the hibernation process to take place successfully.

Linux Kernel Concept overview 8¶

- All definitions are here

- Huge Pages. are page tables for processes that are very large. Different data structures are used for address translation for huge pages such as HugeTLB and Transparent HugeTLB.

- Zones. Partial spaces of the physical memory, are usually grouped by access requirements such as ZONE_DMA (direct memory access), ZONE_NORMAL, and ZONE_HIGHMEM (high memory).

- Nodes: Partial spaces of the physical memory, are usually grouped by physical proximity to the CPU. Each node has its own memory management subsystem. that is, each node has its own set of zones, page tables, and other data structures.

- NUMA (Non-Uniform Memory Access): is a memory architecture that allows different nodes to have different access times to the memory. This is usually the case when the memory is distributed across multiple CPUs.

- Page cache. is a cache of pages that are used to store the contents of files that are currently in use. The page cache is used to speed up access to files.

- Anonymous memory. is a memory that is not associated with any file. It is used to store the contents of the heap and stack.

- Reclaimable memory. is a memory that can be reclaimed by the kernel. It is when the page (memory chunk) has a copy of the contents somewhere else or is not currently in use. examples:

- A Page cache. where the file (cached by this page) is not currently in use.

- An Anonymous memory. where the memory is not currently in use.

- Compaction. is a process of moving the contents of the memory to a different location in the memory to avoid fragmentation and concentrate the free memory in one block at the end.

- OOM Killer. When the system runs out of memory, the OOM killer is invoked to kill the process that is using the most memory. The OOM killer is the last resort to prevent the system from crashing.

Unix file system 9¶

- File. is a collection of data items stored on a disk. It is the last object in the file system tree.

ls -lis a command to list details about files and folders, and the result:

drwxr-xr-x 3 root root 4096 Apr 4 2018 acpi

-rw-r--r-- 1 root root 3028 Apr 4 2018 adduser.conf

| file mode | number of references | owner | group | size | last modified | file name |

|---|---|---|---|---|---|---|

| drwxr-xr-x | 3 | root | root | 4096 | Apr 4 2018 | acpi |

| -rw-r–r– | 1 | root | root | 3028 | Apr 4 2018 | adduser.conf |

- File mode. is a 10-character string that describes the file type and permissions.

drwxr-xr-x:- group 1:

dis the file type,dmeans directory. - group 2:

rwxis the permission for the owner. - group 3:

r-xis the permission for the group. - group 4:

r-xis the permission for the others.

- group 1:

- File type:

-is a regular file.dis a directory.lis a symbolic link.bis a block device.cis a character device.sis a socket.pis a named pipe.

- File permission:

ris read permission.wis write permission.xis execute permission.-is no permission.

- File system types supported by Linux:

- Ext2. is the original Linux file system.

- Ext3. is Ext2 with journalling. supports POSIX ACLs (access control lists).

- IsoFs. is the file system used for CD-ROMs.

- Proc. is a virtual file system that provides information about processes, devices, and other system resources.

- NFS. is the file system used for network file systems.

- NFTS. is the file system used for Windows NT.

- To find info about the file system type, use

df -Tormountcommands.

References¶

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: three easy pieces (1.01 ed.). Arpaci-Dusseau Books. Retrieved June 16, 2022, from https://pages.cs.wisc.edu/~remzi/OSTEP/. Chapter 18. ↩

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: three easy pieces (1.01 ed.). Arpaci-Dusseau Books. Retrieved June 16, 2022, from https://pages.cs.wisc.edu/~remzi/OSTEP/. Chapter 19: Paging: Faster Translations (TLBs). ↩

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: three easy pieces (1.01 ed.). Arpaci-Dusseau Books. Retrieved June 16, 2022, from https://pages.cs.wisc.edu/~remzi/OSTEP/. Chapter 20: Paging: Smaller Tables. ↩

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: three easy pieces (1.01 ed.). Arpaci-Dusseau Books. Retrieved June 16, 2022, from https://pages.cs.wisc.edu/~remzi/OSTEP/. Chapter 21: Beyond Physical Memory: Mechanisms. ↩

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: three easy pieces (1.01 ed.). Arpaci-Dusseau Books. Retrieved June 16, 2022, from https://pages.cs.wisc.edu/~remzi/OSTEP/. Chapter 22: Beyond Physical Memory: Policies. ↩

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: three easy pieces (1.01 ed.). Arpaci-Dusseau Books. Retrieved June 16, 2022, from https://pages.cs.wisc.edu/~remzi/OSTEP/. Chapter 23: Complete Virtual Memory Systems. ↩

-

Buzdar, K. (2020). What is swap memory in linux? Linux Hint. https://linuxhint.com/swap_memory_linux/ ↩

-

Concepts overview. (n.d.). The Linux Kernel. https://www.kernel.org/doc/html/v5.7/admin-guide/mm/concepts.html ↩

-

Gite, V. (2018, December 23). Understanding UNIX / linux file systems. Cyberciti. https://www.cyberciti.biz/tips/understanding-unixlinux-file-system-part-i.html ↩