Elements of Programming Languages¶

1 Lecture¶

Backus Naur Form (BNF)¶

- John Backus (inventor of Fortran) and Peter Naur defined the BNF notation in 1959.

- BNF is a formal mathematical way to describe the syntax of a programming language, thus defines a way to validate the structure of programming language.

- EBNF is an extension of the BNF allowed for more compact and readable notation.

- Some symbols in BNF:

?- zero or one (optional).*- zero or more (any number, can be skipped).+- one or more (at least one).()- grouping elements together so they can be treated as a single token..- one and only one (exactly one).~- not (negation), the element cannot be present...- range of values.

- Example:

INT: '0'..'9'+;which means thatINTis a sequence of one or more digits (0-9). - The language in BNF has the following components:

- Terminal symbols - the vocabulary of the language, the smallest symbols that form its sentences. terminal because they can not be substituted by other symbols. example:

if, ==. - Non-terminal symbols - symbols that can be defined in terms of other symbols. example:

expression, statement, condition. - Production rules - define how non-terminal symbols can be defined in terms of other symbols. example: .

- Start symbol - the symbol that defines the entire language.

- Terminal symbols - the vocabulary of the language, the smallest symbols that form its sentences. terminal because they can not be substituted by other symbols. example:

- The syntactic equations of the EBNF generate a Context-Free Grammar (CFG).

- According to Avram Noam Chomsky, Context-Free means that the left-hand side of the production rule can be replaced by the right-hand side regardless of the context in which it appears.

Compliers and Interpreters¶

- Both Complier and Interpreter are programs that translate a program written in a high-level language into a low-level language.

- The Interpreter translates the program line by line every time the program is executed, while the Complier translates the program only once and then executes the translated program as many times as needed.

- Analogues:

- Interpreter: real-time interpreter in a conversation between two people (the translation is done every time).

- Complier: translating a book from one language to another and then printing the translated book many many times (the translation is done only once).

- Some compliers/interpreters do not produce machine code directly, instead they produce an intermediate code that is then translated into machine code by another compiler/interpreter.

- Some Compliers do single pass over the code, while others do multiple passes.

- The fundamental steps are the same between the compliers/interpreters, but the order of the steps may vary or the details of work within each step may vary depending on the language.

- There are 4 steps in Compilation/Interpretation process: Lexical Analysis, Syntax Analysis, Type Checking, Code Generation:

- Lexical Analysis:

- The input is a sequence of characters, that is, the content of the source code file as text.

- The input is divided into tokens (words) that are separated by spaces.

- The output is a sequence of tokens.

- Syntax Analysis:

- The input is a sequence of tokens.

- The input is validated according to the language rule sets (BNF or EBNF compatible rules).

- Two available approaches: Top-Down and Bottom-Up analysis.

- A parse tree is a data structure that represents the syntactic structure of the program.

- The output is a an abstract syntax tree (AST).

- Type Checking: (part of the semantic analysis)

- The input is the syntax tree.

- Extra validation is done to ensure that the types of the variables are correct.

- There are different approaches to type checking: Static and Dynamic.

- The output is a Annotated AST.

- Other semantic analysis tasks include: scope checking and name binding, Access checks e.g. private? public?, etc.

- Code Generation:

- The input is a typed parse tree.

- Tokens in the syntax tree are translated into the target language’s instructions.

- The generated code is machine code or intermediate code and may go through additional steps before being executed, like optimization.

- The final output is machine code that can be executed by the machine.

- Lexical Analysis:

Type Checking¶

- Type is a meta data for a memory location that classifies that the kind of data stored in that memory location.

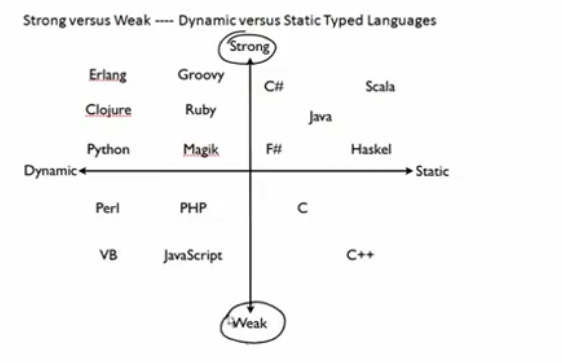

- Strong vs Weak typing:

- Strong typing prevents doing operations on mismatched types without explicit conversion.

- Weak typing may allow for implicit conversion between types when doing operations on mismatched types. .e.g

1 + "1" = 2or"1" + 1 = "11".

- Static vs Dynamic typing:

- Static typing checks the types of variables at compile time, that is, the type of each variable must be declared before variables are used or assigned values.

- Dynamic typing checks the types of variables at run time.

- Type Inference is a feature of some programming languages that allows the type of a variable to be inferred from the context in which it is used, e.g.

var x = 1will infer thatxis an integer orif (x) { ... }will infer thatxis a boolean. - Hindley-Milner is a type inference algorithm that can infer the most general type of a function without any type annotations.

Virtual Machines Model (VM)¶

- The VM model is a model that allows for the execution of programs on an emulated machine.

- A hypervisor is a program that controls access of an operating system to the hardware resources of the machine.

- Types of VMs:

- Type1:

- The hypervisor is global, that is, it is the first program that runs on the machine before the operating system.

- Controls the access of the host OS to the hardware.

- Example: VMWare, Xen, Hyper-V.

- Type2:

- The hypervisor is local, that is, it is a program that runs on the operating system.

- Controls the access of the guest OS to the Host OS, and then to the hardware.

- Example: Oracle VirtualBox.

- Type1:

- Java Virtual Machine (JVM):

- It creates an instance of an emulated machine that can execute Java programs called Java Runtime Environment (JRE).

- Java programs are compiled (using JavaC compiler) into bytecode that can be executed by the JVM.

Context-Free Grammars (CFG)¶

- A CFG is a set of production rules that define the syntax of a language.

- The general rule is

LSH -> RHSwhereLSH(left hand symbol) is a non-terminal symbol andRHS(Right Hand Symbol(s)) is a sequence of terminal and non-terminal symbols. - Example rule:

while-statement -> 'while' expression 'do' statement-list 'end;'. - Derivation:

- It is the process of applying the production rules to a set of tokens to detect if they are legal or not.

- We start with a non terminal called the start symbol that represents the LHS.

- We start deriving the RHS either from the left or from the right.

- In Top-Down derivation, we start from the start symbol and derive the RHS from the left, also called LL Parsing.

- In Bottom-Up derivation, we start from the RHS and derive the LHS from the right, also called LR Parsing.

2 Programming Environments¶

- A Language is a set of rules for writing programs. The programs as sequences of symbols that conform to the rules of the language.

- Programming environment: is the program and the set of tools that transform the program into an executable program.

- Programming environment components:

- Editor: is a program that allows the programmer to enter the program source code.

- Compiler: is a program that translates the source code into machine code.

- Librarian: is a program that manages the libraries of the program.

- Linker: is a program that links the libraries to the program to form an executable program.

- Loader: is a program that loads the executable program into memory.

- Debugger: is a program that allows the programmer to execute the program step by step and inspect the values of variables.

- Profiler: is a program that measures the time spent within each component of the program.

- Testing Tools: are programs that test the program for correctness (usually automated).

- Configuration Tools: are programs that manage the versions of the program.

- Interpreter: is a program that executes the program directly without the need for compilation.

- Integrated Development Environment (IDE): is a program that integrates all the components of the programming environment into one program.

Compiler¶

- When saying language 1 is more efficient than a language 2, means that:

- The compiler of Language 1 emits more efficient code than the compiler of Language 2.

- The constructs of Language 1 may be easier to compile than the constructs of Language 2.

- Components of a compiler:

- Front end that accepts the source code and includes:

- Lexical Analyzer: that is responsible for the lexical analysis.

- Syntax Analyzer: that is responsible for the lexical analysis and parsing.

- Semantic Analyzer: that is responsible for the semantic analysis.

- The output is an Intermediate Representation (IR).

- FrontEnd is language dependent.

- Backend that accepts the IR and:

- Emits the machine code specific to the target machine.

- Backend is machine dependent.

- Compiler vendors usually generates different backends for different machine architectures.

- Or can use a specific IR compiler, and FrontEnd generate IR that is compatible with this compiler.

- Other backend parts: Optimizer, that can do general optimizations, computer specific optimizations, Peephole optimizations.

- Optimizers are error prone, they can make incorrect assumptions about the program and generate incorrect code.

- If the compiler is to optimize the code, all tests must be done on the optimized code, not the original code.

- Front end that accepts the source code and includes:

Librarian¶

- A library is a collection of pre-compiled code that can be linked to the program, may be written in one or more files (modules).

- The essential set of procedures needed for initialization, memory management, expression evaluation, etc., is called the run-time system.

Linker¶

- The linker is a program that links the libraries to the program to form an executable program.

- Each module/external library have some exported symbols (functions, variables, etc.) that can be used by other modules.

- When an an exported symbol is used, the linker must locate the module that contains the symbol and link it to the program.

- If the program contains large number of small modules, compiling becomes faster, but linking is relative to the number of modules so it becomes slower and a bottleneck.

- To avoid extensive linking time, proposed solutions include:

- Dynamic linking: the linker links the program to the libraries at run-time, not compile-time.

- Subsystem linking: group up modules into larger subsystems, and link the program to the subsystems instead of the modules.

- Dynamic loading: the linker loads the modules into memory only when they are needed, not at compile-time.

Loader¶

- The loader is a program that loads the executable program into memory and initializes the run-time system.

- It used to be a complex process when the memory was addressed directly, but now it is a simpler process because of virtual memory.

- Where the loader loads the program at an address relative to the starting memory address of the program.

Debugger¶

- Functions of debugger:

- Tracing: precisely control the execution of the program and execute it step by step.

- Breakpoints: allows the programmer to stop the execution of the program at a specific point or watchpoint which tracks a memory location and stops the program when it is accessed.

- Examine/modify Data: allows the programmer to examine the values of variables and modify them.

- Symbolic Debuggers refers to variables by their symbols in the code rather than their memory addresses which requires the work of the compiler and linker to create a symbol table that maps the symbols to their memory addresses.

Profiler¶

- A profiler is a program that measures the time spent within each component of the program.

- Most attempts to improve performance achieves nothing,so it is important to use profiler to identify the bottlenecks in the program and make sure that your optimization efforts are focused on the right places.

Testing tools¶

- Regression testing: is the process of testing the program after each change to make sure that the change did not introduce any errors.

Configuration tools¶

- Version control: is the process of managing the different versions of the program.

- Make: is a program that manages the compilation of the program using little efforts by caching the results of previous compilations and only recompiling the parts that have changed.

Interpreter¶

- An interpreter is a program that executes the program directly without the need for compilation.

- Interpreter advantages:

- It does not require compilation, so it is faster to start.

- No need to the set of complex tools that are needed for compilation (compiler, linker, loader, etc.).

- Easy to write as the generated programs are not machine dependent as they run on directly on the host machine.

- Does not do any optimizations, so it is easier to debug.

- Interpreted Languages: Prolog and Pascal.

3 Compiler Construction¶

1. Introduction¶

- Learning how to build a compiler is a good way to learn about programming languages.

- Two restrictions:

- The source language must be small but have the fundamental features of a programming language. A subset of Oberon is used.

- The target computer should be simple and uses the RISC architecture.

- We don’t care about deep optimizations.

- The first compiler was for Fortran in 1956 which took 18 person-years to develop.

- The translation process essentially consists of the following parts:

- Lexical Analysis: the source program is divided into tokens.

- Syntax Analysis (Parsing): the tokens are grouped into syntactic structures, called Abstract Syntax Trees (AST).

- Type Checking: the AST then checked for type correctness.

- Code Generation: the AST is translated into machine code or IR code.

- The compiler is divided into two parts:

- Frontend: that includes the first three parts of the translation process.

- Backend: that includes the last part of the translation process (code generation and optimizations).

- The division of the complier helped in decoupling the frontend from the backend, so that the frontend can be used for different languages and the backend can be used for different machines; which lead to the development of multiple frontend(s) for the same backend and vice versa.

2. Language and Syntax¶

- E,T,F, and V stands for Expression, Term, Factor, and Variable respectively.

- The language hs the following components:

- Terminal Symbols: atomic symbols that cannot be divided into smaller symbols.

- Non-terminal Symbols: symbols that can be divided into smaller terminal or non-terminal symbols.

- Syntactic Equations or production rules: rules that define the syntax of the language.

- The start symbol: the symbol that represents the whole program.

-

The BNF grammar for the language is as follows:

syntax = production syntax | ∅. production = identifier "=" expression "." . expression = term | expression "|" term. term = factor | term factor. factor = identifier | string. identifier = letter | identifier letter | identifier digit. string = stringhead """. stringhead = """ | stringhead character. letter = "A" | ... | "Z". digit = "0" | ... | "9". -

The idea of defining languages and their grammar with mathematical precision goes back to N. Chomsky.

3. Regular Languages¶

- The term Context free created by Chomsky, and it describes the assignment statement as RHS can always be replaced by the derivation of the LHS, and for symbols in LHS that are not variables, they can be replaced by any string of symbols according to the grammar of the language.

- Difference between Lexical and Syntax analysis:

| Aspect | Lexical Analysis | Syntax Analysis |

|---|---|---|

| Input | Characters | Tokens or Symbols |

| Output | Tokens | AST |

| Algorithm | Scanner | Parser |

| Syntax | Regular Language | Context Free Language |

- Regular languages can not use recursion,

4. Analysis of Context-free Languages¶

- Approaches:

- The method of Recursive Descent.

- Table-driven Top-down Parsing.

- Bottom-up Parsing.

References¶

-

UoPeople. 2023. CS 4402-01 Comparative Programming Languages - AY2023-T5. Unit 2 Video Lectures. ↩

-

Ben-Ari, M. (2006). Understanding programming languages. Weizman Institute of Science. Chapter 3: Programming Environments. ↩

-

Wirth N. (2017). Compiler Construction. https://people.inf.ethz.ch/wirth/CompilerConstruction/CompilerConstruction1.pdf ↩