8. Per-Pixel Operations

Introduction 1

- Line scanning:

- Each pixel is scanned one by one.

- Each pixel has one color.

- Pixels are lit using the line fragments that intersected the polygons within the scan line.

- This unit explores a very different way of computing and lighting the pixels of a scene, through two new approaches:

- Ray casting.

- Ray tracing.

- Ray Casting:

- We invert the typical process of lighting.

- We consider a stream of photons, or a ‘ray’ of light, that enters our eyes and strikes the retina to form a picture.

- We follow that ray back to its source: the object that reflected the light toward our eyes.

- At the source, we determine the ray's overall intensity and the intensity of each primary color that makes up that ray.

- In short, ray casting follows one ray per display pixel back to its source. The source is either the object the light reflected off or the background placed in the scene, and it determines the primary-color intensities needed to produce the image.

- The color intensity of each pixel is computed from just 1 ray. As a result, ray casting does not account well for the complex interaction of multiple light sources and the way their light reflects off the scene’s objects.

- Ray Tracing:

- It starts with rays at the pixel and traces them backward to objects and light sources.

- Unlike ray casting, it combines the contributions of multiple (perhaps many) rays per pixel, which lets it account for reflection, refraction, shadows, and multiple light sources.

- It generates a more realistic image than ray casting, but it is computationally more expensive.

- Reflection:

- It is what happens when light hits an object and bounces off it.

- The object absorbs some light frequencies and reflects others.

- This reflection of some photons, and absorption of others, is what produces color.

- Some materials (such as a mirror) reflect most if not all colors.

- The angle at which the light strikes the surface determines how it reflects off the surface.

- There are two types of reflection: specular and diffuse.

- In specular reflection, the light is reflected as it would be by a mirror.

- In diffuse reflection, the light is scattered in many directions, so the surface looks matte rather than shiny.

- Refraction:

- It is the bending of light rays as they pass through a transparent or semi-transparent material.

- Example: looking through a glass of water. Light rays move through the glass (transparent) and the water (transparent).
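The specular (mirror-like) case above can be sketched numerically. The formula used here, r = d - 2(d·n)n, is the standard mirror-reflection formula and is not stated in the notes; the function names `dot` and `reflect` and the sample vectors are assumptions made for illustration.

```python
def dot(u, v):
    """Dot product of two 3D vectors given as tuples."""
    return sum(a * b for a, b in zip(u, v))

def reflect(d, n):
    """Mirror the incoming direction d about the unit surface normal n.

    Uses r = d - 2 (d . n) n; n is assumed to have length 1.
    """
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

# A ray travelling down and to the right hits a floor whose normal points up.
incoming = (1.0, -1.0, 0.0)
normal = (0.0, 1.0, 0.0)
print(reflect(incoming, normal))  # (1.0, 1.0, 0.0)
```

The reflected ray leaves the surface at the same angle at which it arrived, which is the behavior the notes describe for a mirror.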

Basic Ray Tracing 2

- Assumptions:

- Assume fixed viewpoint (camera position).

- Assume fixed camera orientation.

- Assume the frame has fixed dimensions (Vw * Vh), and it is frontal to the camera (perpendicular to the Z-axis).

- Assume the frame is at a fixed distance from the camera (d).

- Assume Vw = Vh = d = 1. This gives a field of view (FOV) of about 53 degrees, since FOV = 2 * atan((Vw/2) / d).

- For each pixel (Cx,Cy) on the canvas, we can determine its corresponding point on the viewport (Vx,Vy,Vz).

- Vx = Cx * (Vw/Cw).

- Vy = Cy * (Vh/Ch).

- Vz = d.

- Next, we determine the color of the light passing through the point (Vx,Vy,Vz), as seen from the camera position (Ox,Oy,Oz):

- To mimic real life, we would do photon tracing: follow photons emitted from the light source as they bounce off objects until they reach the camera.

- Photon tracing is expensive, and not widely used.

- Instead, we do the opposite: ray tracing.

- We start with a ray originating from the camera and going through a point in the viewport, and see what object it hits first.

- The color would be the color of light coming from that object.

- The ray tracing algorithm:

    O = (0, 0, 0)
    for x = -Cw/2 to Cw/2 {
        for y = -Ch/2 to Ch/2 {
            D = CanvasToViewport(x, y)
            color = TraceRay(O, D, 1, inf)
            canvas.PutPixel(x, y, color)
        }
    }

- Here are the details that are missing from the algorithm:

- `CanvasToViewport(x, y)` is a function that converts canvas coordinates to viewport coordinates.

- `TraceRay(O, D, t_min, t_max)` is a function that traces a ray from the origin `O` in direction `D` and returns the color of the closest object that intersects the ray between `t_min` and `t_max`.

- `IntersectRaySphere(O, D, sphere)` is a function that returns the two values of `t` at which the ray `(O, D)` intersects the sphere `sphere`.

- `BACKGROUND_COLOR` is the color of the background.

    CanvasToViewport(x, y) {
        return (x*Vw/Cw, y*Vh/Ch, d)
    }
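As a quick check of the viewport assumptions and the mapping, here is a minimal Python sketch. The canvas size (`CW`, `CH` = 600) is a hypothetical value chosen for illustration, and `field_of_view_degrees` is a helper introduced here, not part of the source algorithm.

```python
import math

VW, VH, D = 1.0, 1.0, 1.0  # viewport width, height, and distance from the camera
CW, CH = 600, 600          # canvas size in pixels (hypothetical)

def canvas_to_viewport(cx, cy):
    """Map a canvas pixel (origin at the canvas center) to a viewport point."""
    return (cx * VW / CW, cy * VH / CH, D)

def field_of_view_degrees(vw, d):
    """Horizontal field of view of a viewport of width vw at distance d."""
    return math.degrees(2.0 * math.atan((vw / 2.0) / d))

print(canvas_to_viewport(300, 0))               # (0.5, 0.0, 1.0)
print(round(field_of_view_degrees(VW, D), 2))   # 53.13
```

The right edge of the canvas maps to the right edge of the viewport (x = Vw/2), and the computed angle matches the roughly 53-degree field of view implied by Vw = d = 1.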

    TraceRay(O, D, t_min, t_max) {
        closest_t = inf
        closest_sphere = NULL
        for sphere in scene.spheres {
            t1, t2 = IntersectRaySphere(O, D, sphere)
            if t1 in [t_min, t_max] and t1 < closest_t {
                closest_t = t1
                closest_sphere = sphere
            }
            if t2 in [t_min, t_max] and t2 < closest_t {
                closest_t = t2
                closest_sphere = sphere
            }
        }
        if closest_sphere == NULL {
            return BACKGROUND_COLOR
        }
        return closest_sphere.color
    }

    IntersectRaySphere(O, D, sphere) {
        r = sphere.radius
        CO = O - sphere.center
        a = dot(D, D)
        b = 2*dot(CO, D)
        c = dot(CO, CO) - r*r
        discriminant = b*b - 4*a*c
        if discriminant < 0 {
            return inf, inf
        }
        t1 = (-b + sqrt(discriminant)) / (2*a)
        t2 = (-b - sqrt(discriminant)) / (2*a)
        return t1, t2
    }
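The pseudocode above can be translated into a small runnable Python sketch. This is one possible translation, not the source's implementation: the scene contents, the tuple layout `(center, radius, color)`, and the snake_case function names are assumptions made for illustration.

```python
import math

BACKGROUND_COLOR = (255, 255, 255)

# Hypothetical scene: each sphere is (center, radius, color).
SPHERES = [
    ((0.0, -1.0, 3.0), 1.0, (255, 0, 0)),  # red
    ((2.0, 0.0, 4.0), 1.0, (0, 0, 255)),   # blue
]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def intersect_ray_sphere(o, d, center, radius):
    """Return both ray parameters t where o + t*d meets the sphere (inf if none)."""
    co = tuple(oi - ci for oi, ci in zip(o, center))
    a = dot(d, d)
    b = 2.0 * dot(co, d)
    c = dot(co, co) - radius * radius
    discriminant = b * b - 4.0 * a * c
    if discriminant < 0:
        return math.inf, math.inf
    root = math.sqrt(discriminant)
    return (-b + root) / (2.0 * a), (-b - root) / (2.0 * a)

def trace_ray(o, d, t_min, t_max):
    """Return the color of the closest sphere hit within [t_min, t_max]."""
    closest_t, closest_color = math.inf, None
    for center, radius, color in SPHERES:
        for t in intersect_ray_sphere(o, d, center, radius):
            if t_min <= t <= t_max and t < closest_t:
                closest_t, closest_color = t, color
    return BACKGROUND_COLOR if closest_color is None else closest_color

origin = (0.0, 0.0, 0.0)
print(trace_ray(origin, (0.0, -0.25, 1.0), 1.0, math.inf))  # (255, 0, 0)
print(trace_ray(origin, (0.0, 0.5, 1.0), 1.0, math.inf))    # (255, 255, 255)
```

The first ray points slightly downward and hits the red sphere; the second points upward, misses both spheres, and returns the background color. In a full renderer, `trace_ray` would be called once per canvas pixel from the main loop.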

Real-Time Ray Tracing 3

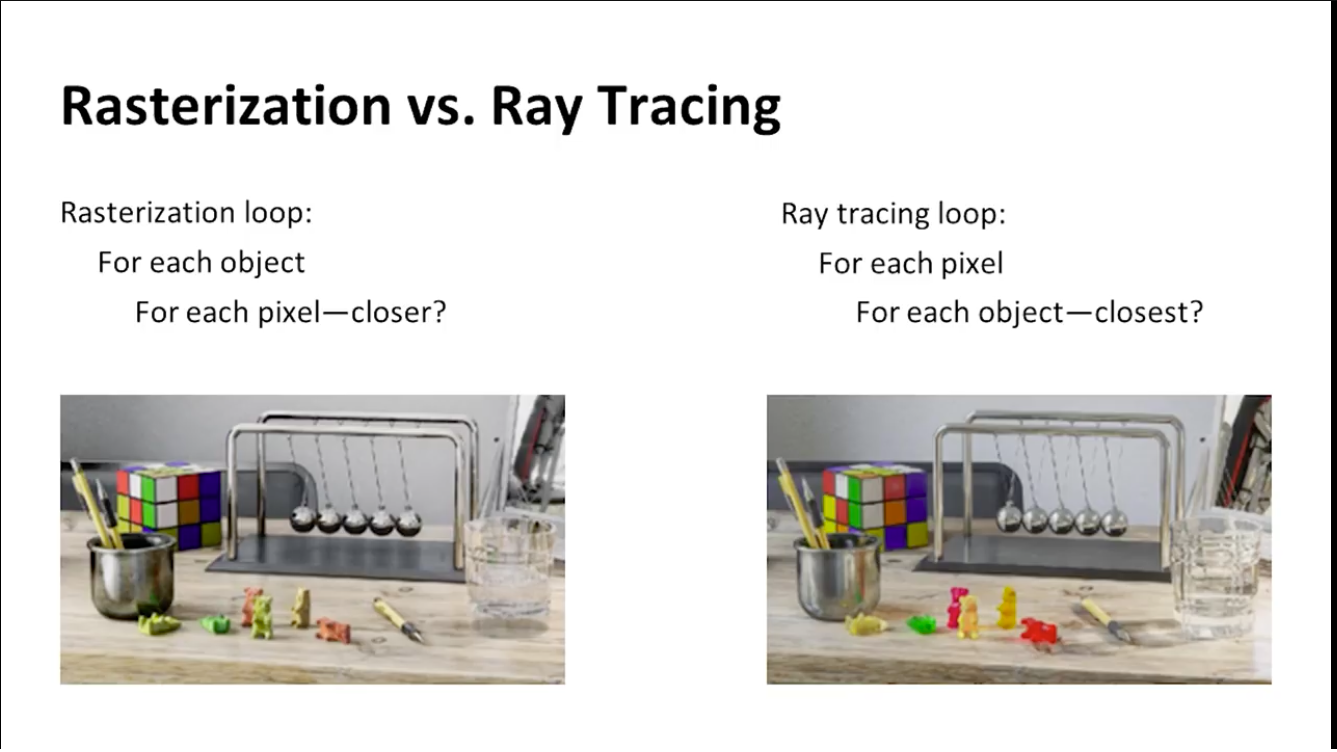

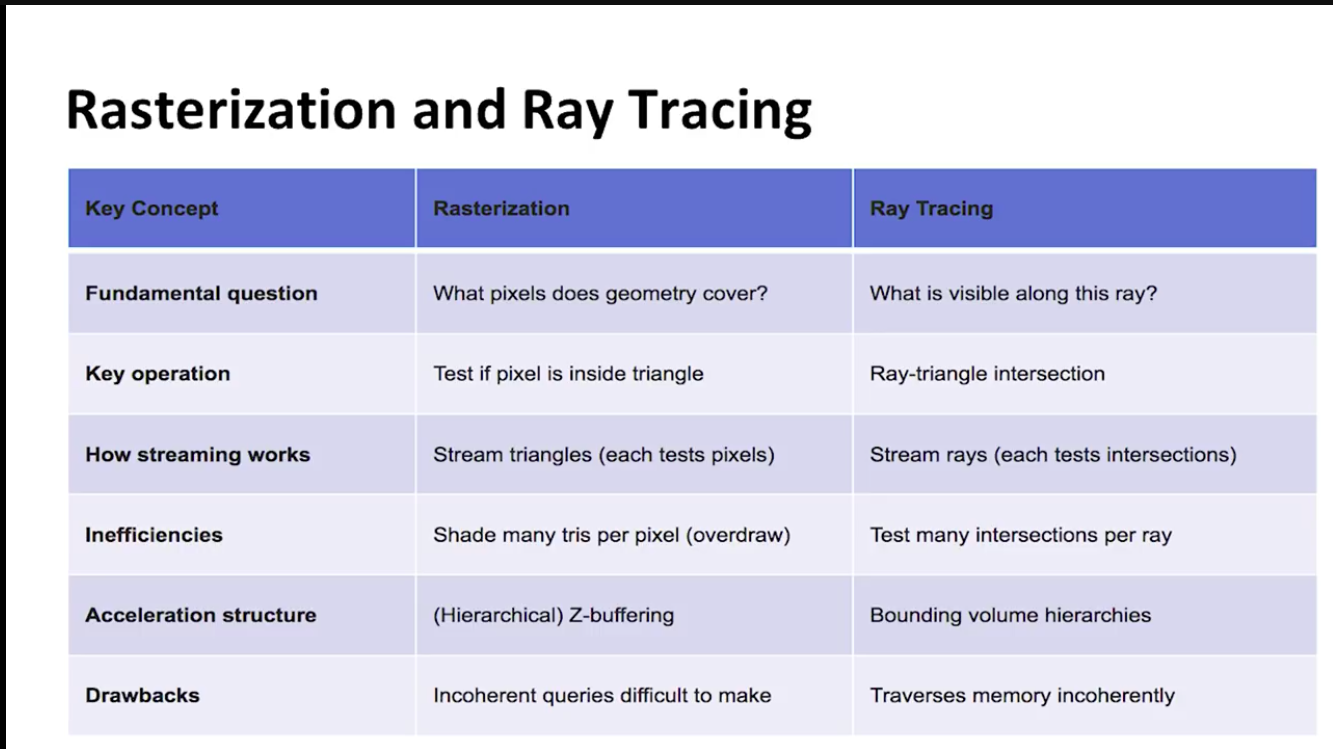

- Computer graphics has two main techniques for generating a 2D image from a 3D scene description: rasterization and ray tracing.

Rasterization

- It is the dominant algorithm for interactive computer graphics today.

- It is implemented in all graphics chips.

- Conceptually, it takes a single triangle at a time, projects it to the screen, and paints all covered pixels (subject to the Z-buffer and other tests, and to more or less complex shading computations).

- Because the hardware has no knowledge of the scene, it must process every triangle. This leads to linear complexity with respect to scene size: twice the number of triangles means twice the rendering time.

- The basic operation of rasterization is sequential:

- Each triangle sent by the application is projected, one after another, onto the 2D screen.

- The pixels covered by the triangle are then computed, and each one is colored according to programmable shader functions.

Ray tracing

- It starts by shooting a ray for each pixel into the scene and uses advanced spatial indexes (also known as acceleration structures) to quickly locate the geometric primitive that is hit.

- Because these indexes are hierarchical, they allow for logarithmic complexity: above roughly 1 million triangles, the rendering time hardly changes any more.

- Steps:

- Ray generation: generate a ray from the camera through each pixel in the 2D viewport.

- Ray traversal: take the ray and traverse the spatial index to locate the first hit point of the ray with a geometric object.

- Intersection: compute the exact intersection of the ray with the geometric primitive (e.g. a triangle).

- Shading:

- Compute the color of the returned light ray, that is, the pixel color.

- We may need to send shadow rays to determine whether the point is in shadow and adjust the color accordingly.

- For refraction or reflection, new rays are sent to find out how much light arrives from those particular directions.

- Frame buffer update.

- Advantages:

- Highly realistic images by default.

- Physical correctness and dependability.

- Support for massive scenes.

- Integration of many different primitive types.

- Declarative scene description.

- Real-time global illumination.

- Example: a fully ray-traced car headlamp, where faithful visualization requires up to 50 rays per pixel.

Ray Casting and Rendering 4

- “Rendering” refers to the entire process that produces color values for pixels, given a 3D representation of the scene.

- Barycentric Definition of a Plane:

- A (non-degenerate) triangle (a,b,c) defines a plane.

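- Any point P on this plane can be written as \(P(\alpha, \beta, \gamma) = \alpha a + \beta b + \gamma c\), with \(\alpha + \beta + \gamma = 1\). The point lies inside the triangle when all three weights are non-negative.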
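The barycentric definition can be illustrated with a short Python sketch. The function name `point_from_barycentric`, the weight names `alpha`, `beta`, `gamma`, and the sample triangle are assumptions made for illustration.

```python
def point_from_barycentric(a, b, c, alpha, beta, gamma):
    """Return the point alpha*a + beta*b + gamma*c on the plane of triangle (a, b, c).

    The three weights must sum to 1 for the point to lie on the plane.
    """
    assert abs(alpha + beta + gamma - 1.0) < 1e-9, "weights must sum to 1"
    return tuple(alpha * ai + beta * bi + gamma * ci
                 for ai, bi, ci in zip(a, b, c))

# Sample triangle in the z = 0 plane.
a, b, c = (0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0)

print(point_from_barycentric(a, b, c, 0.25, 0.5, 0.25))  # (2.0, 1.0, 0.0)
print(point_from_barycentric(a, b, c, 1.0, 0.0, 0.0))    # (0.0, 0.0, 0.0)
```

Setting one weight to 1 and the others to 0 returns the corresponding vertex, and weights that are all between 0 and 1 give a point inside the triangle.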

Rasterization vs Ray Tracing 5

- Time complexity:

- Rasterization: O(N) complexity.

- Ray tracing: O(log N) complexity.

- N is the number of triangles in the scene.
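As a rough worked example of what this difference means, assume an idealized balanced binary hierarchy over the triangles, in which a ray visits on the order of \(\log_2 N\) levels (this model is an assumption for illustration, not something stated in the source):

\[
\log_2(10^6) \approx 19.9, \qquad \log_2(2 \times 10^6) = \log_2(10^6) + 1 \approx 20.9
\]

Under that assumption, doubling the scene from 1 million to 2 million triangles adds about one level of traversal per ray (roughly 5% more work), whereas the O(N) cost of rasterization doubles.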

Linear Algebra 6

- A point represents a position within a coordinate system.

- A vector represents the difference between two points. Intuitively, imagine a vector as an arrow that connects a point to another point; alternatively, think of it as the instructions to get from one point to another.

- Vectors are characterized by their direction (the angle in which they point) and their magnitude (how long they are).

- The direction can be further decomposed into orientation (the slope of the line they’re on) and sense (which of the possible two ways along that line they point).

- You can compute the magnitude of a vector from its coordinates. The magnitude is also called the length or norm of the vector. It’s denoted by putting the vector between vertical pipes, as in \(|\vec{V}|\).

- \(|\vec{V}| = \sqrt{V_x^2 + V_y^2 + V_z^2}\).

- A vector with a magnitude equal to 1.0 is called a unit vector.
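These definitions can be tried out with a few lines of Python. The helper names `subtract`, `magnitude`, and `normalize` and the sample points are assumptions made for illustration.

```python
import math

def subtract(p, q):
    """Vector from point q to point p (the difference between two points)."""
    return tuple(pi - qi for pi, qi in zip(p, q))

def magnitude(v):
    """Length (norm) of a vector: sqrt(Vx^2 + Vy^2 + Vz^2)."""
    return math.sqrt(sum(vi * vi for vi in v))

def normalize(v):
    """Unit vector with the same direction as v (v must be non-zero)."""
    m = magnitude(v)
    return tuple(vi / m for vi in v)

p, q = (1.0, 2.0, 3.0), (4.0, 6.0, 3.0)
v = subtract(q, p)           # the vector that takes us from p to q
print(v, magnitude(v))       # (3.0, 4.0, 0.0) 5.0
print(normalize(v))          # (0.6, 0.8, 0.0)
```

Dividing a vector by its own magnitude keeps its direction and gives it a magnitude of 1.0, which is how a unit vector is obtained.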

References

1. Learning Guide Unit 8: Introduction | Home. (2025). Uopeople.edu. https://my.uopeople.edu/mod/book/view.php?id=444316&chapterid=540632

2. Gambetta, G. (n.d.). Basic raytracing - Computer graphics from scratch. https://gabrielgambetta.com/computer-graphics-from-scratch/02-basic-raytracing.html

3. Slusallek, P., Shirley, P., Mark, B., Stoll, G., & Wald, I. (n.d.). Introduction to realtime ray tracing. SIGGRAPH 2005. http://www.eng.utah.edu/~cs6965/papers/a1-slusallek.pdf

4. Massachusetts Institute of Technology (2020). Coordinates and Transformations. MIT OpenCourseWare. https://ocw.mit.edu/courses/electrical-engineering-and-computer-science/6-837-computer-graphics-fall-2012/lecture-notes/. (Original work published 2001). Read Lecture #11: Ray Casting and Rendering. Read Lecture #12: Ray Casting II. Read Lecture #13: Ray Tracing.

5. NVIDIA Developer. (2020, February 27). Ray tracing essentials part 2: Rasterization versus ray tracing [Video]. YouTube. https://youtu.be/ynCxnR1i0QY

6. Linear Algebra - Computer Graphics from Scratch - Gabriel Gambetta. (2024). Gabrielgambetta.com. https://gabrielgambetta.com/computer-graphics-from-scratch/A0-linear-algebra.html