DA7. Perceptron and Back Propagation

Statement

In your own words discuss the differences in training between the perceptron and a feed forward neural network that is using a back propagation algorithm.

Solution

Both the perceptron and the feed-forward neural network (FFNN) are types of neural networks used for classification problems. They differ in structure and, as a result, in how they are trained.

Perceptron

The perceptron is a binary classifier introduced by Frank Rosenblatt in 1957. It usually contains a single internal layer along with input and output layers (Bhanola, 2023).

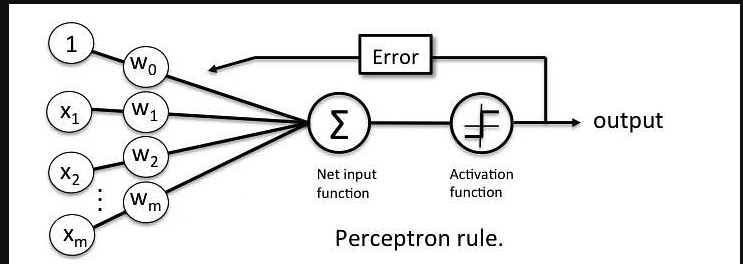

The internal layer accepts the inputs and applies weights to them. The weighted sum is then passed through an activation function that decides whether the output neuron connected to the current neuron should be turned on or off (a binary response).
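The forward computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the cited author's implementation: the step activation, the function names, and the hand-picked weights (chosen so the unit behaves like a logical AND) are all assumptions made for the example.

```python
def step(z):
    """Step activation: the neuron is 'on' (1) when z >= 0, otherwise 'off' (0)."""
    return 1 if z >= 0 else 0


def perceptron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical weights and bias, picked by hand so the unit acts like logical AND.
weights, bias = [1.0, 1.0], -1.5
outputs = [perceptron_output(x, weights, bias)
           for x in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)  # → [0, 0, 0, 1]
```

Only the input `(1, 1)` pushes the weighted sum above zero, so it is the only case where the output neuron turns on.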

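The perceptron is usually a linear classifier that best suits linearly separable classification problems. During training, the error between the predicted and the expected output is calculated and passed back to the internal layer, where it is used directly to adjust the weights.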
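A minimal sketch of this training loop, assuming the classic perceptron learning rule (each weight is moved by `lr * error * input` whenever a sample is misclassified). The function names, the learning rate, the epoch limit, and the AND data set are illustrative choices, not taken from the cited sources.

```python
def predict(x, w, b):
    """Step activation applied to the weighted sum of the inputs."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else 0


def train_perceptron(samples, lr=1.0, epochs=50):
    """Perceptron learning rule: update the weights only on misclassified samples."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(x, w, b)  # one of -1, 0, +1
            if error != 0:
                mistakes += 1
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
        if mistakes == 0:  # every sample is classified correctly, so stop
            break
    return w, b


# Logical AND is linearly separable, so the rule is expected to converge.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data)
predictions = [predict(x, w, b) for x, _ in and_data]
print(predictions)  # → [0, 0, 0, 1]
```

Note that the update uses the raw output error directly; no derivative of the activation function is involved, which is one of the key differences from back propagation.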

The image above, from (Bhanola, 2023), shows a perceptron with m inputs (and weights) and 1 output.

Feed Forward Neural Network

A feed-forward neural network (FFNN) is a type of neural network that has an input layer, an output layer, and one or more hidden layers. The input layer receives the input data and passes it to the first hidden layer, which processes the data and passes it to the next hidden layer, and so on until the output layer is reached (Turing, n.d.).

Processed inputs travel through the layers in one direction, from the input layer to the output layer, hence the name feed forward. During training, however, errors are calculated at the output and passed back through the previous layers to adjust their weights (back propagation).
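To make the forward and backward passes concrete, here is a small sketch of a network with 2 inputs, 2 hidden neurons, and 1 output. It assumes sigmoid activations, a squared-error loss, plain gradient descent, and arbitrary starting weights; all of these, along with the function names, are assumptions made for illustration rather than details from the cited sources.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass: input -> hidden layer -> single output neuron."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y


def backward(x, target, h, y, W2):
    """Backward pass for the loss 0.5 * (y - target)**2, using the chain rule."""
    delta_out = (y - target) * y * (1.0 - y)  # error signal at the output
    grad_W2 = [delta_out * hi for hi in h]
    grad_b2 = delta_out
    # The output error is passed back to each hidden neuron through its weight.
    delta_hidden = [delta_out * w * hi * (1.0 - hi) for w, hi in zip(W2, h)]
    grad_W1 = [[d * xi for xi in x] for d in delta_hidden]
    grad_b1 = delta_hidden
    return grad_W1, grad_b1, grad_W2, grad_b2


def loss(x, target, W1, b1, W2, b2):
    _, y = forward(x, W1, b1, W2, b2)
    return 0.5 * (y - target) ** 2


# Arbitrary illustrative starting weights and a single training sample.
W1, b1 = [[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2]
W2, b2 = [0.7, -0.6], 0.05
x, target, lr = [1.0, 0.0], 1.0, 0.5

loss_before = loss(x, target, W1, b1, W2, b2)
for _ in range(100):
    h, y = forward(x, W1, b1, W2, b2)
    gW1, gb1, gW2, gb2 = backward(x, target, h, y, W2)
    # Gradient descent: move every weight against its gradient.
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    W2 = [w - lr * g for w, g in zip(W2, gW2)]
    b2 -= lr * gb2
loss_after = loss(x, target, W1, b1, W2, b2)
print(loss_before, loss_after)  # the loss should be smaller after training
```

The hidden-layer gradients depend on `delta_out` and on the derivative of the activation function, so the error has to be propagated layer by layer. A single-layer perceptron has no such step, since its only weights connect directly to the output.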

In other words, a feed-forward network with one or more hidden layers is a multi-layer perceptron (MLP) (Turing, n.d.).

An FFNN is a non-linear classifier that best suits non-linear classification problems.

FFNNs are usually more computationally intensive than perceptrons, as they have more layers and more neurons.

Conclusion

We saw that FFNNs are usually more complex than perceptrons in terms of the problems they solve, their computational cost, and the number of layers in the network. It is usually reasonable to start with a simple perceptron model and then move towards a more complex model as the problem requires.

References

- Banola M. (2023). What is Perceptron: A Beginners Guide for Perceptron. https://www.simplilearn.com/tutorials/deep-learning-tutorial/perceptron

- Turing. (n.d.). Understanding Feed Forward Neural Networks With Maths and Statistics. https://www.turing.com/kb/mathematical-formulation-of-feed-forward-neural-network